- Review Article

- Abstract

- Introduction

- Systematic Trial-Based Instruction: Definition and Evidence-Base

- Three Core Phases of Systematic Trial-Based Instruction: Some Details

- Evidence-Base for Systematic Trial-Based Instruction

- Presenting Efficient Instruction to Address Students’ Rates of Learning and Skill Maintenance

- Depictions of Increased Instructional Efficiency Through Systematic Trial-Based Instruction

- Instructional Density: An Avenue to Instructional Efficiency and Individualized Instruction

- Practical Reasons for Using Systematic Trial-Based Instruction

- Conclusion

- References

Systematic Trial-Based Instruction: An Evidence-Based Approach to Presenting Remedial Instruction in a Multi-Tier System of Support

Timothy E Morse*

University of West Florida, USA

Submission: January 10, 2023; Published: January 26, 2023

*Corresponding author: Timothy E Morse, University of West Florida, USA

How to cite this article: Timothy E M. Systematic Trial-Based Instruction: An Evidence-Based Approach to Presenting Remedial Instruction in a Multi-Tier System of Support. Glob J Intellect Dev Disabil. 2023; 11(2): 555807. DOI:10.19080/GJIDD.2023.11.555807

Abstract

Students who require remedial instruction manifest a multitude of instructional needs. Paramount among them are sufficient response repetitions to acquire new content, ways to increase their relatively slow rates of learning, and opportunities to engage in activities that will enable them to maintain learned content. Teachers tasked with providing remedial instruction, such as those who present Tier 2/3 instruction in a multi-tier system of supports (MTSS), need interventions that can address these students’ instructional needs either singly or in combination, and effective and efficient interventions are preferable. This paper explains an evidence-based practice that can meet these needs: systematic trial-based instruction. This instructional approach is predicated on student opportunities to respond, but it readily allows for embellishments that increase instructional efficiency by increasing students’ learning rates and helping them maintain previously mastered content. The approach is first defined and explained in terms of its three core phases. Next, supporting evidence regarding its effectiveness is presented, as are examples of its use. These examples are followed by a discussion of a concept, called instructional density, that has emerged from this approach. The paper concludes with a discussion of reasons why teachers should consider using systematic trial-based instruction. Teachers will find it is a flexible approach that allows them to address both the science and the art of teaching.

Keywords: Teaching; Learning; Students

Introduction

Educators must account for many factors when planning to present effective instruction. Among them are an appropriate environmental arrangement, proper time management, which curriculum to teach, which instructional strategy to employ, and how to conduct valid assessments to document student achievement [1].

Another factor is the number of repetitions, or opportunities to respond, that students will be required to perform while in the process of mastering a targeted learning outcome. The significance of providing students with opportunities to respond in order to acquire new content has been well established [2]. However, considerable variability exists among learners with respect to the number of opportunities they require to master new content [3].

This variability is particularly noteworthy with respect to students who manifest learning challenges. This group includes students who, within a school’s multi-tier system of support (MTSS) framework, have been identified as needing remedial instruction in Tier 2 or subsequent tiers. For some of these students, remedial instruction will involve the provision of special education services. It has been estimated that 20% of all students will need remedial Tier 2 services and that 5%-10% will present persistent, significant learning challenges requiring ongoing remedial instruction [4].

Gersten et al. [5] provided data that reveal how many opportunities to respond certain students may need. They remarked that, to learn new content, students presenting learning challenges frequently need 10-30 times more practice opportunities than their peers who are performing at grade level. Shanahan [3] presented an example concerning one specific outcome: learning a new word. Summarizing the work of others [6], Shanahan remarked that poor readers need up to three times as many repetitions as better readers to learn a new word. However, he also indicated that the number of repetitions could be much greater for certain students, since the number needed varies across both students and the types of words to be learned.

Others have highlighted the importance of providing students in need of remedial instruction in an MTSS framework with increased opportunities to respond. Within a typical MTSS framework, students who do not demonstrate adequate grade-level progress upon receiving general education classroom instruction in Tier 1 are identified as needing Tier 2 remedial instruction. An oft-cited approach to this type of Tier 2 instruction is that advocated by Fuchs and Fuchs [7]. They argued in favor of using standardized platforms to present small group instruction at the start of Tier 2 services. A standardized platform is an evidence-based program that is used with a group of students who share the same academic achievement deficit. Such a program is prescriptive: it specifies the instructional strategies and materials to use, the number of sessions to present each week, and the length of each session.

Data indicate that some students’ academic achievement deficits will not be resolved through this type of remedial instruction (e.g., the 5%-10% of students identified above). Recognizing this fact, Fuchs et al. [8] offered suggestions, in the form of a taxonomy, for adjusting Tier 2 standardized program instruction for the purpose of developing an effective, individualized program for a still-struggling student. A first-step adjustment involves increasing a student’s instructional dosage.

Increased dosage refers to providing a student with more instructional sessions and more time in each session. However, the real focus of this program adjustment is to allow for more student opportunities to respond and receive relevant feedback. Likewise, explicit instruction, which is another component of Fuchs et al.’s taxonomy, also emphasizes the need to provide students with opportunities to respond and receive feedback.

Altogether, this means that teachers who are tasked with presenting some type of remedial instruction need information about ways to design and implement instruction that is predicated on student responding. That is to say, having students emit responses is the paramount planning consideration, followed by considerations about how to design instruction so that it addresses these students’ other learning characteristics.

Two of these characteristics that are relevant to this paper are learning content at a slower rate and demonstrating difficulty maintaining learned content [9-11]. Teachers would benefit from knowing ways to present instruction that, first and foremost, provides students with needed opportunities to respond and also efficiently addresses the need to increase these students’ rates of learning and provide them with opportunities to engage in activities that will result in their maintenance of acquired content.

Ironically, numerous studies have demonstrated how to present instruction such that students can acquire new content without being given an opportunity to respond [12-14]. Furthermore, this presentation method is supported by research about ways to ensure task maintenance [15]. What is important to note is that the basis of these studies was an instructional approach that was centered on students’ opportunities to respond in order to acquire targeted learning outcomes. Strategies that were added to this approach were designed to teach students new, peripheral content. All told, the studies reported how increased instructional efficiency was realized as students mastered a targeted learning outcome and acquired related content.

This paper presents information about an instructional approach, systematic trial-based instruction, that focuses on providing a student with opportunities to respond. Additionally, this paper explains how systematic trial-based instruction can be enhanced to increase instructional efficiency by simultaneously increasing a student’s rate of learning and enhancing his maintenance of acquired content. While the approach can be used to address both academic and school social behaviors, the focus here is on academic instruction.

Systematic trial-based instruction is defined and explained in terms of its three core phases. Existing, supporting evidence regarding this approach’s effectiveness is presented, as are examples of its use in a 1:1 and small group arrangement. These examples are followed by a discussion of a concept, called instructional density, that has emerged from this approach. The paper concludes with a discussion of reasons why teachers should consider using this approach.

Systematic Trial-Based Instruction: Definition and Evidence-Base

Systematic trial-based instruction stands for a class of trial-based approaches that share a basic three-component structure: the presentation of a task direction, an opportunity for a student response, and a planned response contingency. Additionally, systematic trial-based instruction is defined by the fact that strategic planning is involved in the particular design of the three-component structure, meaning that each of the three components serves a clearly defined purpose. The term systematic refers to this strategic planning.

The three components, which occur in quick succession, comprise what is known as a trial. A straightforward definition for the term trial is an opportunity to do something. In the case of presenting remedial academic instruction to a student, a trial involves providing the student an opportunity to engage in an academic task. Examples include naming letters of the alphabet, decoding consonant-vowel-consonant words, stating the product of a multiplication fact, and solving for the variable in a linear algebraic equation.

While considering the content below, it is important to remain clear about the distinction between a trial and a session. Each opportunity a student is given to engage in an academic task involves a trial, and the time during which all of the trials occur is referred to as a session. For instance, a student may be given 25 separate opportunities (or trials) to state the product of a multiplication fact. Altogether, these 25 opportunities to respond constitute an instructional session.
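For readers who record trial data electronically, the trial/session distinction can be pictured as a simple data record. The following is a minimal, hypothetical Python sketch, not a tool described in this paper: the class names `Trial` and `Session`, their fields, and the assumption that 20 of the 25 multiplication-fact trials are answered correctly are illustrative only.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Trial:
    """One opportunity to respond (hypothetical record format)."""
    task_direction: str  # e.g., "State the product."
    response_item: str   # e.g., "3 x 4"
    correct: bool        # scored at the end of the performance phase


@dataclass
class Session:
    """All of the trials presented during one instructional period."""
    trials: List[Trial] = field(default_factory=list)

    def opportunities_to_respond(self) -> int:
        # Each trial is one opportunity to respond.
        return len(self.trials)

    def percent_correct(self) -> float:
        if not self.trials:
            return 0.0
        return 100.0 * sum(t.correct for t in self.trials) / len(self.trials)


# Hypothetical session: 25 multiplication-fact trials, the first 20 correct.
facts = [f"{a} x {b}" for a in range(2, 7) for b in range(2, 7)]  # 25 facts
session = Session([Trial("State the product.", fact, correct=(i < 20))
                   for i, fact in enumerate(facts)])
print(session.opportunities_to_respond())  # 25
print(session.percent_correct())           # 80.0
```

Keeping each trial as its own record mirrors the point above: the session is simply the collection of trials, and summary data such as percent correct are derived from them.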

Three Core Phases of Systematic Trial-Based Instruction: Some Details

The basic three-component structure serves as the foundation for systematic trial-based instruction. However, each component is best explained with respect to its corresponding phase within a trial. Describing a trial in terms of phases allows for a detailed discussion of the numerous instructional strategies that can be employed during a trial for various purposes. These purposes include (a) providing a student with opportunities to respond to acquire new content, (b) presenting information in a way that increases a student’s rate of learning through processes known as incidental and observational learning, and (c) enabling a student to engage in activities that support his maintenance of mastered content.

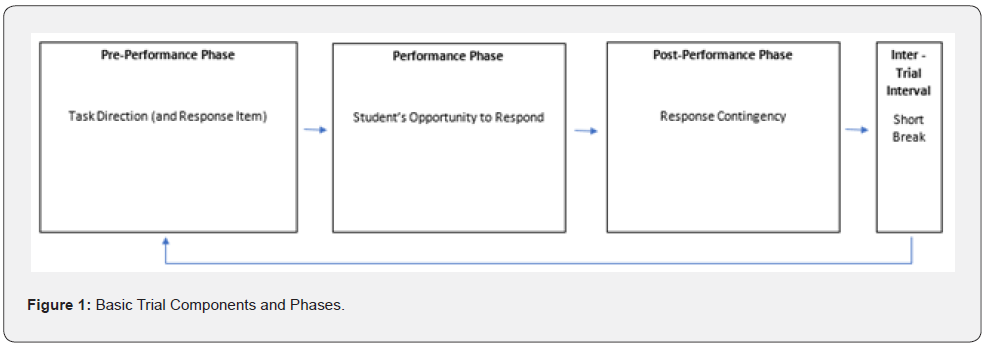

The three trial phases are the pre-performance phase, performance phase, and post-performance phase. Figure 1 depicts the relationship between these phases and a trial’s basic three-component structure. Also, the figure depicts the inter-trial interval that follows each trial within an instructional session. This interval is a short break that allows for the clear separation of one trial from another. During this break a teacher can record student performance data and prepare for the presentation of the next trial, and a student can engage with reinforcement provided for either a correct response or the display of appropriate effort.

It is important to note that the terminology used here to describe the three trial phases differs from, yet is equivalent to, the terminology that routinely is used elsewhere to describe the trial-based approaches highlighted in the section below about evidence-based practices. These evidence-based practices are based on the principles of applied behavior analysis (ABA), which is a professional discipline that involves the scientific study of the relationship between environmental events and human behavior. ABA’s specialized lexicon allows for precise communication among those who are well-versed in its meanings. Yet, this type of communication likely is not readily understood by those outside the discipline. Consequently, terminology that has a higher probability of being understood by a non-ABA specialist is presented here.

Pre-performance phase

The pre-performance phase, which is commonly referred to elsewhere as the antecedent phase, involves all of the activities that occur before the student is given an opportunity to respond. This opportunity marks the start of the performance phase, which occurs next. The student’s opportunity to respond is triggered by the presentation of the task direction.

The three activities that occur most often in the pre-performance phase are the teacher’s presentation of (a) the task direction, (b) a response item, and (c) a prompt. The task direction is a short statement that tells the student what he is to do, such as name a letter or state a sum. A response item, such as an index card with a vocabulary word, is an item that represents the academic skill identified in the targeted learning outcome. A prompt is any additional information, beyond the task direction and response item, that is presented to the student and is intended to solicit a correct response. An example of a prompt would be telling a student the first two sounds in a vocabulary word that he is learning to read.

Performance phase

This phase is most commonly referred to as the behavior phase, but here it is referred to as the performance phase. During this phase the student is provided an opportunity to emit a response that matches the targeted learning outcome. For instance, if the targeted learning outcome is for the student to correctly answer basic addition facts, the student might be directed to orally state the sum for a fact, write it on a whiteboard, or hold up a response card that displays the sum.

In actual practice the performance phase consists of the student’s attempt, or lack thereof, to perform the targeted learning outcome. That is to say, a student may engage in an overt behavior that is deemed to be either a correct or incorrect response with respect to the targeted learning outcome. Alternatively, the student may not engage in any behavior during the period of time that is allowed for a response. This lack of a response is often referred to as a “no response” and is considered to be a type of incorrect response. All of a student’s attempts are considered to be opportunities to respond, and they are the cornerstone of systematic trial-based instruction.
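The three possible outcomes of the performance phase can be expressed as a small scoring rule. This is a minimal, hypothetical Python sketch: the function names and the 5-second response window are assumptions for illustration, since the paper does not specify how long a student is allowed to respond.

```python
from typing import Optional


def score_response(response: Optional[str], latency_s: Optional[float],
                   target: str, response_window_s: float = 5.0) -> str:
    """Score one performance phase as 'correct', 'incorrect', or 'no response'.

    response_window_s is a hypothetical 5-second allowance, not a value
    taken from the paper.
    """
    # No overt behavior occurred within the period allowed for a response.
    if response is None or latency_s is None or latency_s > response_window_s:
        return "no response"
    # An overt behavior occurred: compare it with the targeted learning outcome.
    return "correct" if response.strip() == target else "incorrect"


def is_correct(score: str) -> bool:
    """A 'no response' is counted as a type of incorrect response."""
    return score == "correct"


# Every scored attempt, including a "no response", is an opportunity to respond.
attempts = [("7", 2.0), ("8", 1.5), (None, None)]
scores = [score_response(r, t, target="7") for r, t in attempts]
print(scores)                              # ['correct', 'incorrect', 'no response']
print(sum(is_correct(s) for s in scores))  # 1
```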

Post-performance phase

This phase is most commonly referred to as the consequence phase. The post-performance phase consists of the planned contingencies that are based on what occurs during the preceding performance phase.

As is the case during the other phases, many activities can occur during this phase. The primary activity is the instructor’s provision of what is called corrective or affirmative feedback to the student. For example, the teacher may say, “No,” to inform the student that her performance was incorrect or, “Yes,” to indicate that her performance was correct.

Note that in some instances a clear demarcation between the three phases does not exist. Rather, some overlap can occur across the three phases. For instance, a technique known as response interruption and redirection may be used. As its name implies, it involves disrupting the student’s incorrect response (which occurs during the performance phase) and providing the student with additional information so that she produces a correct response.

Evidence-Base for Systematic Trial-Based Instruction

Systematic trial-based instruction, in and of itself, has not been identified as an evidence-based practice through an evidence-based review [16]. Yet, in this paper it is referred to as such because other trial-based procedures that belong to the class referred to as systematic trial-based instruction have been identified as evidence-based practices. Two of these are described below: discrete trial teaching (DTT) and response prompting procedures.

DTT and response prompting procedures are described here in a way that allows for a comparison of the opposite ends of a continuum that depicts trial-based approaches as being mostly student-directed or mostly teacher-directed. At one end of the continuum is a type of trial-based instruction referred to as trial-and-error. It consists of minimal teacher involvement that is employed to guide relatively independent student responding. The other end of the continuum involves conspicuous, active teacher involvement that directs student responding so that near errorless learning occurs.

Discrete trial teaching

Like systematic trial-based instruction, discrete trial teaching (DTT) refers to an approach to presenting instruction. This approach is called discrete because of the clearly defined beginning and end of the instructional sequence, which allows for a concentration of effort in between [17]. DTT has a history with respect to the presentation of effective instruction to individuals with autism [18] and has been identified as an evidence-based practice in this regard [19].

Discrete trial teaching involves the basic three-component features of systematic trial-based instruction [18,20]. One well-known type of DTT is trial-and-error learning. Trial-and-error learning involves the presentation of a brief task direction by the teacher, an independent student response, and then a contingent response from the teacher. The teacher differentially responds to correct and incorrect responses. With this approach, the student discerns the correct response because the contingency associated with it is more desirable than the contingency associated with an incorrect response. Trial-and-error learning has been particularly effective in teaching children to make discriminations.

For instance, to teach a student to discriminate the color red, a trial-and-error protocol would begin with the teacher presenting a red block and a green ball to the student. Next, the teacher would present the task direction, “Touch red.” If the student touches red, he is allowed to engage with a highly preferred toy for 15 seconds. However, if he touches green the teacher says, “No,” and withdraws her direct attention for three seconds. The instructor’s different, contingent responses to the student’s independent performances are used by the student to learn how to discriminate red items from other colored items. He achieves this outcome by figuring out that when he responds correctly to the task direction, “good things happen,” whereas all other independent responses result in a much less preferred outcome. The teacher’s most important role throughout this protocol occurs in the post-performance phase of the trial.
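The teacher’s differential responding in the “Touch red” example can be written as a lookup from the student’s independent response to the planned contingency. This is a minimal, hypothetical Python sketch: the names `STIMULI` and `post_performance` are illustrative, while the 15-second and 3-second values come from the example above.

```python
# Hypothetical array from the "Touch red" example: item -> its color.
STIMULI = {"red block": "red", "green ball": "green"}


def post_performance(touched_item: str, target_color: str = "red") -> dict:
    """Return the planned contingency for the student's independent response."""
    if STIMULI[touched_item] == target_color:
        # Correct: 15 seconds of access to a highly preferred toy.
        return {"feedback": None, "event": "access to preferred toy", "seconds": 15}
    # Incorrect: the teacher says "No" and withdraws direct attention for 3 seconds.
    return {"feedback": "No", "event": "attention withdrawn", "seconds": 3}


print(post_performance("red block"))
print(post_performance("green ball"))
```

Because the teacher supplies no prompt, all of the instructional work in this sketch happens after the response, which matches the observation that the post-performance phase carries the teacher’s most important role in this protocol.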

Response prompting procedures

Response prompting procedures are another member of the class of systematic trial-based instruction. These procedures are discussed here because they (a) involve the basic three components of systematic trial-based instruction; (b) have, in some instances, been established as evidence-based practices [21]; and (c) are predicated on a significant amount of teacher involvement. This means that the teacher typically performs as important, and noticeable, a role in the pre-performance phase of the trial as in the post-performance phase [22].

Response prompting procedures involve the delivery of prompts in various ways during the pre-performance phase to produce what is referred to as near errorless learning [23]. In this regard, they are more of a teacher-directed trial-based approach than is the type of DTT discussed previously. Response prompting procedures are predicated on the use of what are referred to as controlling prompts to establish a very high probability that the student will perform the correct targeted learning outcome. A controlling prompt is one that involves a type of assistance that has been shown to nearly guarantee a correct student response. Over time, the prompt fades so that the student responds correctly without it. During the post-performance phase, the teacher responds in a manner similar to that described in the discussion about DTT.

The guidelines for presenting the controlling prompt vary. This variation has resulted in the establishment of different response prompting procedures, including the system of least prompts, graduated guidance, most to least prompting, simultaneous prompting, constant time delay, and progressive time delay [24]. Recently, two of these procedures, constant time delay [25] and simultaneous prompting [21], have been identified as evidence-based practices.

Merging databases

It must be noted that DTT can be configured in ways that mirror many of the response prompting procedures. Since DTT is an instructional approach that incorporates principles of applied behavior analysis, and there are countless established principles as well as evolving ones, it is impossible to depict a uniform type of DTT beyond the basic three-component structure.

This point is critical because it highlights the fact that the features of differently named trial-based approaches to instruction need to be examined collectively in order to develop a full understanding of the phenomena under consideration. In other words, using the term systematic trial-based instruction sets the occasion to merge separate databases that are distinct yet share certain commonalities. Altogether, they allow for a rich discussion of these phenomena.

Presenting Efficient Instruction to Address Students’ Rates of Learning and Skill Maintenance

Thus far, systematic trial-based instruction has been explained as an approach that allows a student to engage in the number of repetitions needed to acquire new content. However, research has shown how this fundamental approach can be embellished with strategies and features that address two additional learning characteristics of students needing remedial instruction. The research demonstrating how systematic trial-based instruction can be made more efficient has involved participants with and without disabilities, with much greater representation of students with disabilities. This might explain why this instructional approach is not well known even though this research has been conducted over the past 25 years [12].

Regarding the two additional learning characteristics of students needing remedial instruction, one is learning content at a slower rate. Over time, students who exhibit this characteristic will master much less of the curriculum than their better-performing peers. Thus, teachers need to design instruction so that, during the time that it is presented, students are afforded an opportunity to acquire content beyond that associated with a targeted learning outcome. The second learning characteristic is difficulty maintaining mastered content [10,11,26]. When a student does not maintain what he has learned, a teacher will have to spend valuable instructional time re-teaching previously mastered content.

When systematic trial-based instruction successfully addresses these additional learning characteristics, instructional efficiency is increased. Efficient instruction refers to instruction that results in more student achievement with the same (or even less) teacher effort and time spent in instruction than a comparable instructional approach. If one 20-minute lesson, conducted over five days, resulted in a student mastering one targeted learning outcome (e.g., naming a numeral) and a different 20-minute lesson that also was conducted over five days resulted in a student mastering the same targeted learning outcome and acquiring related content (e.g., counting the number of objects the numeral stands for and reading its related number word), the second lesson would be deemed more efficient. Systematic trial-based instruction can be embellished with many instructional strategies to make it maximally efficient. Most of the strategies are employed in the pre- and post-performance phases. Many of the strategies can serve more than one function, and some of the functions depend on whether a 1:1 or small group arrangement is used [27]. Four of the strategies are described next: incidental information, observational learning, error correction, and priming.
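The numeral example above amounts to a simple rate comparison: both lessons use the same 100 minutes of instruction (20 minutes a day for five days), but the second yields more learning. The following minimal Python sketch makes that arithmetic explicit. It assumes, for illustration only, that the numeral, counting its objects, and reading its number word each count as one outcome of equal weight; the paper does not propose such a metric, and `outcomes_per_minute` is a hypothetical name.

```python
from fractions import Fraction


def outcomes_per_minute(outcomes: int, minutes_per_lesson: int, days: int) -> Fraction:
    """Learning outcomes acquired per minute of instructional time (hypothetical metric)."""
    return Fraction(outcomes, minutes_per_lesson * days)


# Both lessons: 20 minutes a day for five days (100 minutes in total).
basic = outcomes_per_minute(1, 20, 5)        # names the numeral only
embellished = outcomes_per_minute(3, 20, 5)  # numeral, counting objects, number word
print(basic, embellished)   # 1/100 3/100
print(embellished / basic)  # 3
```

Exact fractions are used so that the comparison is not blurred by floating-point rounding; with equal time spent, the efficiency ratio reduces to the ratio of outcomes acquired.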

Incidental information

Incidental information is content that is presented during an instructional trial that is tangential to a student’s targeted learning outcome, which serves as the basis for a student’s response during a trial. This means the student is exposed to the incidental information but does not respond to it. Incidental learning is the term used to refer to a student’s acquisition of this information. When incidental learning occurs a student’s rate of learning increases as does instructional efficiency.

As was stated previously, acquiring content in this manner runs counter to the data supporting the importance of response repetitions. Several explanations have been offered for why incidental learning occurs. They include the close relationship of the incidental information to the targeted learning outcome, such that the student deems the information important because the teacher, through her actions, has identified it as important [28].

Research has demonstrated that a student can acquire incidental information that (a) is presented during his trial as well as during the trial of another student who is in the same instructional group; (b) addresses a topic that is directly related to the targeted learning outcome or another outcome altogether; (c) is comprised of more than one piece of information; and (d) is presented in the pre-performance or post-performance phases of a trial. Incidental information presented in the post-performance phase has been referred to as instructive feedback [28].

Observational learning

Observational learning occurs when a student sees another student receive reinforcement for engaging in a targeted learning outcome that is unique to that student. Reinforcement refers to a post-performance event that, when it occurs, increases the probability a student will perform the same behavior in the future under similar circumstances. When observational learning occurs a student’s rate of learning increases as does instructional efficiency.

For observational learning to occur systematic trial-based instruction must involve two or more students: one who engages in an opportunity to respond and another who observes the response. Furthermore, a response contingency that functions as reinforcement must be provided to the student who performs the correct response while the other student observes this contingency.
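The condition described above, that a peer observes a reinforced correct response, can be illustrated by counting exposures in a small group. This is a minimal, hypothetical Python sketch: the three student names, the turn-taking rotation, and the single incorrect response are assumptions chosen for illustration, and each student is assumed to have his or her own targeted learning outcome.

```python
from collections import Counter
from typing import List, Tuple


def exposures(schedule: List[Tuple[str, bool]], group: List[str]):
    """Count each student's own trials and the reinforced trials he or she observed."""
    direct: Counter = Counter()
    observed: Counter = Counter()
    for responder, reinforced in schedule:
        direct[responder] += 1
        # Peers can learn observationally only from a reinforced correct response.
        if reinforced:
            for peer in group:
                if peer != responder:
                    observed[peer] += 1
    return direct, observed


group = ["Ana", "Ben", "Cai"]  # hypothetical three-student group
# Ten rounds in which the students take turns responding to their own targets.
schedule = [(student, True) for _ in range(10) for student in group]
schedule[0] = ("Ana", False)   # Ana's first response is incorrect, so it is not reinforced
direct, observed = exposures(schedule, group)
print(dict(direct))                                     # {'Ana': 10, 'Ben': 10, 'Cai': 10}
print(observed["Ana"], observed["Ben"], observed["Cai"])  # 20 19 19
```

In this sketch each student responds on 10 trials but observes up to 20 reinforced trials for other targets, which is one way to picture how a small group arrangement can raise a student’s rate of learning without adding instructional time.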

Error correction

Error correction involves presenting information about how to correctly perform a task after a student makes an incorrect response. As such, error correction is an appropriate strategy for teaching students a new behavior. Some researchers have focused, almost exclusively, on systematic trial-based instruction that results in very few, or no, student errors [24]. Reasons for their approach include the facts that some students engage in very challenging behaviors when they commit an error, that errors detract from instructional efficiency, and that students may assimilate errors if permitted to practice them. Still, research supports error correction as an effective strategy within a trial-based approach to instruction. For this reason, it is highlighted here.

Priming

Priming refers to exposing a student to content that will be addressed in a future lesson, which may occur in the relatively near or distant future. One reason for using priming to address near-term concerns is that doing so lessens a student’s anxiety about what will occur during the next day’s lesson and, therefore, increases the probability that the student will engage in appropriate behavior throughout that lesson. Over the longer term, research has shown that a student who is exposed to content ahead of time requires less instructional time to acquire it when it later becomes the targeted learning outcome of a lesson [29]. Hence, priming is presented here to show how both student and teacher can benefit from the same strategy, since the teacher will not have to “start from scratch” to teach each new behavior.


Depictions of Increased Instructional Efficiency Through Systematic Trial-Based Instruction

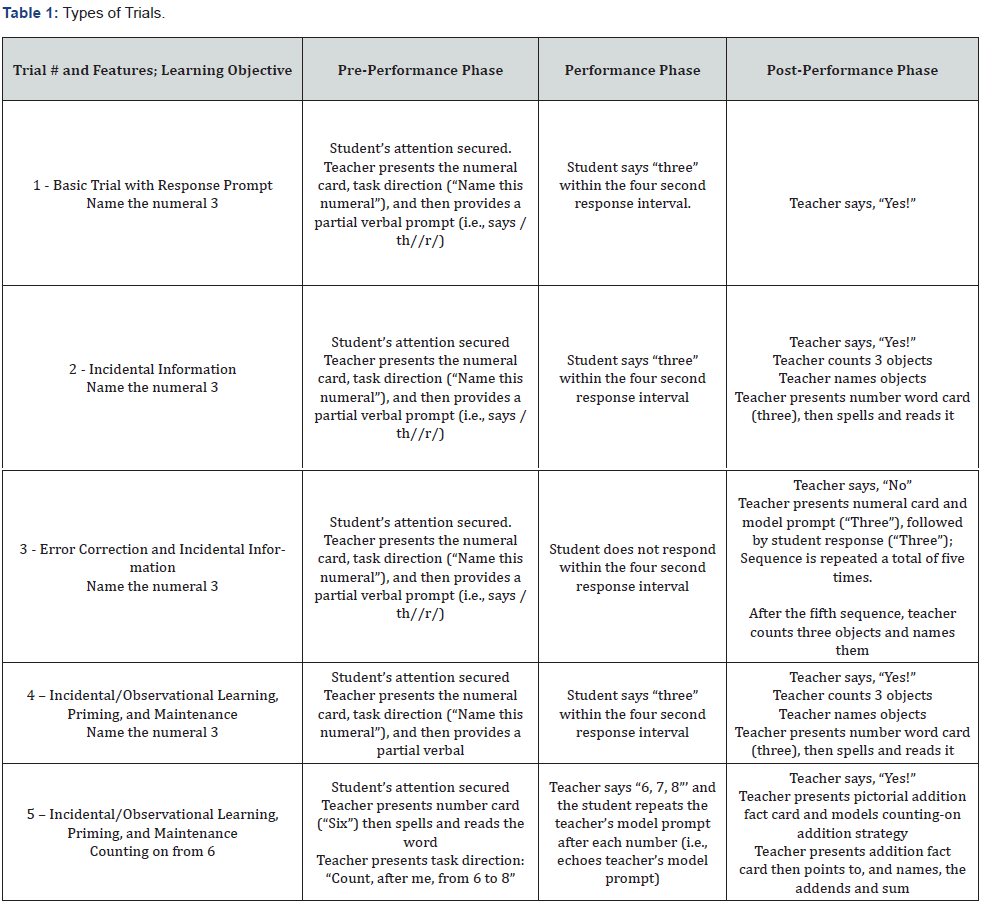

Table 1 depicts five trials that show a progression in the development of systematic trial-based instruction. A basic trial is depicted first, followed by trials that show how the four instructional strategies described previously can be employed.

Trials 1-3 are predicated on a 1:1 instructional arrangement. The targeted learning outcome for these trials is for the student to name the numeral 3. Before presenting the response item and task direction (i.e., showing the student an index card on which the numeral 3 is written and saying, “Name this numeral”), the teacher takes some action to secure the student’s attention (e.g., saying, “Look here”). When the student’s body is oriented toward the teacher and he is looking directly at her, the teacher begins the trial.

Basic trial with prompting

Trial 1 depicts a trial that consists of the basic three-component structure (task direction, student response, and response contingency) with the addition of one item: a partial verbal prompt. The prompt provides the student with information about how to produce the correct response. Since the student is working to acquire this learning outcome, a prompt that shows the student how to perform the task, and that can be faded over time, is an appropriate instructional strategy to add to the basic trial structure.

Trial 1 also depicts how trial-based instruction could be presented to help a student maintain previously mastered content. During maintenance trials, the prompt would be presented only when needed, meaning only after a student makes an error. This type of trial-based instruction would be ideal when a teacher has a brief period of time to present instruction (e.g., 5 minutes or less).

Incidental information in the post-performance phase

Trial 2 depicts the addition of incidental information to the trial sequence. Specifically, four pieces of incidental information have been added to the post-performance phase of the trial. Each piece of information has been added because assessment data indicate that the student has not mastered any learning outcome that is associated with this information. For instance, the student cannot count out three objects when directed to do so, state the name for common objects that are used at school (e.g., name an eraser), or read or spell the number word three.

After the teacher presents corrective feedback (“Yes”) following the student’s correct response, she demonstrates how to count out three objects. She does so to show the student that the numeral three stands for that many objects as well as how to count that number of objects. She then names them (e.g., “These are erasers.”). Finally, she presents an index card on which the number word for 3 (i.e., three) is written. The teacher then spells and reads the word, and ends the trial by explaining to the student that the word can stand for the numeral.

Error correction and incidental information in the post-performance phase

Trial 3 depicts the addition of an error correction procedure and serves as an introduction to the concept of instructional density. Despite the prompt, a student may respond incorrectly. For the purpose of this discussion, Trial 3 depicts a trial in which the student does not emit any response during the designated response interval. Therefore, in the post-performance phase the teacher presents corrective feedback (“No”) followed by an error correction procedure called response repetition. The teacher shows the student the response item and presents a model prompt (i.e., she names the numeral), and the student then names the numeral. This sequence occurs five times, thereby providing the student with five opportunities to respond. After the fifth repetition, the teacher presents only two pieces of instructive feedback: counting out the number of objects represented by the numeral and naming them.

The teacher’s and student’s actions in this trial introduce the concept of instructional density. Instructional density, discussed in the next section, refers to the number of teacher and student actions that can occur during a trial or session. In Trial 3 the teacher decides, because of time limitations, to present less instructive feedback than in the previous trial. In other words, a tradeoff has occurred between the number of opportunities to respond that the student was provided and the amount of incidental information the teacher presented. Time is a primary factor in establishing how much instructional density can be accommodated.

Small group instruction with incidental and observational learning, priming, and maintenance

The scenario for Trial 4 and Trial 5 changes such that the trials that are depicted are presented to students in a small group arrangement (i.e., 1 teacher and 2 students). Trial 4 is presented to the first student in the group and Trial 5 is presented to the second student.

In this scenario, the teacher presents one trial to each student in turn, so the second student observes the first student perform his trial, and then the first student observes the second student perform his. Furthermore, the second student has mastered all of the content being presented to the first student, while the first student has not mastered any of the content presented to the second student.

While the first student’s trial is presented, the second student engages in activities that support his maintenance of mastered content. The teacher could simply direct him to observe the first student or to respond sub-vocally along with the first student. Either way, the second student would be engaging in overlearning, which has been shown to result in long-term maintenance [6].

The targeted learning outcome for the second student is counting forward from a number other than one. In this instance, this student is learning to count forward from 6 to 8. Thus, Trial 5 depicts the following features for this student: incidental information in the antecedent (pre-performance) phase in the form of a number word (six) and its spelling; teacher model prompting in the performance phase to enable the student to acquire the targeted learning outcome; and a pictorial addition problem in the post-performance phase that functions as incidental information about using the counting-on strategy to solve an addition problem and about using precise math vocabulary (i.e., naming the addends and the sum in an addition problem). Some of this information could also function as priming for when the relevant learning objectives are taught directly. Lastly, naming the objects in the picture could function as additional incidental information.

The first student may benefit from the second student’s trial through incidental and observational learning. Incidental learning would occur if the first student learned to read or spell the number word six, use the counting-on strategy when solving a basic addition fact, and name the addends and sum in an addition problem. Observational learning could occur if the first student demonstrated the ability to count from 6 to 8 after seeing the second student do so and receive reinforcement. Additionally, when the first student is later taught any of this content directly, less time than otherwise expected might be needed because of the priming that occurred during the second student’s trial.


Instructional Density: An Avenue to Instructional Efficiency and Individualized Instruction

A few of the ways that systematic trial-based instruction can be embellished and the reasons for doing so have been presented here. It is easy to see that a significant amount of teacher and student behavior can be accommodated in this instructional approach.

The term instructional density refers to the ratio of teacher and student actions to different trial dimensions. For example, instructional density can involve

a) the number of teacher and student actions during an instructional session;

b) the number of teacher and student actions in a single trial;

c) the number of teacher and student actions that take place across all of the students in a group when each student is given one opportunity to complete a trial; and

d) the variable number of actions, per student, in a small group when it is the student’s turn to complete a trial.

An emerging question is how many teacher and student behaviors can occur in a trial while still producing effective and efficient instruction. A trial may become so crowded with teacher and student actions that they detract from a student’s learning. A related question is, “Which strategies are appropriate for which students and can be provided by an adept instructor during the time allocated for instruction?” Two key considerations are the satiation point for a student (i.e., when information overload occurs) and for a teacher (i.e., when she can no longer maintain procedural fidelity because she cannot manage all of the instructional strategies that are to be used).


Practical Reasons for Using Systematic Trial-Based Instruction

Systematic trial-based instruction can evolve into a sophisticated approach to presenting effective and efficient remedial instruction. Thus, the flexibility of this instructional approach is one of its greatest appeals. Yet, there are many other reasons why teachers who present remedial instruction will be drawn to this approach.

1. The approach can be employed in the most often used instructional arrangements when remedial instruction is presented: 1:1 and small groups.

2. This approach to instruction can be employed in any setting that comprises a school’s continuum of alternative placements in fulfillment of the Individuals With Disabilities Education Act’s general least restrictive environment requirement. This includes a general education classroom in which a teacher is tasked to provide Tier 2 small-group remedial instruction.

3. Systematic trial-based instruction can be used during sessions of any length (e.g., 5 minutes or longer). A five-minute session might consist of a teacher repeatedly presenting a task directive and prompt, affording a student response, and providing corrective feedback. During a 30-minute session, a teacher might address three learning objectives while presenting multiple pieces of incidental information and directing students’ active engagement to increase the probability they will demonstrate observational learning.

4. The previous reason highlights how systematic trial-based instruction is in keeping with federal legislation. The IDEA stipulates that teachers are to use scientifically based instruction to the extent practicable. Even in a short, five-minute lesson, this evidence-based practice can be employed.

5. Evidence supports its use across all students: students who need remedial instruction, students with mild to significant disabilities who receive special education services, and students without disabilities [30].

6. Within one session, a single learning objective, or multiple learning objectives, can be addressed.

7. Progress monitoring activities can be conducted during a session when systematic trial-based instruction is presented, or during another time of the school day.

8. This approach to instruction allows for the incorporation of numerous strategies that can address a number of the wide-ranging instructional needs of students who manifest persistent and significant learning challenges: acquisition, rate of learning, maintenance, generalization, and fluency.

9. Research has demonstrated that a wide variety of instructors can present effective instruction with this approach [31]. They include certified and non-certified personnel, students with and without disabilities, and parents.

10. Systematic trial-based instruction can be embedded within an explicit instruction lesson. Explicit instruction has proven effective in teaching students with disabilities across multiple subject matter areas, including reading, math, and writing [2].


Conclusion

Systematic trial-based instruction can be configured in many ways to provide one type of comprehensive remedial instruction. Comprehensive means that it can readily be configured to address not only students’ task acquisition, rate of learning, and skill maintenance needs, but also their response fluency and generalization of learned content, even though the latter two matters were beyond the scope of this paper. As a practical matter, perhaps what is most noteworthy about this instructional approach is how it simultaneously provides needed structure and freedom. In doing so, it exemplifies what is meant by “the science and art of teaching.”

On the one hand, it is a highly structured, evidence-based practice that allows for the incorporation of many systematic evidence-based practices. In this regard, systematic trial-based instruction captures the science of teaching. On the other hand, a teacher will have to settle upon which evidence-based practices to use to meet the presenting needs of her situation. This includes each student’s needs as well as the collective needs of the students who comprise a small group. In this regard, systematic trial-based instruction also captures the art of teaching. While it is not a panacea for all remedial instruction ills, it is an effective, efficient, flexible instructional approach that holds much promise for both the teachers and students involved with this type of instruction.


References

- Morse TE (2020) Response to intervention: Refining instruction to meet student needs. Rowman & Littlefield.

- Archer AL, Hughes CA (2011) Explicit instruction: Effective and efficient teaching. The Guilford Press.

- Shanahan T (2016) How many times should students copy the spelling words? Reading Rockets: Shanahan on Literacy.

- Edmonds RZ, Gandhi AG, Danielson L (2019) Essentials of intensive intervention. The Guilford Press.

- Gersten R, Compton D, Connor CM, Dimino J, Santoro L, et al. (2008) Assisting students struggling with reading: Response to Intervention and multi-tier intervention for reading in the primary grades. A practice guide (NCEE 2009-4045). Washington, DC: U.S. Department of Education, Institute of Education Sciences, National Center for Education Evaluation and Regional Assistance.

- Lemoine HE, Levy BA, Hutchinson A (1993) Increasing the naming speed of poor readers: Representations formed across repetitions. Journal of Experimental Child Psychology 55(3): 297-328.

- Fuchs LS, Fuchs D, Malone AS (2017) The taxonomy of intervention intensity. Teaching Exceptional Children 50(1): 35-43.

- Fuchs D, Fuchs LS (2006) Introduction to response-to-intervention: What, why, and how valid is it? Reading Research Quarterly 41(1): 93-99.

- Heward WL (2017) Exceptional children: An introduction to special education (11th edn). Pearson.

- Lemons CJ, Allor JH, Al Otaiba S, LeJeune LM (2016) 10 Research-based tips for enhancing literacy instruction for students with intellectual disability. Teaching Exceptional Children 50(4): 220-232.

- Westling DL, Fox L (2009) Teaching students with severe disabilities. Pearson.

- Albaran SA, Sandbank MP (2019) Teaching non-target information to children with disabilities: An examination of instructive feedback literature. Journal of Behavioral Education 28: 107-140.

- Werts MG, Caldwell NK, Wolery M (2003) Instructive feedback: Effects of a presentation variable. The Journal of Special Education 37(2): 124-133.

- Werts MG, Wolery M, Holcombe A, Gast DL (1995) Instructive feedback: Review of parameters and effects. Journal of Behavioral Education 5(1): 55-75.

- Gast DL, Wolery M (1988) Parallel treatments design: A nested single subject design for comparing instructional procedures. Education and Treatment of Children 11(3): 270-285.

- Cook BG, Tankersley M, Landrum TJ (2009) Determining evidence-based practices in special education. Exceptional Children 75(3): 365-383.

- Leaf R, McEachin J (1999) A work in progress: Behavior management strategies and a curriculum for intensive behavioral treatment in autism. DRL Books Inc.

- Smith T (2001) Discrete trial training in the treatment of autism. Focus on Autism and Other Developmental Disabilities 16(2): 86-92.

- Wong C, Odom SL, Hume K, Cox AW, Fettig A, et al. (2014) Evidence-based practices for children, youth, and young adults with autism spectrum disorder. Chapel Hill: The University of North Carolina, Frank Porter Graham Child Development Institute, Autism Evidence-Based Practice Review Group. J Autism Dev Disord 45(7): 1951-66

- Ghezzi PM (2007) Discrete trials teaching. Psychology in the Schools 44(7): 667-679.

- Tekin-Iftar E, Olcay-Gul S, Collins BC (2019) Descriptive analysis and meta analysis of studies investigating the effectiveness of simultaneous prompting procedure. Exceptional Children 85(3): 309-328.

- Keel MC, Gast DL (1992) Small group instruction for students with learning disabilities: Observational and incidental learning. Exceptional Children 58(4): 357-368.

- Wolery M, Jones MA, Doyle PM (1992) Teaching students with moderate to severe disabilities: Use of response prompting strategies. Longman.

- Collins BC (2012) Systematic instruction for students with moderate and severe disabilities. Paul H. Brookes.

- Odom SL, Hume K, Boyd B, Stabel A (2012) Moving beyond the intensive behavior treatment versus eclectic dichotomy: Evidence-based and individualized programs for learners with ASD. Behavior Modification 36(3): 270-297.

- Ryndak DL, Alper S (1996) Curriculum content for students with moderate and severe disabilities in inclusive settings. Allyn and Bacon.

- Collins BC, Gast DL, Jones MA, Wolery M (1991) Small group instruction: Guidelines for teachers of students with moderate to severe handicaps. Education & Training in Mental Retardation 26(1): 1-18.

- Werts MG, Hoffman EM, Darcy C (2011) Acquisition of instructive feedback: Relation to target stimulus. Education and Training in Autism and Developmental Disabilities 46(1): 134-149.

- Werts MG, Wolery M, Venn ML, Demblowski D, Doren H (1996) Effects of transition-based teaching with instructive feedback on skill acquisition by children with and without disabilities. The Journal of Educational Research 90(2): 75-86.

- Fickel KM, Schuster JW, Collins BC (1998) Teaching different tasks using different stimuli in a heterogeneous small group. Journal of Behavioral Education 8(2): 219-244.

- Ledford JR, Lane JD, Elam KL, Wolery M (2012) Using response-prompting procedures during small-group instruction: Outcomes and procedural variations. American Journal on Intellectual and Developmental Disabilities 117(5): 413-434.