Abstract

Explainable AI (XAI) is becoming a key requirement in areas where trust in intelligent systems is directly linked to security and effectiveness - especially in the management of critical infrastructure. Among the established approaches, the AI method Case-Based Reasoning (CBR) has long proven itself due to its transparency: every decision can be explained by reference to a similar case from the past. However, this strength is also a weakness. The effectiveness of CBR depends on the completeness of the case base, which in practice is often limited, static and predominantly based on explicit knowledge. Implicit knowledge - the intuition, practical skills and situational judgment of experts - remains outside the systems and is therefore easily lost.

Generative AI (GenAI) opens up new possibilities. By analyzing unstructured expert materials - from interviews, notes and seminar transcripts to audio and video recordings - it is able to uncover hidden ontologies and semantic relationships that were previously inaccessible to formalized decision support systems. These ontologies can be parameterized and integrated into CBR databases, transforming scattered experiences into new cases that expand the range of explainable scenarios. The article argues for this synergy using the example of the digitalization of water management. The cases of the Heringhausen wastewater treatment plant and the Jena sewer network show how CBR makes it possible to transform the implicit knowledge of experienced operating personnel into explainable solution models, while at the same time demonstrating the limitations of the traditional approach, in which knowledge is only partially captured. In this context, GenAI can act as a collector and structurer of tacit knowledge: it organizes previously poorly captured information and knowledge held by experienced staff, drawn from oral and written materials, into an ontology that then fills the case base. At the same time, the risks of hallucination and misinterpretation require adaptive prompts and multi-level verification: GenAI → CBR → Expert.

The combination of CBR and GenAI is therefore not just a technical improvement, but could be the beginning of a new paradigm in XAI. A transition is emerging from static explainable systems to dynamic systems that are enriched with empirical knowledge that was previously almost impossible to capture. This is particularly important for critical infrastructure sectors like water management, where the inherent process complexity of the system means a high dependency on expert knowledge.

Keywords: Explainable Artificial Intelligence; Case-Based Reasoning; Generative AI; Tacit Knowledge; Water Management; Decision Support Systems

Explainability is not enough - can CBR grow with Generative AI?

In today’s world, where critical infrastructures are increasingly made up of digitized, distributed and interdependent elements, the ability to make informed, reliable and timely decisions is crucial. In areas such as water management, energy supply or traffic, technological failures can have far-reaching consequences - from supply disruptions and economic losses to undermining public trust in government and scientific institutions. Against this backdrop, there is a growing need for artificial intelligence systems that are not only able to provide decision-making support under uncertain and complex conditions but are also able to explain the measures taken.

Explainable AI (XAI) is increasingly seen not as an additional option, but as a central element of trust in AI systems [1-3]. This is particularly relevant in complex contexts in which new employees often lack in-depth technical knowledge and yet must already take responsibility for decisions [4,5]. If new employees take over a position from a departing knowledge holder, critical decision-making situations can become problematic, and recourse to digital decision-making aids is desirable.

However, if algorithms remain “black boxes”, this leads to uncertainty and thus to a lower willingness to make decisions on the part of users. Explainability not only makes it possible to understand why a certain decision was made, but also to question, adapt and improve it. The issue of trust in AI in such circumstances is closely linked to the transparency of the reasoning logic and to the ability to understand the cause-effect relationships between the input data and the final decision and, given sufficient expertise, to adjust that decision if necessary [6-8].

Among the many approaches to explainable AI, the case-based reasoning (CBR) method occupies a special position. Its main advantage is that it draws on past experience - just as a human expert draws on similar cases from their professional practice, the CBR algorithm develops new solutions by referring to historical examples [9-11]. Such a strategy is close to human thinking and intuitively understandable for the user: it makes the system transparent and predictable and its recommendations verifiable and repeatable. For this reason, CBR is often used in critically sensitive areas - from clinical diagnosis to the management of environmental risks [4,12]. In practical applications, however, CBR also has certain limitations. It requires a formalized and sufficiently complete knowledge base that describes various cases and conditions of the task in question and the associated context. In real-world systems, especially those associated with complex and/or social dynamics, existing case databases prove to be either too narrow, incomplete or too rigidly structured to cover contexts that are difficult to formalize [13-15].

Against this background, the possibilities of generative AI based on large language models and other transformer architectures are attracting particular attention. These models have proven their ability to analyze, generalize and generate knowledge from unstructured sources such as texts, reports, notes, interview transcripts, audio and video recordings. They are able to extract meaning where traditional models require manual formalization [16-18]. In contrast to traditional expert systems, GenAI does not require a predefined ontology - it creates it spontaneously by identifying hidden structures, semantic relationships and thematic frames. This creates the potential for a massive extension of CBR databases, especially in terms of content: GenAI can serve as a source for newly identified and defined cases that are converted into a comparable and reusable form, or as a supplier of parameters that specify the context of use of existing cases [19,20]. This is particularly important in water management, where much of the knowledge is still available in semi-structured or non-formalized form and largely only as tacit knowledge from those with many years of experience [5].

However, the flexibility of GenAI is also its weakness. The nature of generative models is such that they do not always distinguish between probability and reliability: The models are prone to so-called “hallucinations” and can produce logically coherent content that does not correspond to reality, especially when queries are unclear or lack context [1,14,18]. The question of the verification of generative knowledge, its comparability with expert knowledge, trust in interpretations as well as the control of reproducibility becomes the focus of attention. In addition, the use of generative models in critically important AI systems requires a rethinking of the interaction between humans and machines: the user should not only “accept” a decision but should also be involved in the process of its formation, adaptation and processing. In this context, adaptive prompts, interfaces with semantic feedback and hybrid architectures play a special role, in which human experience is used not only as a data source, but also as a regulator for the reliability of the model [21,11].

This creates a dynamic and effective interaction between two approaches - CBR as a stable, rigorously structured system that relies on proven cases, and GenAI as a flexible, generalizing system capable of transforming knowledge and filling gaps in experience. In this work, the possibilities of integrating these approaches to build explainable and adaptive decision support systems in the field of digitalization of water management are presented. It will be shown that integration is not only technically possible, but also methodologically justified, as it combines the strength of explainability of CBR with the potential of knowledge-oriented generation of GenAI. Particular attention is paid to issues of structural verification, semantic comparability and architectural compatibility of the components. Ultimately, it is not just a matter of synthesizing two techniques, but of creating an explainable, learning and trustworthy class of AI systems.

Explainable AI: methods, limitations and perspectives

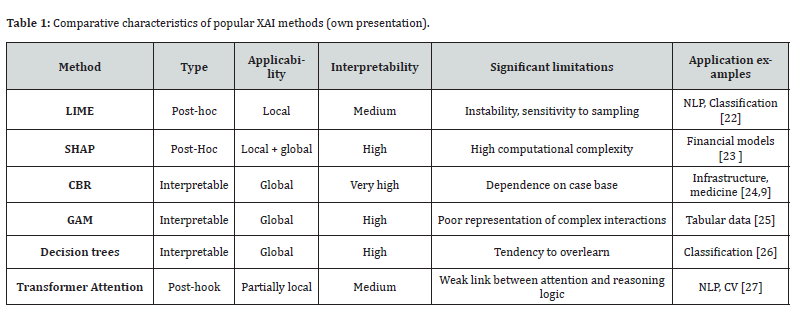

Explainability as a key area of artificial intelligence has gained particular importance over the last two decades, not only due to the increasing complexity of the models themselves, but also due to their increasing application in critical, socially sensitive areas. From water consumption prediction systems to disease diagnosis, from forensics to urban infrastructure regulation, all of these areas require not only highly accurate predictions but also an understanding of the background. As Yu et al. [17] note, there is a clear gap between advances in AI performance and the ability of users to trust the results of these systems. For this reason, explainable AI (XAI) is increasingly seen as a bridge between the computational capabilities of algorithms and the cognitive expectations of humans. Modern XAI methods can be roughly divided into two main groups: Post-hoc methods for explanation and models that are interpretable from the ground up. Table 1 lists the most popular approaches, indicating their type, degree of interpretability and characteristic limitations, which provides a better orientation regarding the applicability of each method depending on the task context.

The first category includes approaches such as LIME (Local Interpretable Model-agnostic Explanations) and SHAP (Shapley Additive Explanations), which provide a local interpretation of the predictions of “black boxes” - such as neural networks or gradient boosting [1,2]. These methods create an approximate interpretable model in the neighborhood of an observation, allowing the user to understand which features most influenced the decision in that particular case. However, despite their popularity, LIME and SHAP face the problem of instability of results, dependence on randomness of the sample, and lack of guarantees for global interpretability [23,24]. In addition, they often require specialized training for correct interpretation, and the explanations themselves may be superficial or statistically decorative [3,20].
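The attribution idea behind SHAP can be made concrete with a small example. The following is a minimal sketch, not the SHAP library itself: it computes exact Shapley values for a toy model by enumerating all feature orderings, which is only feasible for a handful of features (practical tools approximate this). The function `toy_model`, its feature names and the all-zero baseline are assumptions made purely for illustration.

```python
from itertools import permutations


def shapley_values(model, x, baseline):
    """Exact Shapley attribution of model(x) - model(baseline) to each feature.

    Features are switched from their baseline value to their observed value
    in every possible order; each feature is credited with its average
    marginal contribution over all orders.
    """
    n = len(x)
    contributions = [0.0] * n
    orders = list(permutations(range(n)))
    for order in orders:
        current = list(baseline)
        previous = model(current)
        for i in order:
            current[i] = x[i]
            value = model(current)
            contributions[i] += value - previous
            previous = value
    return [c / len(orders) for c in contributions]


# Hypothetical "black box": a toy score with an interaction term.
def toy_model(features):
    inflow, turbidity, temperature = features
    return 2.0 * inflow + 0.5 * turbidity + 0.1 * inflow * temperature


x = [3.0, 4.0, 10.0]          # observation to be explained (illustrative values)
baseline = [0.0, 0.0, 0.0]    # assumed reference point
phi = shapley_values(toy_model, x, baseline)

for name, value in zip(["inflow", "turbidity", "temperature"], phi):
    print(f"{name}: {value:.2f}")
```

The attributions always sum to the difference between the prediction and the baseline prediction, and the interaction term is split evenly between the two features involved. The result still depends on the chosen baseline, which is one source of the instability discussed above.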

The second category includes models that are designed to be interpretable from the outset. Classic examples are decision trees, logistic regression, generalized additive models (GAMs) and CBR, which, despite its relatively long history, has undergone a new development in the context of XAI [9,10]. The principle of CBR - decision making based on analogies to previous experiences - enables a natural backtracking of the conclusion that is intuitively understandable for humans. It is precisely this “anthropocentricity” that makes CBR particularly valuable for explainable systems, where not only the outcome is important, but also the confidence in this outcome based on the transparency of the reasoning [4,11,13]. However, CBR requires a well-structured knowledge base, which limits its application in dynamically changing or difficult to formalize domains.

Furthermore, a discussion of XAI is not possible without reference to the issue of trust, which has recently taken an increasingly important place on the scientific and applied agenda. Research shows that the mere presence of explanations does not guarantee an increase in trust - rather, their cognitive compatibility with the user’s expectations, experience and the context of the task is important [6,21]. Schoenborn [8] emphasizes that explainability must not only be associated with technical verifiability, but also with a sense of agency: The user must have the feeling that they are still “inside” the decision-making process and not on its periphery. This is particularly critical in infrastructure systems where decisions are made by humans based on AI recommendations - for example, when selecting measures to respond to water pollution or deviations in consumption.

A major limitation of most modern XAI approaches is their inability to process unstructured or weakly formalized knowledge - exactly what is prevalent in technical reports, notes, operational logs or meeting minutes. This creates a barrier between real-world practice and the formalized logic of the algorithm. In this context, hybrid architectures are of particular interest: they combine generative models (GenAI), which can interpret and structure informal knowledge, with structures such as CBR, which ensure transparency and comparability of decisions [14,19,20].

Thus, the modern field of XAI is in a phase of redefining its boundaries: from “explanation as retrospective commentary” to “explanation as integrated logic of system function”, and from a purely technical approach to socially oriented trust engineering. This requires both the development of new methods that can integrate the context and expectations of experts and the creation of holistic architectures that combine the flexibility of knowledge acquisition with the rigor of reproducibility. Systems focused on infrastructure solutions - especially in water management - provide a unique test bed for such approaches: they combine complexity, dynamism, the need for adaptability and a high level of social responsibility.

Case-Based Reasoning meets Generative AI - memory or imagination?

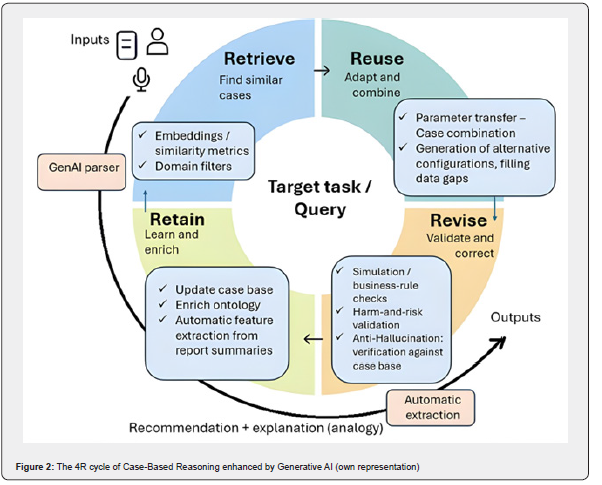

Case-based reasoning is traditionally described as the “memory” of artificial intelligence: The central idea is that new tasks can be solved by searching for and adapting similar cases from the past. From the beginning of its development, CBR was inspired by cognitive models of human reasoning: just as humans draw on their own experience or the experience of their colleagues in a new situation, the system finds relevant precedents, transfers them to a new context and stores them for future use [9,13,28]. This cycle - retrieve, reuse, revise, retain - makes explainability an integrated property of the method: each decision is accompanied by a reference to the case on which it is based, so that the user can understand its rationale and correct it if necessary. This transparency has given CBR a special status in the field of XAI: it is not an external interpretation tool, but a method originally based on explanation by experience [4,29].
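The retrieve, reuse, revise, retain cycle can be sketched in a few lines. The example below is a simplified illustration under stated assumptions, not an implementation from the cited literature: cases are described by numeric features only, similarity is the mean of range-normalized feature agreements, and the class names, feature names (`load_pe`, `temp_c`) and all values are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Case:
    problem: dict          # feature name -> numeric value
    solution: str
    source: str = "expert"


class CaseBase:
    def __init__(self, ranges):
        self.ranges = ranges   # feature name -> (min, max), used for normalization
        self.cases = []

    def similarity(self, a, b):
        """Mean per-feature agreement in [0, 1]; 1.0 means identical."""
        total = 0.0
        for name, (low, high) in self.ranges.items():
            total += 1.0 - abs(a[name] - b[name]) / (high - low)
        return total / len(self.ranges)

    def retrieve(self, query):
        """Return the most similar stored case and its similarity score."""
        best = max(self.cases, key=lambda c: self.similarity(query, c.problem))
        return best, self.similarity(query, best.problem)

    def reuse(self, case):
        """Propose the solution of the retrieved case unchanged."""
        return case.solution

    def revise(self, proposal, expert_correction=None):
        """An expert may overrule the proposal; otherwise it stands."""
        return expert_correction or proposal

    def retain(self, query, solution):
        """Store the solved problem as a new case for future retrieval."""
        self.cases.append(Case(dict(query), solution, source="retained"))


# Hypothetical case base with two seasonal operating situations.
cb = CaseBase(ranges={"load_pe": (0, 6000), "temp_c": (0, 25)})
cb.cases.append(Case({"load_pe": 5000, "temp_c": 18}, "summer high-load control profile"))
cb.cases.append(Case({"load_pe": 400, "temp_c": 5}, "winter low-load control profile"))

query = {"load_pe": 4200, "temp_c": 16}
best, score = cb.retrieve(query)
proposal = cb.reuse(best)
final = cb.revise(proposal, expert_correction=None)
cb.retain(query, final)

print(f"{final} (based on case {best.problem}, similarity {score:.2f})")
```

The printed line is the explanation: the recommendation is traced back to one concrete stored case and a similarity score that the user can inspect and contest.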

However, even a solid memory has its weaknesses. The system can only suggest a solution if relevant cases are available in its knowledge base. If such precedents do not exist or are too limited, CBR is “dumb” and cannot go beyond the accumulated experience. This disadvantage is particularly evident in domains with a high degree of uncertainty, where emergencies or previously unknown scenarios may occur. In the management of critical infrastructure such as water management, such limitations are critical: events that go beyond known patterns require not only logic, but also flexibility, heuristics and often the creative imagination of experts [12,30]. In addition, CBR databases are mainly based on explicit knowledge such as regulations, documentation, reports, structured cases, etc., which can already be formalized [31]. At the same time, tacit and implicit knowledge, professional intuition, practical skills or heuristic patterns remain outside the system, although they are often crucial under uncertain conditions and are considered an important knowledge component in generational handover.

This is precisely where Generative AI comes into play, which can be regarded as the “imagination” of AI. Unlike memory, which is limited by the framework of the case base, generative models are capable of creating new combinations of meaning, recognizing patterns in unstructured data and suggesting alternative scenarios. After appropriate training, they are able to capture and structure unformalized descriptions of experience and make them available for further use. Classical architectures such as Variational Autoencoders (VAEs), Generative Adversarial Networks (GANs) and diffusion models have proven their worth in the synthesis of images, audio and complex signals [32,33], while large language models (LLMs) have done so in the interpretation of texts, the analysis of discourse and the generation of meaningful explanations [2]. These tools are able to work with tacit/implicit knowledge by capturing it through the analysis of meeting minutes, interviews, expert notes and even video recordings. In this way, they transform the living stream of professional interpretations into formalized structures - ontologies, knowledge graphs, scenarios - that can be embedded in CBR and used as new parameters for search and adaptation [34,35].

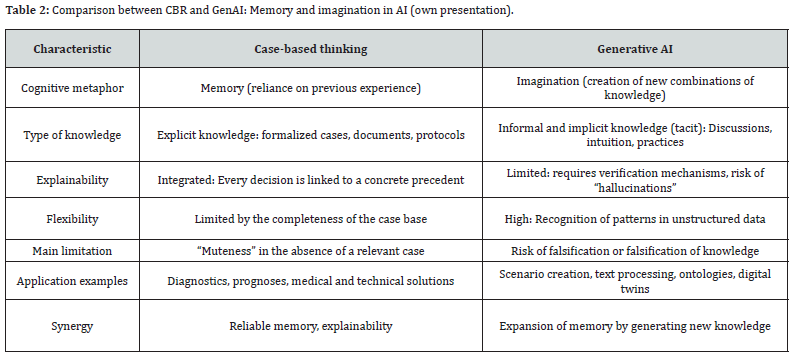

A comparative analysis of the cognitive properties of CBR and Generative AI allows them to be viewed as complementary modules - the former acting as memory, the latter as imagination. The main differences and synergy points are listed in Table 2.

As illustrated in Figure 1, Case-Based Reasoning and Generative AI can be seen as complementary cognitive systems. While CBR embodies structured and explicit memory based on case retrieval, similarity metrics, and transparent analogy-based reasoning, Generative AI represents an implicit and creative layer capable of generalization and synthesis under incomplete information. The intersection between the two highlights the hybrid zone of synergy, where hidden ontologies can be extracted, case parameters automatically derived, and generated options filtered and validated through CBR mechanisms.

Together, CBR and GenAI form a kind of “collective knowledge process” in which the accumulated experience, including implicit experience, is constantly supplemented by new meanings. However, the integration of these approaches requires caution: generative models are prone to “hallucinations”, so their freedom of generation must be controlled, verified and bounded by a framework of proven cases [36]. The balance between memory and imagination becomes a key requirement for the creation of explainable and reliable decision support systems in the context of the digital transformation of critical infrastructures.

Bridging reasoning and generation - a new hybrid framework

The development of a hybrid framework that combines Case-Based Reasoning and Generative AI is based on a combination of cognitive and technological prerequisites, where memory and imagination are not metaphors but functional elements of the architecture.

The classical CBR model, represented by the “retrieve, reuse, revise, retain” cycle, has proven its worth in explainable systems for decades, as each decision is not only output, but also provided with a reference to the specific case on which it is based [28,37]. However, the strength of CBR - its transparent reliance on experience - is also a limitation: in the absence of a relevant case, the system proves to be “dumb” or too inflexible, as it works predominantly with explicit knowledge that has already been formalized and included in the database [13]. In practice, however, it is precisely the implicit knowledge of experts, which manifests itself in intuition, improvisation and hidden patterns of action, that is crucial when working under conditions of high uncertainty, for example in critical infrastructures.

Generative AI represents a new level of possibilities in this context. Modern models - from variational autoencoders and generative adversarial networks to large language models - have shown that they are capable of recognizing patterns in weakly structured data and transforming them into meaningful structures, be it in the form of texts, ontologies or scenarios [32,34].

This allows them to work with tacit knowledge and extract it from audio interviews, notes, discussion transcripts and work journals that remained outside the database in traditional CBR. Studies on capturing tacit knowledge through generative methods confirm that such models can capture and structure what is traditionally lost in the process of experience transfer [31,35].

In the retrieve phase, integration with generative models makes it possible to overcome the limitations of classical search. Instead of extracting only from pre-formalized cases, the system can now work with interviews, reports or work logs from experts. Studies on the use of GenAI to capture tacit knowledge show that such models are able to extract key elements even from oral and poorly structured data [31]. This is an argument in favor of extending retrieve with generative mechanisms: CBR retains the search structure, while GenAI expands the range of available knowledge.

The reuse phase is also enriched by generative possibilities. In traditional CBR, it is limited to adapting the found case to a new context [37], but Generative AI makes it possible to create new combinations and form ontologies by linking past experience with new data [34]. This creates the possibility of a generative “reconsideration” of old cases, which makes the system more flexible under conditions of uncertainty [37-40].

The revise phase in the classical sense requires expert review or the use of additional rules [28]. Here, GenAI can take on the role of a “scenario simulator” by generating alternative or not yet formulated solution options, checking them for consistency and uncovering weaknesses. This approach is supported by research, for example in the field of digital infrastructure twins, where generative models are successfully used to model future scenarios [41].

In the retain phase, i.e. the storage of new experiences, Generative AI enables the automation of the parameterization process: events are translated into structured cases ready for later search and explanation. Guruge and colleagues [35] show that generative models are able to structure even weakly formalized knowledge descriptions into reusable formats. This justifies the inclusion of GenAI as an “enriching storage layer” that makes it possible to speed up and simplify the population of the CBR database. Figure 2 shows the hybrid concept combining CBR and GenAI.

However, a key argument against unrestricted integration is the risk of hallucinations and the invention of facts. Smith and Vemula [36] emphasize that LLMs can provide plausible but false explanations. The central methodological principle is therefore that generation must be constrained by adaptive prompts and checked by expert verification: prompt engineering sets the cognitive “boundary of the field” of generation and defines the limits of relevance, while the human being is the final instance that checks reliability. The case for combining these approaches is not only technical but also cognitive. CBR embodies the function of memory and ensures reproducibility and explainability, while GenAI represents the imagination that fills in the gaps, expands the interpretive space and extracts and formulates hidden knowledge. In this way, a methodological framework emerges in which memory and imagination interact in balance: CBR provides the structure, while Generative AI is built in as an enrichment mechanism that transforms individual traces of experience into collective knowledge. This symbiosis makes it possible to develop decision support systems that not only reproduce the past but also open up new horizons of understanding while maintaining user confidence in the logic of the system.
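The verification chain GenAI → CBR → Expert can be illustrated as a three-stage gate. The sketch below is one possible reading under explicit assumptions, not a prescribed architecture: the generative step is not called at all (its output is represented by plain dictionaries), the schema, thresholds and field names are hypothetical, and the expert is stubbed by a callback that would be an interactive review in practice.

```python
def validate_structure(candidate, schema):
    """Stage 1 (GenAI output check): parameters present, numeric, in range, with evidence."""
    problems = []
    for name, (low, high) in schema.items():
        value = candidate["parameters"].get(name)
        if not isinstance(value, (int, float)):
            problems.append(f"missing or non-numeric parameter: {name}")
        elif not low <= value <= high:
            problems.append(f"{name}={value} outside [{low}, {high}]")
    if not candidate.get("evidence"):
        problems.append("no source passage quoted as evidence")
    return problems


def nearest_verified_case(candidate, case_base, schema):
    """Stage 2 (CBR check): closest verified case and normalized distance to it."""
    def distance(case):
        return max(
            abs(candidate["parameters"][n] - case["parameters"][n]) / (high - low)
            for n, (low, high) in schema.items()
        )
    if not case_base:
        return None, 1.0
    nearest = min(case_base, key=distance)
    return nearest, distance(nearest)


def verify(candidate, case_base, schema, expert_review, duplicate_threshold=0.02):
    """Run all stages; only expert-approved candidates enter the case base."""
    problems = validate_structure(candidate, schema)
    if problems:
        return "rejected_structure", problems
    nearest, dist = nearest_verified_case(candidate, case_base, schema)
    if dist <= duplicate_threshold:
        return "rejected_duplicate", [f"already covered by case {nearest['id']}"]
    # Stage 3 (expert): the human sees the candidate next to its nearest verified case.
    if not expert_review(candidate, nearest, dist):
        return "rejected_by_expert", []
    case_base.append(candidate)
    return "accepted", []


# Hypothetical schema and data for illustration only.
schema = {"rain_mm_h": (0, 100), "dry_days": (0, 60)}
case_base = [{"id": "C1", "parameters": {"rain_mm_h": 40, "dry_days": 0},
              "solution": "use storage reserves", "evidence": "simulation run"}]
approve_all = lambda candidate, nearest, dist: True   # stand-in for a human reviewer

implausible = {"id": "G1", "parameters": {"rain_mm_h": 400, "dry_days": 2},
               "solution": "open all throttles", "evidence": "interview excerpt"}
duplicate = {"id": "G2", "parameters": {"rain_mm_h": 41, "dry_days": 0},
             "solution": "use storage reserves", "evidence": "meeting minutes"}
novel = {"id": "G3", "parameters": {"rain_mm_h": 0, "dry_days": 30},
         "solution": "flush sewer bed", "evidence": "operator notes"}

results = [verify(c, case_base, schema, approve_all) for c in (implausible, duplicate, novel)]
for status, reasons in results:
    print(status, reasons)
```

In this reading, the automated stages reduce the expert's workload by removing implausible and redundant candidates, but they never add a case on their own authority.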

Water infrastructure as a playground for explainable AI

Practice in recent years shows that the potential of Generative AI is particularly evident in water management. Li et al. [38] used Generative AI (in the form of GANs) to detect pollution and anomalies in distribution networks, Koochali and colleagues [39] used it to model water levels in wastewater systems, and McMillan [40] used a VAE as a generative model for self-repair tasks in water supply systems. In a broader context, Xu and Omitaomu [41] showed how generative models can reinforce digital twins of urban infrastructures by creating new scenarios and forecasts. Finally, the studies by Rothfarb [42] and Allen [43] show how LLMs and hybrid generative architectures can optimize the operation of wastewater treatment plants and predict energy consumption. All these works emphasize a key feature: generative models serve not only as analytical tools, but also as sources of new knowledge that enrich the cognitive arsenal of decision support systems.

However, the issue of explainability and integration of knowledge is most evident where Case-Based Reasoning (CBR) methods have already been applied. Water management is an area in which the experience of operators and engineers is of crucial importance but is rarely recorded in a formalized form: It exists in the form of oral discussions, notes, observations and debates that are difficult to systematize. The digitization projects in Heringhausen and Jena clearly show how CBR makes it possible to transform expert knowledge into explainable solutions, while also highlighting the limits of traditional approaches where generative AI can provide new impetus.

In the municipality of Heringhausen (North Hesse, Germany) [49], the small central wastewater treatment plant at Lake Diemelsee was confronted with strong seasonal fluctuations in tourist numbers: in summer, wastewater was produced by up to 5,000 tourists; in winter, the plant was loaded only with the wastewater of around 400 residents. The old sewage treatment plant could not handle these extreme fluctuations, either technologically or in terms of plant size, and so could not treat the wastewater sufficiently. A new wastewater treatment plant therefore had to be built that, on the one hand, could treat this extreme load range in a professional and legally compliant manner and, on the other hand, achieved a high degree of digitalization and energy efficiency, which was only possible with good modelling. Due to the limited sensor and laboratory data of the old system, complete modelling was not possible, so the developers of the new system decided to compensate for the missing data with empirical values from the staff. During the planning process, the operating staff participated in the creation of simulations by describing typical operating situations from their own experience; these descriptions were then transferred into digital form and incorporated into the simulations. The simulation output was also checked together with the staff, who could correct the input parameters if necessary. As a result, 20 “representative situations” were worked out, on the basis of which a CBR database could be created, even though insufficient measurement data was available.

During subsequent operation, new conditions were then compared with already known cases and the system suggested optimal control parameters to the staff for further decisions. This approach made it possible to make the control of the system reproducible and explainable. The data acquisition process was very time-consuming and was carried out without generative AI, as this was not yet available at the time of planning.

The other example, the sewer network project of the city of Jena, shows a different scale [50]. The main challenge there was to optimize the control of the sewer network for extreme weather conditions and to improve the operating conditions in the sewer network. More than 180 simulations were run and 13 scenarios were selected, covering a wide range of situations - from normal operation to periods of drought or extreme rainfall.

The optimization of the control systems and of the specially designed flush and throttle devices had four main objectives for dynamic sewer network management:

i. additional storage of mixed water during rainfall in the existing sewer through better utilization of previously unused storage reserves of the sewer;

ii. preventing mixed water from escaping from the full sewer during heavy rainfall in cases where the use of storage reserves could avoid this;

iii. avoiding sediments on the sewer bottom during periods of drought, which contribute to the development of foul gases that produce unpleasant odors and can damage the sewer pipes, by automatically flushing the sewer bed;

iv. better control of the mixed water volumes in the sewer in order to better distribute the inflow volumes to the sewage treatment plant and thus avoid peak loads on the plant and save energy (the sewer was used as a buffer for the sewage treatment plant).

The database of scenarios, which was likewise developed from the experience of the staff and against the background of the four objectives, formed the basis for the CBR system. The system compared current weather forecasts with the cases in the database in real time and checked which scenario came closest to the current situation while achieving the desired objectives. Based on this analysis, the system recommended measures such as predictive flushing during dry periods or the optimal use of reservoirs during heavy rainfall events. Feedback was an important component: the operating staff constantly made clarifications and corrections to the scenarios, transforming the system from a static instrument into a dynamically updated experience manual. But even here, the problem of the high workload of such a cycle remained - the constant simulations and checks required a considerable amount of personnel.
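How a forecast might be matched against stored scenarios can be sketched as follows. This is an illustrative assumption, not the Jena implementation: the three scenarios, their parameter values, the feature weights, the scaling constants and the similarity threshold are all hypothetical, and a real system would use far richer scenario descriptions.

```python
# Hypothetical scenario base; names, values and measures are illustrative only.
SCENARIOS = [
    {"name": "heavy rainfall", "rain_mm_h": 30.0, "dry_days": 0,
     "measure": "activate in-sewer storage reserves"},
    {"name": "drought", "rain_mm_h": 0.0, "dry_days": 21,
     "measure": "predictive flushing of the sewer bed"},
    {"name": "normal operation", "rain_mm_h": 2.0, "dry_days": 2,
     "measure": "keep standard throttle settings"},
]
SCALES = {"rain_mm_h": 50.0, "dry_days": 30.0}   # assumed normalization constants
WEIGHTS = {"rain_mm_h": 0.6, "dry_days": 0.4}    # assumed feature importance


def similarity(forecast, scenario):
    """Weighted similarity in [0, 1] between a forecast and a stored scenario."""
    score = 0.0
    for feature, weight in WEIGHTS.items():
        gap = min(abs(forecast[feature] - scenario[feature]) / SCALES[feature], 1.0)
        score += weight * (1.0 - gap)
    return score


def recommend(forecast, scenarios=SCENARIOS, min_similarity=0.7):
    """Return (measure, explanation); measure is None if no scenario is close enough."""
    best = max(scenarios, key=lambda s: similarity(forecast, s))
    score = similarity(forecast, best)
    if score < min_similarity:
        return None, (f"No comparable scenario (best match '{best['name']}' "
                      f"at {score:.2f}); refer to operating staff.")
    return best["measure"], (f"Recommended because the forecast resembles "
                             f"scenario '{best['name']}' (similarity {score:.2f}).")


measure, explanation = recommend({"rain_mm_h": 25.0, "dry_days": 0})
print(measure)
print(explanation)
```

The threshold makes the limitation of the approach explicit: when no stored scenario is sufficiently similar, the sketch returns no measure and hands the decision back to the staff instead of forcing a weak analogy.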

These two examples show both the potential of CBR for storing and explaining individual (and tacit) staff experience and its limitations: much of the knowledge exists in the form of “living traces” - oral discussions, engineers’ notes, meeting minutes - and is rarely formalized. As a result, the case base either remains limited, as in Heringhausen, or requires considerable resources for constant updating, as in Jena. This is precisely where Generative AI can play a key role: by analyzing various materials - from seminar transcripts to audio and video recordings - it identifies recurring patterns and converts them into ontologies. These ontologies can be parameterized and integrated into a CBR database, which in turn ensures the explainability and reproducibility of solutions: “We act in this way because this scenario was chosen in a similar case with similar parameters”. In practice, the combination of generative AI and Case-Based Reasoning forms the basis for a new generation of technical decision support systems in which the expertise of specialists is not only stored but also expanded and is thus available for reviews, explanations and use in critical situations.

Synergy or confusion - what happens when CBR and GenAI work together?

The combination of CBR and GenAI is not just a technical experiment, but an attempt to develop a new methodology for explainable decision support systems, in particular one that brings implicit knowledge to the fore. On the one hand, CBR has proven over a long period its ability to structure experiences and provide explanations based on previous cases. On the other hand, modern generative models, especially large language models (LLMs), open up access to new sources of knowledge and forms of generalization that were previously unavailable to traditional approaches. Their synergy promises considerable advantages, but at the same time raises questions regarding trust and methodological rigor.

The strengths of the hybrid approach include, above all, the automation of the collection of expert knowledge, which also represents an effective form of knowledge management. In contrast to classical CBR, where populating the case base requires tedious manual structuring, GenAI is able to analyze large amounts of texts, protocols and even oral recordings and extract potentially useful elements for the creation of new cases. Das and colleagues [44] have shown that neurosymbolic CBR architectures can benefit significantly from integration with generative mechanisms that fill knowledge gaps and allow new logical forms to be constructed even when there are no direct equivalents in the database. Watson [45] develops this idea further, claiming that CBR in particular can take on the role of a “persistent memory” for LLMs by compensating for their inability to store and systematize experience over the long term.

Another advantage is the ability to recognize hidden ontologies and patterns. In contrast to classical retrieval-oriented models, which are limited to searching for superficial matches, GenAI can integrate semantic connections and reconstruct cause-effect chains. The work of Guo et al. [20] in the area of software testing demonstrates how incorporating CBR into the cycle of generative models not only speeds up the solution of the task, but also improves the structure of the generated solutions, especially when using retrieval/reuse optimization methods. This supports a more general conclusion described by Hatalis and colleagues [47]: Cognitive dimensions of CBR such as self-reflection and metacognition can significantly enrich the architecture of LLM agents, transforming them from “talking models” to truly thinking systems.

However, the risks here are no less significant. Firstly, generative models are known to be prone to “hallucinations” - i.e. the creation of plausible but false explanations [26]. In the context of critical infrastructure, this can have catastrophic consequences: If the system proposes a scenario that seems convincing but has no empirical basis, trust in technology will quickly be lost. Secondly, there is a risk that experts will be inundated with garbage data. Generative models tend to produce an excessive number of variants, which without filtering and prioritization can complicate the verification process. Wilkerson [46] emphasizes that explanations based on cases are perceived by users as more reliable than explanations based on abstract rules. However, the quality of such explanations can decline sharply, especially with “noisy” input.

Multi-level checks and adaptive prompts are a key element of the solution. Practice has shown that architectures that combine retrieval and generation achieve the best results when the generation is controlled and managed by the storage system. In this context, CBR acts as a filter and stabilizer, ensuring that only tested scenarios that are comparable to previous experience are included in the final set. GenAI, in turn, acts as a generator of new interpretations, for instance of implicit knowledge and structures, which are then subjected to a review and fixation process. Such a hybrid circuit (GenAI → CBR → Expert) forms a trustworthy foundation where the creativity of machine imagination is balanced by the rigor of memory and human expertise.

This study is in the same vein as recent work on the integration of Case-Based Reasoning and Generative AI, although the focus differs considerably. For example, Hatalis and colleagues [47] propose to consider CBR as a “memory framework” for an LLM agent: The system stores and structures past cases, can retrieve and adapt them, and the cognitive control cycle is built through mechanisms of goal-directed autonomy. Here, CBR acts as a structured knowledge repository, while the LLM implements the “brain” that is able to adapt and set goals.

Guo et al. [20] show a different way by focusing on the applied optimization of CBR in conjunction with LLM. In their test script generation scenario, CBR is used for the classical 4R cycle (retrieve, reuse, revise, retain), while generative models reinforce the phases of adaptation and revision. The authors show that the application of reranking and reinforced reuse methods can reduce the number of hallucinations and increase the reliability of the solutions.
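For readers less familiar with the 4R cycle, the following minimal sketch walks through the four phases once. It is not the system of Guo et al.: the `reviser` callback is only a placeholder for the revision step (which in their work is supported by generative models), and the feature `load` and the aeration measures are invented for illustration.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class StoredCase:
    problem: dict[str, float]
    solution: str


@dataclass
class CaseBase:
    cases: list[StoredCase] = field(default_factory=list)

    def retrieve(self, problem: dict[str, float]) -> StoredCase:
        """Retrieve: find the stored case with the smallest feature distance."""
        def distance(case: StoredCase) -> float:
            return sum(abs(v - case.problem.get(k, 0.0)) for k, v in problem.items())
        return min(self.cases, key=distance)  # raises ValueError on an empty case base

    def reuse(self, retrieved: StoredCase) -> str:
        """Reuse: take over the solution of the retrieved case as a proposal."""
        return retrieved.solution

    def revise(self, proposed: str, reviser: Callable[[str], str]) -> str:
        """Revise: let a reviewer (expert or model, here a callback) adapt the proposal."""
        return reviser(proposed)

    def retain(self, problem: dict[str, float], solution: str) -> None:
        """Retain: store the confirmed solution as a new case."""
        self.cases.append(StoredCase(problem, solution))

    def solve(self, problem: dict[str, float], reviser: Callable[[str], str]) -> str:
        proposed = self.reuse(self.retrieve(problem))
        confirmed = self.revise(proposed, reviser)
        self.retain(problem, confirmed)
        return confirmed


# Hypothetical example data.
base = CaseBase([
    StoredCase({"load": 0.2}, "reduce aeration"),
    StoredCase({"load": 0.9}, "increase aeration"),
])
result = base.solve({"load": 0.8}, reviser=lambda s: s + " (confirmed by operator)")
print(result, len(base.cases))
```

Because every solved problem is retained, the case base grows with use; this is the point at which unverified content would accumulate if the revise step were skipped.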

The approach proposed in this study overlaps with these and other studies [48] in recognizing the key role of CBR as a stabilizing mechanism for generative models. However, the focus in this paper is placed on a different aspect - the extraction and formalization of tacit knowledge in the water management domain. Here, Generative AI plays the role of an “interceptor of living knowledge” by analyzing streaming material (audio and video recordings of meetings, transcripts of seminars, engineers’ work notes) and identifying recurring patterns and ontologies. These ontologies are then parameterized and become new cases for the CBR database. In such a framework, GenAI provides access to unstructured and implicit knowledge, while CBR transforms it into explainable and verifiable technical scenarios.

Thus, while Hatalis and colleagues [47] focus on the internal architecture of the LLM agent and Guo and colleagues [20] focus on optimizing the adaptation and generation of solutions, this paper addresses the framework for creating an input and verification system for the hybrid model. This control loop is designed to address the specifics of water management, where expert knowledge often exists only in a fragmented form that is difficult to formalize, and the cost of errors is too high to rely solely on automated methods. By integrating Generative AI into the process of knowledge acquisition and formalization and then transferring this knowledge into CBR, artificial intelligence becomes even more comprehensible: each decision is not only based on previous experience but also contains a rationale of how exactly this experience was created and structured. The result is a system in which memory and imagination work together to ensure trust, transparency and reliability in the management of critical infrastructure. It is precisely this synthesis that paves the way for new generations of decision support systems in which trust and efficiency are no longer mutually exclusive goals.
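The control loop GenAI → CBR → Expert can be sketched as a two-stage gate. This is a simplified illustration under stated assumptions, not the authors' implementation: the generative step is represented only by its output (a list of candidate cases), the closeness measure and the threshold of 0.7 are hypothetical, and the expert is modelled as a callback.

```python
from typing import Callable

Features = dict[str, float]  # assumed to be normalized to the range [0, 1]


def closeness(a: Features, b: Features) -> float:
    """Mean closeness over shared features; 0.0 if nothing is comparable."""
    keys = a.keys() & b.keys()
    if not keys:
        return 0.0
    return 1.0 - sum(abs(a[k] - b[k]) for k in keys) / len(keys)


def cbr_filter(candidates: list[Features], verified: list[Features],
               threshold: float = 0.7) -> tuple[list[Features], list[Features]]:
    """Stage 1: keep only candidates comparable to at least one verified case."""
    passed, rejected = [], []
    for cand in candidates:
        support = max((closeness(cand, v) for v in verified), default=0.0)
        (passed if support >= threshold else rejected).append(cand)
    return passed, rejected


def hybrid_loop(candidates: list[Features], verified: list[Features],
                expert_approves: Callable[[Features], bool]) -> list[Features]:
    """Stage 2: only expert-approved candidates extend the case base."""
    passed, _ = cbr_filter(candidates, verified)
    return verified + [c for c in passed if expert_approves(c)]


# Hypothetical data: one verified case, two GenAI-generated candidates.
verified_cases = [{"rain": 0.9, "fill": 0.7}]
generated = [{"rain": 0.8, "fill": 0.6}, {"rain": 0.1, "fill": 0.1}]

passed, rejected = cbr_filter(generated, verified_cases)
updated = hybrid_loop(generated, verified_cases, expert_approves=lambda c: True)
print(len(passed), len(rejected), len(updated))
```

A strict filter of this kind would also reject genuinely new scenarios that resemble no stored case; in practice such candidates would more plausibly be routed to the experts with a flag than discarded.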

Challenges, research gaps, and the role of expert co-creation in hybrid AI systems

The synergy discussed above between the structured “memory” of case-based reasoning (CBR) and the creative “imagination” of generative AI (GenAI) points to an adapted form of explainable AI (XAI) systems. Such technical integration, as promising as it may be, is not the final result, but rather the starting point for a series of new, complex challenges that require a corresponding research agenda. Initial findings on technical feasibility make it clear that the actual hurdles to the use of such hybrid systems in critical infrastructures are less algorithmic and more socio-technical in nature. The central thesis here is that the successful combination of CBR and GenAI cannot be achieved solely by optimizing interfaces and data flows. Rather, a “socio-technical gap” emerges: a divide between the automated, potentially error-prone generation of implicit knowledge by GenAI and the human processes of validation, trust building, and accountability. Research on the risks of large language models (LLMs), such as hallucinations, bias, and the disclosure of sensitive data, underscores the absolute necessity of human oversight and verification. The success of hybrid CBR-GenAI systems will therefore not be measured solely by their generative performance, but by the quality and robustness of the human-machine collaboration they enable [51].

The desired goals of a final hybrid model must be reviewed through a critical analysis of four key problem areas and adjusted as necessary. These challenges relate to knowledge acquisition, the reliability of the generated content, system transparency, and long-term maintainability. The classic “knowledge acquisition bottleneck” of CBR describes the difficult and labor-intensive process of collecting, formalizing, and entering expert knowledge into the case base [52]. At first glance, GenAI appears to solve this problem by enabling the automatic extraction of knowledge from unstructured sources such as interviews, protocols, or technical reports. However, this automation leads to a new, even more insidious challenge: the risk of filling the case base with an unprecedented amount of low-quality, distorted, or simply false information. GenAI’s inherent tendency to produce falsehoods or inaccuracies transforms the original problem of scarcity of formalized (implicit) knowledge into a potential flood of unconfirmed knowledge. The bottleneck is therefore not eliminated, but merely shifted “downstream”.

The knowledge acquisition bottleneck is thus replaced by a “knowledge validation bottleneck.” The new critical hurdle is the limited capacity of human experts to review and validate the vast number of cases and ontologies that GenAI can produce. Research must therefore shift its focus away from the generation process alone and instead develop methods and tools that increase the efficiency and accuracy of expert validation itself. Studies by the authors on digitization in water management suggest that hybrid expertise is necessary for this research path: in addition to experts in knowledge generation and AI, application experts are required who ideally also have sufficient know-how in the other fields [53].

In the context of critical infrastructures such as water management, AI-generated hallucinations can have catastrophic consequences. A scenario invented by AI not only undermines confidence in an infrastructure system but also poses a real danger. This makes expert validation even more necessary, as is the use of specified and tested AI agents. In the worst case, hallucinations can also jeopardize the inherent transparency of the CBR system if the transparent content is called into question due to the origin of the data.

Effective scalability must also be achieved in further developments. If standard LLM systems are used, whose data sets are immense and therefore increasingly opaque, scalability will prove to be a major hurdle both within a critical infrastructure and when transferring results to neighboring infrastructures. From this perspective, too, the focus should be on specialized AI elements. Promising in this context is the development of so-called SLMs (small language models), which, compared to LLMs (large language models), offer greater efficiency and adaptability across domains and, in particular, demonstrate the potential to perform specialized tasks with minimal computing effort [54]. At the same time, care must be taken to ensure that the implicit expertise captured is not corrupted by generally available data from general-purpose LLMs. It is therefore not enough to involve the experts in the information gathering process; they must also be involved in the evaluation of the results.

To truly mitigate the risks of GenAI, reactive review is not enough. The involvement of experts must be proactive and deeply integrated into the design and operation of the system, rather than just being a retrospective control step. The traditional human-in-the-loop (HITL) approach often implies a supervisory role in which a human validates or corrects the machine-generated output after the fact. This is a reactive stance that is insufficient for critical systems. In a safety-critical system, waiting for a generated hallucination to occur and then intercepting it is inherently risky. A more robust approach is to prevent hallucinations from occurring in the first place [55]. This requires shifting expert involvement “upstream” into the process of knowledge creation and system design. The expert is no longer just a reviewer of results, but a co-designer of the knowledge base and system logic. This shift transforms HITL from a technical safeguard to a collaborative, participatory methodology. The focus shifts from simply “verifying AI content” to “jointly creating a shared knowledge model.” This requires new, structured formats for human-AI collaboration that go beyond simple review workflows [56].

This approach, in turn, carries another risk, namely the availability of the experts to be involved. Therefore, such a new approach should not be too ambitious from the outset, and it should also be linked as closely as possible to the everyday work of the selected experts. To this end, the authors have developed the so-called “Anyway Strategy” method, which allows innovations to be integrated into everyday work with relatively little time and effort in such a way that these innovations have a successful leverage effect but do not have too much of a negative impact on everyday work. This is one promising way to ensure that the selected experts remain active and highly motivated over a longer period of time [57].

From proof of concept to paradigm shift - what’s next for explainable AI

At present, there are only initial pilot tests that show that the combination of case-based reasoning and generative AI can compensate for the weaknesses of each individual approach and open up new horizons in the field of explainable artificial intelligence.

However, the future of this approach lies less in individual proofs of concept and more in the development of holistic hybrid architectures in which both methods are integrated into a uniform knowledge system. Even if local characteristics play a special role in the water industry, as in other critical infrastructures (energy, telecommunications, transport etc.), there are many commonalities of implicit knowledge across all local solutions that allow the transfer of individual results and thus speak in favor of the development of hybrid architectures.

Such an architecture can be described as a multi-stage cycle in which generative AI assumes the function of dynamically extracting and systematizing expert knowledge from various sources - texts, audio and video materials, transcripts of workshops and meetings. This data is not simply translated into a text format but forms the basis for the automatic creation and constant updating of ontologies that reflect important contexts: type of situation, conditions of occurrence, actions taken by professionals and their outcomes. These ontologies are then forwarded to the CBR cycle, which not only ensures the reproducibility and utilization of the experience gained, but also the explainability of each decision by referring to a specific case and its parameters.
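How such an ontology entry might be parameterized into a CBR case can be sketched as follows. The four content fields mirror the contexts named above; everything else is an assumption made for illustration, including the field names, the `source` provenance field, the `verified` flag and the example values.

```python
import json
from dataclasses import dataclass


@dataclass(frozen=True)
class ExtractedExperience:
    """One ontology entry extracted from expert material (hypothetical schema)."""
    situation_type: str
    conditions: dict[str, float]
    actions: tuple[str, ...]
    outcome: str
    source: str  # provenance, e.g. an identifier of the transcript or recording


def to_case(exp: ExtractedExperience) -> dict:
    """Map an ontology entry onto a problem/solution/outcome case structure."""
    return {
        "problem": {
            "situation_type": exp.situation_type,
            "conditions": dict(exp.conditions),
        },
        "solution": list(exp.actions),
        "outcome": exp.outcome,
        "provenance": exp.source,
        "verified": False,  # stays False until an expert has confirmed the case
    }


# Invented example entry.
experience = ExtractedExperience(
    situation_type="dry_period",
    conditions={"dry_days": 21.0},
    actions=("predictive_flushing",),
    outcome="no deposits reported",
    source="workshop-transcript-07",
)
case = to_case(experience)
print(json.dumps(case, indent=2))
```

Keeping the provenance with each case is what allows an explanation to state not only which precedent was used, but also where that precedent came from.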

Risk mitigation mechanisms are of particular importance. Experience from experiments already conducted shows that generative models without adaptive prompts, validation and multi-stage verification are prone to hallucinations and false generalizations. Therefore, future research should focus on the development of reliable filters and verification procedures: from the preliminary restriction of the generation field to prompt engineering and the mandatory involvement of experts in the feedback cycle. Such a verification cycle not only reduces the probability of errors, but also makes the system a trustworthy tool, where automatic generation is balanced by verified memories and human judgment.
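One simple form of restricting the generation field is to check every generated candidate against a controlled vocabulary and plausible parameter ranges before it reaches the case base or an expert. The sketch below assumes a hypothetical candidate format; the vocabularies, parameter names and ranges are invented and would have to be defined together with the domain experts.

```python
# Hypothetical controlled vocabulary and parameter ranges.
ALLOWED_SITUATIONS = {"dry_period", "heavy_rainfall"}
ALLOWED_ACTIONS = {"predictive_flushing", "use_reservoir"}
PARAMETER_RANGES = {"rain_mm_24h": (0.0, 300.0), "dry_days": (0.0, 120.0)}


def check_candidate(candidate: dict) -> list[str]:
    """Return a list of problems; an empty list means the candidate may proceed."""
    problems = []
    if candidate.get("situation_type") not in ALLOWED_SITUATIONS:
        problems.append("unknown situation type")
    for action in candidate.get("actions", []):
        if action not in ALLOWED_ACTIONS:
            problems.append(f"unknown action: {action}")
    for name, value in candidate.get("conditions", {}).items():
        bounds = PARAMETER_RANGES.get(name)
        if bounds is None:
            problems.append(f"unknown parameter: {name}")
        elif not bounds[0] <= value <= bounds[1]:
            problems.append(f"value out of range: {name}={value}")
    if not candidate.get("source"):
        problems.append("missing source reference")
    return problems


# Invented examples: one plausible candidate, one with several defects.
plausible = {
    "situation_type": "heavy_rainfall",
    "conditions": {"rain_mm_24h": 80.0},
    "actions": ["use_reservoir"],
    "source": "meeting-notes-12",
}
implausible = {
    "situation_type": "heavy_rainfall",
    "conditions": {"rain_mm_24h": 900.0},
    "actions": ["open_all_valves"],
}
print(check_candidate(plausible))
print(check_candidate(implausible))
```

Checks of this kind catch only formal defects. A candidate that passes can still be wrong in substance, which is why the subsequent CBR comparison and the expert review remain necessary.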

In a broader sense, one can speak of the emergence of a new paradigm in the field of XAI. Traditionally, explainability has been associated with static knowledge bases and fixed cases, which provided transparency by referring to past experience but did not cope well with the dynamics of real systems. In the proposed framework, there is a transition from static to dynamic knowledge: Memory is supplemented and refined in constant interaction with new data streams, and explanations are no longer just a reference to the past, but the result of its meaningful integration into the present. The aim is therefore to create new-generation decision support systems in which GenAI and CBR work together like imagination and memory and explainability is no longer a byproduct but becomes the central principle of the architecture.

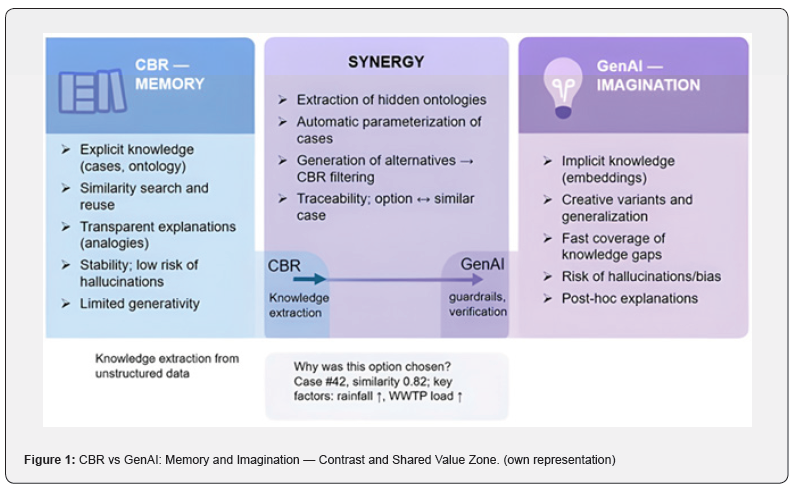

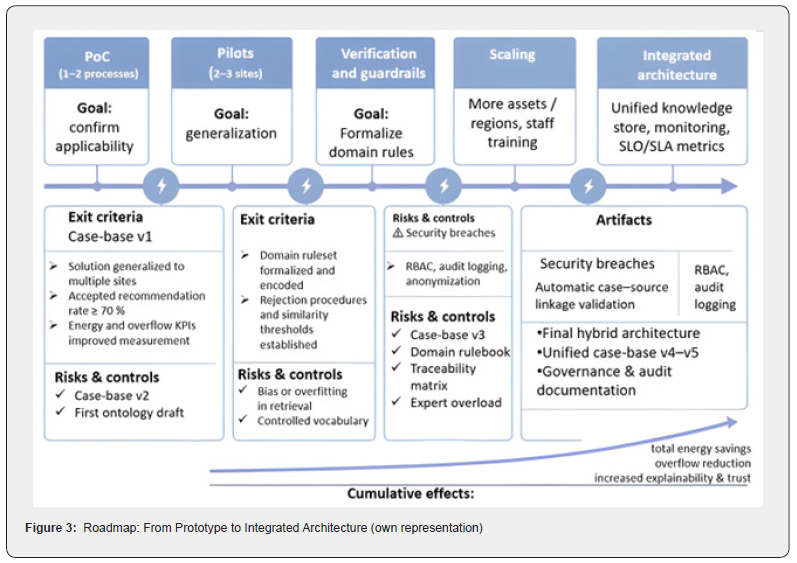

The progression from conceptual framework to operational deployment can be summarized in a structured roadmap, as illustrated in Figure 3. It outlines the gradual evolution from proof-of-concept prototypes to pilot implementations, followed by verification, scaling, and final architectural integration. Each phase defines specific goals, exit criteria, and control mechanisms, ensuring that the transition from experimental hybrid systems to mature, explainable infrastructures remains traceable, safe, and verifiable.

The structure presented can be seen as a step-by-step transition from ideas to practical implementation. In the first phase, prototypes of hybrid systems will be created in which Generative AI processes multimodal expert data and builds ontologies to populate the CBR database. These prototypes are then tested in pilot scenarios, for example on specific water management objects, where the efficiency and weaknesses of the approach are determined. The third phase involves the development of reliable verification mechanisms - adaptive prompts, filtering via CBR and expert feedback. After that, it will be possible to scale the solution to related critical systems such as energy or transportation. The final step is the development of an integrated architecture in which Generative AI and CBR form a unified cognitive system in which memory and imagination work together and explainability becomes the basic principle of operation. In addition, methods need to be improved in order to encourage older knowledge carriers in particular to reveal their implicit knowledge and actively participate in audio-visual recording.

Conclusion: CBR plus Generative AI - towards a new generation of decision support

At first glance, case-based reasoning and generative models appear to be two different worlds: The first embodies a stable, structured and explainable memory, the second the flexibility of imagination, the ability to process unstructured data and uncover hidden regularities. But it is precisely their combination that opens up access to implicit knowledge as the basis for a new kind of decision support system. Case-based reasoning remains the framework of explainability: every decision can be linked to a concrete precedent, and the logic of the conclusion can be checked and reproduced. Generative AI, in turn, becomes the engine of extensibility: it is capable of extracting knowledge from streaming and heterogeneous materials - texts, interviews, seminar transcripts, audio and video recordings - and converting it into structured ontologies that fill and update the case base.

This synergy makes it possible to overcome the limitations of both approaches: the static and incomplete nature of traditional CBR systems and the tendency of generative models to hallucinate and make false generalizations. In addition, the combination allows effective access to implicit and tacit knowledge in critical infrastructure sectors like water management. An important prerequisite for this is a multi-level verification mechanism: adaptive prompts restrict the uncontrolled generation field, CBR filters and stabilizes the knowledge based on previous experience, and experts perform the final verification. The result is a hybrid architecture in which memory and imagination work together to create a system that can not only make decisions but also explain why those decisions are justified and reproducible.

Thus, the combination of CBR and Generative AI marks the transition from static, explainable systems to dynamic systems that are constantly enriched with previously undisclosed expertise and knowledge from the real world. This is not just an evolution of XAI in the traditional sense, but the emergence of a new paradigm in which explainability is no longer a limitation but becomes a strategic resource for trust and efficiency. And if today we are talking about pilot tests and initial prototypes, tomorrow it is precisely such hybrid systems that can form the basis of an intelligent infrastructure capable of working under uncertainty and preserving what is most important - the explainability of decisions.

References

- Mersha M, Lam K, Wood J, AlShami A, Kalita J (2025) Explainable Artificial Intelligence: A Survey of Needs, Techniques, Applications, and Future Direction. University of Colorado. Preprint.

- Yang W, Wei Y, Wei H, Chen Y, Huang G, et al. (2023) Survey on Explainable AI: From Approaches, Limitations and Applications Aspects. In: Human-Centric Intelligent Systems 3: 161-188.

- Maußner C, Oberascher M, Autengruber A, Kahl A, Sitzenfrei R, et al. (2025) Explainable Artificial Intelligence for Reliable Water Demand Forecasting to Increase Trust in Predictions. Water Research 268: 122779.

- Cerutti J, Abi-Zeid I, Lamontagne L, Lavoie R, J Rodriguez-Pinzon M (2023) A case-based reasoning tool for recommending measures to protect drinking water sources. In: Journal of Environmental Management 331: 117228.

- Infant S, Vickram S, Saravanan A, Mathan Muthu C M, Yuarajan D (2025) Explainable Artificial Intelligence for Sustainable Urban Water Systems Engineering. Results in Engineering, 25: 104349.

- Afroogh S, Akbari A, Malone E, Kargar M & Alambeigi H (2024) Trust in AI: Progress, Challenges, and Future Directions. Humanities and Social Sciences Communications.

- Jung M, von Garrel J (2021) Mitarbeiterfreundliche Implementierung von KI-Systemen im Hinblick auf Akzeptanz und Vertrauen. In: TATuP 30(3): 37-43.

- Schoenborn D (2017) Explainable Agency in Artificial Intelligence. In: Workshop on Explainable AI, ECAI.

- Watson I (2000) Case-Based Reasoning is a Methodology not a Technology. In: Expert Update 3(3): 22-26.

- Oliveira EM, Reale RF, Martins JSB (2020) A Methodological Approach to Model CBR-based Systems. Journal of Computer and Communications 8(9): 1-16.

- Schultheis J, Zeyen C, Bergmann R (2023) Erläuterung von Empfehlungssystemen mit generativem CBR und lokalen modellunabhängigen Erklä In: Proceedings of the 31st International Conference on Case-Based Reasoning (ICCBR).

- Mounce SR, Mounce RB, Boxall JB (2015) Case-Based Reasoning to Support Decision Making for Managing Drinking Water Quality Events in Distribution Systems. Urban Water Journal 13(6): 727-738.

- Leake D, Wilkerson Z, Crandall D (2024) Combining Case-Based Reasoning with Deep Learning. In: AAAI 2024. preprint

- Rajarshi Das, Ameya Godbole, Nicholas Monath, Manzil Zaheer, Andrew McCallum (2020) Probabilistic Case-Based Reasoning for Open-World Knowledge Graph Completion. arXiv.

- Li W, Paraschiv F, Sermpinis G (2021) A data-driven explainable case-based reasoning approach for financial risk detection. In: arXiv: 2107.08808

- Rajarshi Das, Ameya Godbole, Nicholas Monath, Manzil Zaheer, Andrew McCallum (2020) Probabilistic Case-Based Reasoning for Open-World Knowledge Graph Completion. In: arXiv: 2010.03548v2.

- Yu H, et al. (2023) Überbrückung der Kluft zwischen Erklärbarkeit und Leistung in der KI. In: arXiv:2301.05718

- Das A, Rad P (2020) Opportunities and Challenges in Explainable Artificial Intelligence (XAI): A Survey. IEEE. arXiv:2006.11371.

- Marom O (2025) A general retrieval-augmented generation framework for multimodal case-based reasoning applications.

- Guo S, Liu H, Chen X, Xie Y, Zhang L, et al. (2025) Optimizing case-based reasoning system for functional test script generation with large language models. Proceedings of the 31st ACM SIGKDD Conference on Knowledge Discovery and Data Mining (KDD '25).

- Vemula, A. et al. (2022) Erklärung in der KI: Erkenntnisse aus den Sozialwissenschaften. In: Proceedings of the AAAI/ACM Conference on AI, Ethics and Society.

- Ribeiro MT, Singh S & Guestrin C (2016) "Why should I trust you?": Explaining the predictions of any classifier. Proceedings of the 22nd ACM SIGKDD Conference on Knowledge Discovery and Data Mining, pp. 1135-1144.

- Lundberg SM & Lee SI (2017) A unified approach to interpreting model predictions. Advances in neural information processing systems 30: 4765-4774.

- Watson I (2000) Case-based reasoning is a methodology, not a technology. Expert Update 3(3): 22-26.

- Hastie T & Tibshirani R (1990) Generalized additive models. London: Chapman & Hall/CRC.

- Mienye, Ibomoiye & Jere, Nobert (2024) A review of decision trees: concepts, algorithms and applications. IEEE Access. p. 1-1.

- Jain S & Wallace BC (2019) Attention is not an explanation. arXiv preprint.

- Aamodt A & Plaza E (1994) Case-based reasoning: Basic issues, methodological variations, and systems approaches. AI Communications 7(1): 39-59.

- Homem TPD, Santos PE, Costa AHR, Bianchi RADC & Lopez de Mantaras R (2020) Qualitative case-based reasoning and learning. Artificial Intelligence 283: 103258.

- Pascual-Pañach J & Onaindia E (2020) Ontology-based extension of the case base for planning applications. Knowledge-Based Systems 191: 105221.

- Zaoui Seghroucheni S, et al. (2024) Capturing tacit knowledge through generative AI: Methods and challenges. Knowledge management research and practice, preprint.

- Goodfellow I, Pouget-Abadie J, Mirza M, Bing Xu, David Warde-Farley, et al. (2014) Generative adversarial nets. Advances in Neural Information Processing Systems.

- Kingma DP & Welling M (2013) Auto-encoding variational Bayes. arXiv preprint.

- Papageorgiou DJ (2025) Knowledge graphs and generative AI in industrial process management. Journal of Industrial Information Integration 37: 100603.

- Guruge D (2024) Generative AI for knowledge management: Unlocking hidden expertise. Knowledge Management & E-Learning 16(2): 123-142.

- Smith B & Vemula A (2022) Hallucinations in large language models: Causes and remedies. arXiv preprint.

- Kolodner JL (1992) An introduction to case-based reasoning. Artif Intell Rev 6: 3-34.

- Li W, et al. (2023) GAN-based anomaly detection in water supply networks. Expert Systems with Applications 214: 119091.

- Koochali, A., et al. (2024) Deep generative models for urban drainage prediction. Water Science & Technology 89(2): 356-369.

- McMillan M (2024) Variational autoencoders for resilient water distribution systems. Procedia CIRP 118: 762-769.

- Xu C & Omitaomu O (2024) Generative AI for digital twins in urban water systems. Environmental Modeling & Software 169: 105743.

- Rothfarb J, et al. (2025) Multi-agent generative AI for wastewater treatment optimization. Water Research 232: 120999.

- Allen J, et al. (2025) Knowledge-based GNNs for energy forecasting in wastewater treatment plants. Applied Energy 374: 122145.

- Das R, Zaheer M, Thai D, Godbole A, Perez E, et al. (2021) Case-based reasoning for natural language queries over knowledge bases. arXiv:2104.08762.

- Watson I (2024) A case-based persistent store for a large language model. In ICCBR 2024 workshop on case-based reasoning and synergies of large language models. Mérida, Mexico.

- Wilkerson K (2024) LLM reliability and CBR: How case-based reasoning can improve large language model performance. In ICCBR 2024 Doctoral Consortium Proceedings. Mérida, Mexico.

- Hatalis K, Christou D, Kondapalli V (2025) Case-based reasoning for large language model agents: Theoretical foundations, architectural components and cognitive integration. Preprint.

- Hatalis K, Christou D, Kondapalli V (2025) Case-based reasoning for large language model agents: Theoretical foundations, architectural components and cognitive integration. arXiv: 2504.06943.

- Müller-Czygan G, Wiese J, Tarasyuk V & Tschepetzki R (2022) Energieeinsparung auf Kläranlagen mit fallbasierter Steuerung (Cased Based Reasoning). In: Daniel Demmler DK, INFORMATIK 2022 - Computer Science in the Natural Sciences.

- Oeltze H, Simancas Suárez J, & Wiese J (2025) Effizienter Kanalbetrieb mit Einsatz von KI. Wasser und Abfall, pp. 20-24.

- Lozano-Paredes L (2025) Mapping AI’s role in NSW governance: a socio-technical analysis of GenAI integration. Frontiers in Political Science.

- Watson I, Marir F (1994) Case-based reasoning: A review. The knowledge engineering review 9(4): 327-354.

- Müller-Czygan G (2018) Empirical study on the significance of learning transfer and implementation factors in the digitization project "KOMMUNAL 4.0" with special attention to the interactions of technology and change management. Münster: FOM University of Applied Sciences for Economics and Management (thesis).

- Lu Z, Li X, Cai D, Yi R, Liu F, et al. (2024) Small language models: Survey, measurements, and insights. arXiv preprint arXiv: 2409.15790.

- Kou J, Lu X, Yin A, Zhang W, Fang X, et al. (2024) Beyond Isolated Fixes: A Comprehensive Survey on Hallucination Mitigation with a Three-Dimensional Taxonomy and Integrative Framework. 7th International Conference on Universal Village (UV), IEEE, pp. 1-60.

- Steayesh P (2025) Human in the Loop: Interpretive and Participatory AI in Research. Graduate Center Digital Initiatives. Research Paper.

- Müller-Czygan G (2021) HELIP® and “Anyway Strategy” – Two Human-Centred Methods for Successful Digitalization in the Water Industry, Annals of social science & management studies.