The Theory of Data Transmission Through -High- Energies From Yesterday’s Radio Waves to Today’s Laser!

Mohammad Maghferati1 and Pourya Zarshenas2*

1M.B.A Student of University of Northampton, Niloofar Abi Health and Sports Complex Management, England

2Master of Technology Management (R&D Branch), South Tehran Branch, Islamic Azad University (IAU), Tehran, Iran, Universal Scientific Education and Research Network (USERN), Tehran, Iran

Submission: July 24, 2023; Published: August 17, 2023

*Corresponding author: Pourya Zarshenas, Master of Technology Management (R&D Branch), South Tehran Branch, Islamic Azad University (IAU), Tehran, Iran, Universal Scientific Education and Research Network (USERN), Tehran, Iran

Pourya Z. The Theory of Data Transmission Through -High- Energies From Yesterday’s Radio Waves to Today’s Laser! Recent Adv Petrochem Sci. 2023; 8(1): 555728. DOI: 10.19080/RAPSCI.2023.08.555728

Abstract

Data transmission through radio waves is not a new phenomenon. However, the underlying idea, that data can be carried by electromagnetic energy, can be extended to other types of electromagnetic energy. This article, which sets out to explain the theory of data transmission through electromagnetic energy waves, describes different aspects of this issue in several sections. In physics, electromagnetism is an interaction that occurs between particles with electric charge via electromagnetic fields. The electromagnetic force is one of the four fundamental forces of nature.

Keywords: Energy; Data Transmission; Radio Waves; Electromagnetic Energy; Electromagnetic Waves; Infrared; Ultraviolet; Gamma Waves; X-Rays

Introduction

Electromagnetism

In physics, electromagnetism is an interaction that occurs between particles with electric charge via electromagnetic fields. The electromagnetic force is one of the four fundamental forces of nature. It is the dominant force in the interactions of atoms and molecules. Electromagnetism can be thought of as a combination of electrostatics and magnetism, two distinct but closely intertwined phenomena. Electrostatic forces occur between any two charged particles, causing an attraction between particles with opposite charges and repulsion between particles with the same charge, while magnetism is an interaction that occurs exclusively between charged particles in relative motion. These two effects combine to create electromagnetic fields in the vicinity of charged particles, which can accelerate other charged particles via the Lorentz force. At high energy, the weak force and electromagnetic force are unified as a single electroweak force.
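As a rough illustration of the Lorentz force mentioned above, the following sketch evaluates F = q(E + v × B) for a single charged particle. The function names, the proton example, and the field values are illustrative assumptions and do not come from the article.

```python
# Minimal sketch of the Lorentz force F = q (E + v x B) in SI units.
# All names and numbers here are illustrative assumptions.

ELEMENTARY_CHARGE = 1.602176634e-19  # coulombs (exact SI value)


def cross(a, b):
    """Cross product of two 3-component vectors given as tuples."""
    return (
        a[1] * b[2] - a[2] * b[1],
        a[2] * b[0] - a[0] * b[2],
        a[0] * b[1] - a[1] * b[0],
    )


def lorentz_force(q, e_field, velocity, b_field):
    """Return the force (newtons) on a charge q moving through E and B."""
    v_cross_b = cross(velocity, b_field)
    return tuple(q * (e_field[i] + v_cross_b[i]) for i in range(3))


# Hypothetical case: a proton moving along +x at 1e5 m/s through a
# 0.5 T magnetic field along +z, with no electric field.
force = lorentz_force(
    ELEMENTARY_CHARGE,
    (0.0, 0.0, 0.0),
    (1.0e5, 0.0, 0.0),
    (0.0, 0.0, 0.5),
)
print(force)  # the only non-zero component points along -y
```

In this configuration the force is perpendicular to both the velocity and the magnetic field, which is why a magnetic field alone bends the path of a moving charge without changing its speed.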

The electromagnetic force is responsible for many of the chemical and physical phenomena observed in daily life. The electrostatic attraction between atomic nuclei and their electrons holds atoms together. Electric forces also allow different atoms to combine into molecules, including the macromolecules such as proteins that form the basis of life. Meanwhile, magnetic interactions between the spin and angular momentum magnetic moments of electrons also play a role in chemical reactivity; such relationships are studied in spin chemistry. Electromagnetism also plays a crucial role in modern technology: electric energy production, transformation and distribution; light, heat, and sound production and detection; fiber optic and wireless communication; sensors; computation; electrolysis; electroplating; and mechanical motors and actuators.

Electromagnetism has been studied since ancient times. Many ancient civilizations, including the Greeks and the Mayans, created wide-ranging theories to explain lightning, static electricity, and the attraction between magnetized pieces of iron ore. However, it was not until the late 18th century that scientists began to develop a mathematical basis for understanding the nature of electromagnetic interactions. In the 18th and 19th centuries, prominent scientists and mathematicians such as Coulomb, Gauss and Faraday developed namesake laws which helped to explain the formation and interaction of electromagnetic fields. This process culminated in the 1860s with the formulation of Maxwell’s equations, a set of four partial differential equations which provide a complete description of classical electromagnetic fields. Besides providing a sound mathematical basis for the relationships between electricity and magnetism that scientists had been exploring for centuries, Maxwell’s equations also predicted the existence of self-sustaining electromagnetic waves. Maxwell postulated that such waves make up visible light, which was later shown to be true. Indeed, gamma rays, X-rays, ultraviolet, visible, infrared radiation, microwaves, and radio waves were all determined to be electromagnetic radiation differing only in their range of frequencies (Figure 1).

In the modern era, scientists have continued to refine the theory of electromagnetism to consider the effects of modern physics, including quantum mechanics and relativity. Indeed, the theoretical implications of electromagnetism, particularly the establishment of the speed of light based on properties of the “medium” of propagation (permeability and permittivity), helped inspire Einstein’s theory of special relativity in 1905. Meanwhile, the field of quantum electrodynamics (QED) has modified Maxwell’s equations to be consistent with the quantized nature of matter. In QED, the electromagnetic field is expressed in terms of discrete particles known as photons, which are also the physical quanta of light. Today, there exist many problems in electromagnetism that remain unsolved, such as the existence of magnetic monopoles and the mechanism by which some organisms can sense electric and magnetic fields.

History of the Theory

Originally, electricity and magnetism were considered to be two separate forces. This view changed with the publication of James Clerk Maxwell’s 1873 A Treatise on Electricity and Magnetism [1], in which the interactions of positive and negative charges were shown to be mediated by one force. There are four main effects resulting from these interactions, all of which have been clearly demonstrated by experiments:

• Electric charges attract or repel one another with a force inversely proportional to the square of the distance between them: unlike charges attract, like ones repel [2].

• Magnetic poles (or states of polarization at individual points) attract or repel one another in a manner like positive and negative charges and always exist as pairs: every north pole is yoked to a south pole [3].

• An electric current inside a wire creates a corresponding circumferential magnetic field outside the wire. Its direction (clockwise or counterclockwise) depends on the direction of the current in the wire [4].

• A current is induced in a loop of wire when it is moved toward or away from a magnetic field, or a magnet is moved towards or away from it; the direction of current depends on that of the movement [4].

In April 1820, Hans Christian Ørsted observed that an electrical current in a wire caused a nearby compass needle to move. At the time of discovery, Ørsted did not suggest any satisfactory explanation of the phenomenon, nor did he try to represent the phenomenon in a mathematical framework. However, three months later he began more intensive investigations [5,6]. Soon thereafter he published his findings, proving that an electric current produces a magnetic field as it flows through a wire. The CGS unit of magnetic field strength (oersted) is named in honor of his contributions to the field of electromagnetism [7]. His findings resulted in intensive research throughout the scientific community in electrodynamics. They influenced French physicist André-Marie Ampère’s development of a single mathematical form to represent the magnetic forces between current-carrying conductors. Ørsted’s discovery also represented a major step toward a unified concept of energy.

This unification, which was observed by Michael Faraday, extended by James Clerk Maxwell, and partially reformulated by Oliver Heaviside and Heinrich Hertz, is one of the key accomplishments of 19th-century mathematical physics [8]. It has had far-reaching consequences, one of which was the understanding of the nature of light. Unlike what was proposed by the electromagnetic theory of that time, light and other electromagnetic waves are at present seen as taking the form of quantized, self-propagating oscillatory electromagnetic field disturbances called photons. Different frequencies of oscillation give rise to the different forms of electromagnetic radiation, from radio waves at the lowest frequencies, to visible light at intermediate frequencies, to gamma rays at the highest frequencies.

Ørsted was not the only person to examine the relationship between electricity and magnetism. In 1802, Gian Domenico Romagnosi, an Italian legal scholar, deflected a magnetic needle using a Voltaic pile. The actual setup of the experiment is not completely clear, nor is it clear whether current flowed across the needle. An account of the discovery was published in 1802 in an Italian newspaper, but it was largely overlooked by the contemporary scientific community, because Romagnosi seemingly did not belong to this community [9]. An earlier (1735), and often neglected, connection between electricity and magnetism was reported by Dr. Cookson [10-14]. The account stated: “A tradesman at Wakefield in Yorkshire, having put up a great number of knives and forks in a large box ... and having placed the box in the corner of a large room, there happened a sudden storm of thunder, lightning, &c. ... The owner emptying the box on a counter where some nails lay, the persons who took up the knives, which lay on the nails, observed that the knives took up the nails. On this the whole number was tried, and found to do the same, and that, to such a degree as to take up large nails, packing needles, and other iron things of considerable weight.”

Classical Electrodynamics

In 1600, William Gilbert proposed, in his De Magnete, that electricity and magnetism, while both capable of causing attraction and repulsion of objects, were distinct effects [15]. Mariners had noticed that lightning strikes had the ability to disturb a compass needle. The link between lightning and electricity was not confirmed until Benjamin Franklin’s proposed experiments were conducted on 10 May 1752 by Thomas-François Dalibard of France, who used a 40-foot-tall (12 m) iron rod instead of a kite and successfully extracted electrical sparks from a cloud [16,17]. One of the first to discover and publish a link between man-made electric current and magnetism was Gian Romagnosi, who in 1802 noticed that connecting a wire across a voltaic pile deflected a nearby compass needle. However, the effect did not become widely known until 1820, when Ørsted performed a similar experiment [18]. Ørsted’s work influenced Ampère to produce a theory of electromagnetism that set the subject on a mathematical foundation [19].

A theory of electromagnetism, known as classical electromagnetism, was developed by various physicists during the period between 1820 and 1873, when it culminated in the publication of a treatise by James Clerk Maxwell, which unified the preceding developments into a single theory and discovered the electromagnetic nature of light [20]. In classical electromagnetism, the behavior of the electromagnetic field is described by a set of equations known as Maxwell’s equations, and the electromagnetic force is given by the Lorentz force law [21-25]. One of the peculiarities of classical electromagnetism is that it is difficult to reconcile with classical mechanics, but it is compatible with special relativity. According to Maxwell’s equations, the speed of light in vacuum is a universal constant that is dependent only on the electrical permittivity and magnetic permeability of free space. This violates Galilean invariance, a long-standing cornerstone of classical mechanics. One way to reconcile the two theories (electromagnetism and classical mechanics) is to assume the existence of a luminiferous aether through which the light propagates. However, subsequent experimental efforts failed to detect the presence of the aether. After important contributions of Hendrik Lorentz and Henri Poincaré, in 1905, Albert Einstein solved the problem with the introduction of special relativity, which replaced classical kinematics with a new theory of kinematics compatible with classical electromagnetism. In addition, relativity theory implies that in moving frames of reference, a magnetic field transforms to a field with a nonzero electric component and, conversely, an electric field transforms to a field with a nonzero magnetic component, thus firmly showing that the phenomena are two sides of the same coin. Hence the term “electromagnetism”.
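The statement that the speed of light in vacuum depends only on the permittivity and permeability of free space can be checked numerically through the standard relation c = 1/√(ε₀μ₀). The sketch below is illustrative only; the two constant values are CODATA 2018 recommended values, which are an assumption brought in here and are not quoted in the article.

```python
import math

# Assumed CODATA 2018 recommended values (not taken from the article).
EPSILON_0 = 8.8541878128e-12  # vacuum permittivity, F/m
MU_0 = 1.25663706212e-6       # vacuum permeability, H/m

# Speed of light predicted by Maxwell's equations from the two constants.
c_from_constants = 1.0 / math.sqrt(EPSILON_0 * MU_0)

print(f"c from permittivity and permeability: {c_from_constants:.0f} m/s")
```

The result agrees with the defined value of 299792458 m/s to within the precision of the two input constants.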

Electromagnetic Spectrum

The electromagnetic spectrum is the range of frequencies (the spectrum) of electromagnetic radiation and their respective wavelengths and photon energies. The electromagnetic spectrum covers electromagnetic waves with frequencies ranging from below one hertz to above 10²⁵ hertz, corresponding to wavelengths from thousands of kilometers down to a fraction of the size of an atomic nucleus. This frequency range is divided into separate bands, and the electromagnetic waves within each frequency band are called by different names; beginning at the low-frequency (long-wavelength) end of the spectrum these are: radio waves, microwaves, infrared, visible light, ultraviolet, X-rays, and gamma rays at the high-frequency (short-wavelength) end. The electromagnetic waves in each of these bands have different characteristics, such as how they are produced, how they interact with matter, and their practical applications. There is no known limit for long and short wavelengths. Extreme ultraviolet, soft X-rays, hard X-rays and gamma rays are classified as ionizing radiation because their photons have enough energy to ionize atoms, causing chemical reactions. Radiation of visible light and longer wavelengths is classified as nonionizing radiation because it has insufficient energy to cause these effects (Figure 2).

Humans have always been aware of visible light and radiant heat, but for most of history it was not known that these phenomena were connected or were representatives of a more extensive principle. The ancient Greeks recognized that light traveled in straight lines and studied some of its properties, including reflection and refraction. Light was intensively studied from the beginning of the 17th century, leading to the invention of important instruments like the telescope and microscope. Isaac Newton was the first to use the term spectrum for the range of colors that white light could be split into with a prism. Starting in 1666, Newton showed that these colors were intrinsic to light and could be recombined into white light. A debate arose over whether light had a wave nature or a particle nature, with René Descartes, Robert Hooke and Christiaan Huygens favoring a wave description and Newton favoring a particle description. Huygens had a well-developed theory from which he was able to derive the laws of reflection and refraction. Around 1801, Thomas Young measured the wavelength of a light beam with his two-slit experiment, thus conclusively demonstrating that light was a wave.

In 1800, William Herschel discovered infrared radiation [5]. He was studying the temperature of different colors by moving a thermometer through light split by a prism. He noticed that the highest temperature was beyond red. He theorized that this temperature change was due to “calorific rays”, a type of light ray that could not be seen. The next year, Johann Ritter, working at the other end of the spectrum, noticed what he called “chemical rays” (invisible light rays that induced certain chemical reactions). These behaved similarly to visible violet light rays but were beyond them in the spectrum [6]. They were later renamed ultraviolet radiation. The study of electromagnetism began in 1820 when Hans Christian Ørsted discovered that electric currents produce magnetic fields (Oersted’s law). Light was first linked to electromagnetism in 1845, when Michael Faraday noticed that the polarization of light traveling through a transparent material responded to a magnetic field. During the 1860s, James Clerk Maxwell developed four partial differential equations (Maxwell’s equations) for the electromagnetic field. Two of these equations predicted the possibility and behavior of waves in the field. Analyzing the speed of these theoretical waves, Maxwell realized that they must travel at a speed close to the known speed of light. This startling coincidence in value led Maxwell to infer that light itself is a type of electromagnetic wave. Maxwell’s equations predicted an infinite range of frequencies of electromagnetic waves, all traveling at the speed of light. This was the first indication of the existence of the entire electromagnetic spectrum.

Maxwell’s predicted waves included waves at very low frequencies compared to infrared, which in theory might be created by oscillating charges in an ordinary electrical circuit of a certain type. Attempting to prove Maxwell’s equations and detect such low frequency electromagnetic radiation, in 1886, the physicist Heinrich Hertz built an apparatus to generate and detect what are now called radio waves. Hertz found the waves and was able to infer (by measuring their wavelength and multiplying it by their frequency) that they traveled at the speed of light. Hertz also demonstrated that the new radiation could be both reflected and refracted by various dielectric media, in the same manner as light. For example, Hertz was able to focus the waves using a lens made of tree resin. In a later experiment, Hertz similarly produced and measured the properties of microwaves. These new types of waves paved the way for inventions such as the wireless telegraph and the radio. In 1895, Wilhelm Roentgen noticed a new type of radiation emitted during an experiment with an evacuated tube subjected to a high voltage. He called this radiation “x-rays” and found that they were able to travel through parts of the human body but were reflected or stopped by denser matter such as bones. Before long, many uses were found for this radiography.

The last portion of the electromagnetic spectrum was filled with the discovery of gamma rays. In 1900, Paul Villard was studying the radioactive emissions of radium when he identified a new type of radiation that he at first thought consisted of particles like known alpha and beta particles, but with the power of being far more penetrating than either. However, in 1910, British physicist William Henry Bragg demonstrated that gamma rays are electromagnetic radiation, not particles, and in 1914, Ernest Rutherford (who had named them gamma rays in 1903 when he realized that they were fundamentally different from charged alpha and beta particles) and Edward Andrade measured their wavelengths, and found that gamma rays were like X-rays, but with shorter wavelengths. The wave-particle debate was rekindled in 1901 when Max Planck discovered that light is absorbed only in discrete “quanta”, now called photons, implying that light has a particle nature. This idea was made explicit by Albert Einstein in 1905 but was never accepted by Planck and many other contemporaries. The modern position of science is that electromagnetic radiation has both a wave and a particle nature, the wave-particle duality. The contradictions arising from this position are still being debated by scientists and philosophers.

Electromagnetic waves are typically described by any of the following three physical properties: the frequency f, wavelength λ, or photon energy E. Frequencies observed in astronomy range from 2.4×10²³ Hz (1 GeV gamma rays) down to the local plasma frequency of the ionized interstellar medium (~1 kHz). Wavelength is inversely proportional to the wave frequency, so gamma rays have very short wavelengths that are fractions of the size of atoms, whereas wavelengths on the opposite end of the spectrum can be indefinitely long. Photon energy is directly proportional to the wave frequency, so gamma ray photons have the highest energy (around a billion electron volts), while radio wave photons have very low energy (around a femtoelectronvolt). These relations are illustrated by the following equations:

$$f = \frac{c}{\lambda}, \qquad f = \frac{E}{h}, \qquad E = \frac{hc}{\lambda}$$

Where:

• c = 299792458 m/s is the speed of light in vacuum

• h = 6.62607015×10⁻³⁴ J·s ≈ 4.135667696×10⁻¹⁵ eV·s is the Planck constant.
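The proportionalities between frequency, wavelength, and photon energy described above can be sketched in a few lines of Python. The function names are hypothetical, and the joule-per-electronvolt factor is the exact SI value, which is not quoted in the article.

```python
# Sketch of the relations between f, wavelength, and photon energy E.
C = 299_792_458.0            # speed of light in vacuum, m/s
H = 6.62607015e-34           # Planck constant, J*s
JOULES_PER_EV = 1.602176634e-19  # exact SI value (assumption: not in the text)


def wavelength_from_frequency(frequency_hz):
    """Vacuum wavelength in metres: lambda = c / f."""
    return C / frequency_hz


def photon_energy_ev(frequency_hz):
    """Photon energy in electronvolts: E = h f."""
    return H * frequency_hz / JOULES_PER_EV


def frequency_from_energy_ev(energy_ev):
    """Frequency in hertz of a photon with the given energy: f = E / h."""
    return energy_ev * JOULES_PER_EV / H


# A 1 GeV gamma-ray photon, the astronomical example quoted in the text.
f_gamma = frequency_from_energy_ev(1.0e9)
print(f"1 GeV photon: f = {f_gamma:.3e} Hz, "
      f"wavelength = {wavelength_from_frequency(f_gamma):.3e} m")
```

For the 1 GeV example the computed frequency is about 2.4×10²³ Hz, matching the figure quoted for the highest frequencies observed in astronomy.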

Whenever electromagnetic waves travel in a medium with matter, their wavelength is decreased. Wavelengths of electromagnetic radiation, whatever medium they are traveling through, are usually quoted in terms of the vacuum wavelength, although this is not always explicitly stated. Generally, electromagnetic radiation is classified by wavelength into radio wave, microwave, infrared, visible light, ultraviolet, X-rays and gamma rays. The behavior of EM radiation depends on its wavelength. When EM radiation interacts with single atoms and molecules, its behavior also depends on the amount of energy per quantum (photon) it carries. Spectroscopy can detect a much wider region of the EM spectrum than the visible wavelength range of 400 nm to 700 nm in a vacuum. A common laboratory spectroscope can detect wavelengths from 2 nm to 2500 nm. Detailed information about the physical properties of objects, gases, or even stars can be obtained from this type of device. Spectroscopes are widely used in astrophysics. For example, many hydrogen atoms emit a radio wave photon that has a wavelength of 21.12 cm. Also, frequencies of 30 Hz and below can be produced by and are important in the study of certain stellar nebulae [8], and frequencies as high as 2.9×10²⁷ Hz have been detected from astrophysical sources [9].
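As a small worked example of the two points above, the sketch below shortens a vacuum wavelength inside a medium using the standard relation λ = λ₀/n and converts the quoted hydrogen wavelength to a frequency. The refractive index of 1.33 (roughly that of water for visible light) and the function names are illustrative assumptions.

```python
C = 299_792_458.0  # speed of light in vacuum, m/s


def wavelength_in_medium(vacuum_wavelength_m, refractive_index):
    """Wavelength inside a medium: the vacuum wavelength divided by n."""
    return vacuum_wavelength_m / refractive_index


def frequency_from_wavelength(vacuum_wavelength_m):
    """Frequency in hertz for a given vacuum wavelength: f = c / lambda."""
    return C / vacuum_wavelength_m


# Red light at 700 nm entering a medium with n = 1.33 (assumed value).
red_in_medium = wavelength_in_medium(700e-9, 1.33)
print(f"700 nm in vacuum -> {red_in_medium * 1e9:.1f} nm in the medium")

# The hydrogen radio emission quoted in the text at 21.12 cm.
hydrogen_hz = frequency_from_wavelength(0.2112)
print(f"21.12 cm -> {hydrogen_hz / 1e9:.3f} GHz")
```

The frequency is unchanged when a wave enters a medium, which is why wavelengths are conventionally quoted for vacuum.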

The types of electromagnetic radiation are broadly classified into the following classes (regions, bands, or types):

• Gamma radiation

• X-ray radiation

• Ultraviolet radiation

• Visible light

• Infrared radiation

• Microwave radiation

• Radio waves

This classification goes in the increasing order of wavelength, which is characteristic of the type of radiation. There are no precisely defined boundaries between the bands of the electromagnetic spectrum; rather they fade into each other like the bands in a rainbow (which is the sub-spectrum of visible light). Radiation of each frequency and wavelength (or in each band) has a mix of properties of the two regions of the spectrum that bound it. For example, red light resembles infrared radiation in that it can excite and add energy to some chemical bonds and indeed must do so to power the chemical mechanisms responsible for photosynthesis and the working of the visual system. The distinction between X-rays and gamma rays is partly based on sources: the photons generated from nuclear decay or other nuclear and subnuclear/particle processes are always termed gamma rays, whereas X-rays are generated by electronic transitions involving highly energetic inner atomic electrons [10-12]. In general, nuclear transitions are much more energetic than electronic transitions, so gamma rays are more energetic than X-rays, but exceptions exist. By analogy to electronic transitions, muonic atom transitions are also said to produce X-rays, even though their energy may exceed 6 megaelectronvolts (0.96 pJ) [13], whereas there are many (77 known to be less than 10 keV (1.6 fJ)) low-energy nuclear transitions (e.g., the 7.6 eV (1.22 aJ) nuclear transition of thorium-229m), and, despite being one million-fold less energetic than some muonic X-rays, the emitted photons are still called gamma rays due to their nuclear origin (Figure 3).

The convention that EM radiation that is known to come from the nucleus is always called “gamma ray” radiation is the only convention that is universally respected, however. Many astronomical gamma ray sources (such as gamma ray bursts) are known to be too energetic (in both intensity and wavelength) to be of nuclear origin. Quite often, in high-energy physics and in medical radiotherapy, very high energy EMR (in the > 10 MeV region), which is of higher energy than any nuclear gamma ray, is not called X-ray or gamma ray, but is instead referred to by the generic term “high-energy photons”. The region of the spectrum where a particular observed electromagnetic radiation falls is reference frame-dependent (due to the Doppler shift for light), so EM radiation that one observer would say is in one region of the spectrum could appear to an observer moving at a substantial fraction of the speed of light with respect to the first to be in another part of the spectrum. For example, consider the cosmic microwave background. It was produced when matter and radiation decoupled, by the de-excitation of hydrogen atoms to the ground state. These photons were from Lyman series transitions, putting them in the ultraviolet (UV) part of the electromagnetic spectrum. Now this radiation has undergone enough cosmological red shift to put it into the microwave region of the spectrum for observers moving slowly (compared to the speed of light) with respect to the cosmos (Figure 4).
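The frame dependence described above can be illustrated with the standard relativistic Doppler formula for motion along the line of sight, λ_observed = λ_source·√((1 + β)/(1 − β)), where β is the recession speed as a fraction of the speed of light. The following is a minimal sketch; the function name and the 550 nm example are illustrative assumptions, not taken from the article.

```python
import math


def doppler_shifted_wavelength(source_wavelength_m, beta):
    """Observed wavelength for relative motion along the line of sight.

    beta is the recession speed divided by the speed of light:
    positive when source and observer move apart (red shift),
    negative when they approach each other (blue shift).
    """
    if not -1.0 < beta < 1.0:
        raise ValueError("beta must lie strictly between -1 and 1")
    return source_wavelength_m * math.sqrt((1.0 + beta) / (1.0 - beta))


# Green light (550 nm, visible) seen by an observer receding at half
# the speed of light.
observed = doppler_shifted_wavelength(550e-9, 0.5)
print(f"550 nm emitted -> {observed * 1e9:.1f} nm observed")
```

In this hypothetical case light emitted in the visible band is observed at a wavelength longer than 760 nm, that is, in the near infrared.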

Rationale for Names

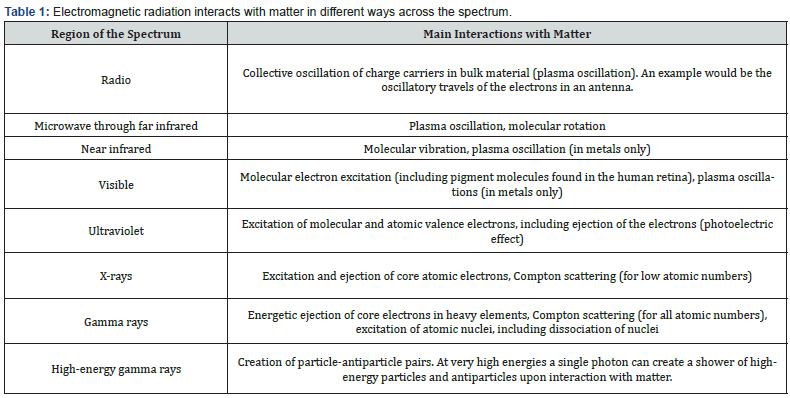

Electromagnetic radiation interacts with matter in different ways across the spectrum. These types of interaction are so different that historically different names have been applied to different parts of the spectrum, as though these were different types of radiation. Thus, although these “different kinds” of electromagnetic radiation form a quantitatively continuous spectrum of frequencies and wavelengths, the spectrum remains divided for practical reasons arising from these qualitative interaction differences (Table 1).

Types of Radiation

Radio waves

Radio waves are emitted and received by antennas, which consist of conductors such as metal rod resonators. In artificial generation of radio waves, an electronic device called a transmitter generates an AC electric current which is applied to an antenna. The oscillating electrons in the antenna generate oscillating electric and magnetic fields that radiate away from the antenna as radio waves. In reception of radio waves, the oscillating electric and magnetic fields of a radio wave couple to the electrons in an antenna, pushing them back and forth, creating oscillating currents which are applied to a radio receiver. Earth’s atmosphere is mainly transparent to radio waves, except for layers of charged particles in the ionosphere which can reflect certain frequencies. Radio waves are extremely widely used to transmit information across distances in radio communication systems such as radio broadcasting, television, two-way radios, mobile phones, communication satellites, and wireless networking. In a radio communication system, a radio frequency current is modulated with an information-bearing signal in a transmitter by varying either the amplitude, frequency, or phase, and applied to an antenna. The radio waves carry the information across space to a receiver, where they are received by an antenna and the information extracted by demodulation in the receiver. Radio waves are also used for navigation in systems like Global Positioning System (GPS) and navigational beacons and locating distant objects in radiolocation and radar. They are also used for remote control, and for industrial heating (Figure 5).
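The modulation and demodulation steps described above can be sketched with a simple amplitude-shift keying scheme, in which each bit selects one of two carrier amplitudes. This is only a toy model under stated assumptions: the carrier frequency, sample rate, amplitude levels, and function names are all hypothetical, and real radio systems add filtering, synchronization, and noise handling that are omitted here.

```python
import math


def ask_modulate(bits, carrier_hz=10_000.0, sample_rate=80_000.0,
                 samples_per_bit=80, low=0.2, high=1.0):
    """Vary the carrier amplitude with the information-bearing bits."""
    signal = []
    for i, bit in enumerate(bits):
        amplitude = high if bit else low
        for n in range(samples_per_bit):
            t = (i * samples_per_bit + n) / sample_rate
            signal.append(amplitude * math.cos(2 * math.pi * carrier_hz * t))
    return signal


def ask_demodulate(signal, samples_per_bit=80, low=0.2, high=1.0):
    """Recover bits by rectifying and averaging each bit period."""
    # The mean of |cos| over whole cycles is 2/pi, so the decision
    # threshold sits halfway between the two expected envelope levels.
    threshold = 0.5 * (low + high) * (2.0 / math.pi)
    bits = []
    for start in range(0, len(signal), samples_per_bit):
        chunk = signal[start:start + samples_per_bit]
        envelope = sum(abs(x) for x in chunk) / len(chunk)
        bits.append(1 if envelope > threshold else 0)
    return bits


message = [1, 0, 1, 1, 0, 0, 1, 0]
received = ask_demodulate(ask_modulate(message))
print(received == message)
```

Frequency or phase modulation would follow the same pattern, with the bit controlling the carrier frequency or phase instead of its amplitude.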

The use of the radio spectrum is strictly regulated by governments, coordinated by the International Telecommunication Union (ITU) which allocates frequencies to different users for different uses. Microwaves are radio waves of short wavelength, from about 10 centimeters to one millimeter, in the SHF and EHF frequency bands. Microwave energy is produced with klystron and magnetron tubes, and with solid state devices such as Gunn and IMPATT diodes. Although they are emitted and absorbed by short antennas, they are also absorbed by polar molecules, coupling to vibrational and rotational modes, resulting in bulk heating. Unlike higher frequency waves such as infrared and visible light which are absorbed mainly at surfaces, microwaves can penetrate materials and deposit their energy below the surface. This effect is used to heat food in microwave ovens, and for industrial heating and medical diathermy.

Microwaves are the main wavelengths used in radar, and are used for satellite communication, and wireless networking technologies such as Wi-Fi. The copper cables (transmission lines) which are used to carry lower-frequency radio waves to antennas have excessive power losses at microwave frequencies, and metal pipes called waveguides are used to carry them. Although at the low end of the band the atmosphere is mainly transparent, at the upper end of the band absorption of microwaves by atmospheric gases limits practical propagation distances to a few kilometers. Terahertz radiation or sub-millimeter radiation is a region of the spectrum from about 100 GHz to 30 terahertz (THz) between microwaves and far infrared which can be regarded as belonging to either band. Until recently, the range was rarely studied, and few sources existed for microwave energy in the so-called terahertz gap, but applications such as imaging and communications are now appearing. Scientists are also looking to apply terahertz technology in the armed forces, where high-frequency waves might be directed at enemy troops to incapacitate their electronic equipment [15]. Terahertz radiation is strongly absorbed by atmospheric gases, making this frequency range useless for long-distance communication.

Infrared Radiation

The infrared part of the electromagnetic spectrum covers the range from roughly 300 GHz to 400 THz (1 mm-750 nm). It can be divided into three parts [4]:

• Far-infrared, from 300 GHz to 30 THz (1 mm-10 μm). The lower part of this range may also be called microwaves or terahertz waves. This radiation is typically absorbed by so-called rotational modes in gas-phase molecules, by molecular motions in liquids, and by phonons in solids. The water in Earth’s atmosphere absorbs so strongly in this range that it renders the atmosphere in effect opaque. However, there are certain wavelength ranges (“windows”) within the opaque range that allow partial transmission and can be used for astronomy. The wavelength range from approximately 200 μm up to a few mm is often referred to as sub-millimeter astronomy, reserving far infrared for wavelengths below 200 μm.

• Mid-infrared, from 30 to 120 THz (10-2.5 μm). Hot objects (black-body radiators) can radiate strongly in this range, and human skin at normal body temperature radiates strongly at the lower end of this region. This radiation is absorbed by molecular vibrations, where the different atoms in a molecule vibrate around their equilibrium positions. This range is sometimes called the fingerprint region, since the mid-infrared absorption spectrum of a compound is very specific for that compound.

• Near-infrared, from 120 to 400 THz (2,500-750 nm). Physical processes that are relevant for this range are like those for visible light. The highest frequencies in this region can be detected directly by some types of photographic film, and by many types of solid-state image sensors for infrared photography and videography.
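The three infrared sub-bands listed above can be expressed as a small lookup, sketched below using the frequency limits quoted in the text. Since the text itself stresses that band boundaries are not sharply defined, the hard cut-offs here are a simplifying assumption, as are the function names and the example wavelengths (1550 nm is a wavelength commonly used in optical-fiber links).

```python
C = 299_792_458.0  # speed of light in vacuum, m/s

# (name, lower frequency in Hz, upper frequency in Hz), from the text.
INFRARED_BANDS = [
    ("far-infrared", 300e9, 30e12),
    ("mid-infrared", 30e12, 120e12),
    ("near-infrared", 120e12, 400e12),
]


def infrared_band(frequency_hz):
    """Return the infrared sub-band name, or None if outside the infrared."""
    for name, lower, upper in INFRARED_BANDS:
        if lower <= frequency_hz < upper:
            return name
    return None


def band_for_wavelength(vacuum_wavelength_m):
    """Classify by vacuum wavelength using f = c / lambda."""
    return infrared_band(C / vacuum_wavelength_m)


for wavelength in (100e-6, 5e-6, 1550e-9):
    print(f"{wavelength * 1e6:g} um -> {band_for_wavelength(wavelength)}")
```

Converting the band edges back to wavelengths with the same relation reproduces the 1 mm, 10 μm, 2.5 μm, and 750 nm limits given in parentheses in the text.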

Visible Light

Above infrared in frequency comes visible light. The Sun emits its peak power in the visible region, although integrating the entire emission power spectrum through all wavelengths shows that the Sun emits slightly more infrared than visible light [16]. Visible light is the part of the EM spectrum to which the human eye is most sensitive. Visible light (and near-infrared light) is typically absorbed and emitted by electrons in molecules and atoms that move from one energy level to another. This action allows the chemical mechanisms that underlie human vision and plant photosynthesis. The light that excites the human visual system is a very small portion of the electromagnetic spectrum. A rainbow shows the optical (visible) part of the electromagnetic spectrum; infrared (if it could be seen) would be located just beyond the red side of the rainbow, whilst ultraviolet would appear just beyond the opposite violet end. Electromagnetic radiation with a wavelength between 380 nm and 760 nm (400-790 terahertz) is detected by the human eye and perceived as visible light. Other wavelengths, especially near infrared (longer than 760 nm) and ultraviolet (shorter than 380 nm), are also sometimes referred to as light, especially when the visibility to humans is not relevant. White light is a combination of lights of different wavelengths in the visible spectrum. Passing white light through a prism splits it up into the several colors of light observed in the visible spectrum between 400 nm and 780 nm. If radiation having a frequency in the visible region of the EM spectrum reflects off an object, say, a bowl of fruit, and then strikes the eyes, this results in visual perception of the scene. The brain’s visual system processes the multitude of reflected frequencies into different shades and hues, and through this insufficiently understood psychophysical phenomenon, most people perceive a bowl of fruit.

At most wavelengths, however, the information carried by electromagnetic radiation is not directly detected by human senses. Natural sources produce EM radiation across the spectrum, and technology can also manipulate a broad range of wavelengths. Optical fiber transmits light that, although not necessarily in the visible part of the spectrum (it is usually infrared), can carry information. The modulation is like that used with radio waves (Figure 6).

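The band edges quoted in this section can be cross-checked with the relation f = c/λ, and the corresponding photon energy follows from E = hf. The snippet below is a minimal sketch of that check; the function names are illustrative and do not come from the article.

```python
# Cross-check of the wavelength/frequency pairs quoted in the text.
# Function names are illustrative assumptions, not part of the article.
C = 299_792_458.0        # speed of light in vacuum, m/s
H = 6.62607015e-34       # Planck constant, J*s
EV = 1.602176634e-19     # joules per electronvolt


def frequency_thz(wavelength_nm: float) -> float:
    """Frequency in terahertz for a vacuum wavelength given in nanometres."""
    return C / (wavelength_nm * 1e-9) / 1e12


def photon_energy_ev(wavelength_nm: float) -> float:
    """Photon energy in electronvolts for a vacuum wavelength in nanometres."""
    return H * C / (wavelength_nm * 1e-9) / EV


# Band edges as stated in the text.
edges_nm = {
    "mid-infrared, long edge (10 um)": 10_000,
    "mid/near-infrared boundary (2.5 um)": 2_500,
    "red edge of visible (760 nm)": 760,
    "violet edge of visible (380 nm)": 380,
}

for label, nm in edges_nm.items():
    print(f"{label}: {frequency_thz(nm):.1f} THz, {photon_energy_ev(nm):.2f} eV")
```

The computed frequencies land close to the rounded values given in the text (about 30 THz, 120 THz, and roughly 400-790 THz for the visible band).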

Ultraviolet Radiation

Next in frequency comes ultraviolet (UV). The wavelength of UV rays is shorter than the violet end of the visible spectrum but longer than the X-ray. UV is the longest wavelength radiation whose photons are energetic enough to ionize atoms, separating electrons from them, and thus causing chemical reactions.

Short wavelength UV and the shorter wavelength radiation above it (X-rays and gamma rays) are called ionizing radiation, and exposure to them can damage living tissue, making them a health hazard. UV can also cause many substances to glow with visible light; this is called fluorescence. At the middle range of UV, UV rays cannot ionize but can break chemical bonds, making molecules unusually reactive. Sunburn, for example, is caused by the disruptive effects of middle range UV radiation on skin cells, which is the main cause of skin cancer. UV rays in the middle range can irreparably damage the complex DNA molecules in the cells producing thymine dimers making it a very potent mutagen. The Sun emits UV radiation (about 10% of its total power), including extremely short wavelength UV that could potentially destroy most life on land (ocean water would provide some protection for life there). However, most of the Sun’s damaging UV wavelengths are absorbed by the atmosphere before they reach the surface. The higher energy (shortest wavelength) ranges of UV (called “vacuum UV”) are absorbed by nitrogen and, at longer wavelengths, by simple diatomic oxygen in the air. Most of the UV in the mid-range of energy is blocked by the ozone layer, which absorbs strongly in the important 200-315 nm range, the lower energy part of which is too long for ordinary dioxygen in air to absorb. This leaves less than 3% of sunlight at sea level in UV, with all this remainder at the lower energies. The remainder is UV-A, along with some UV-B. The very lowest energy range of UV between 315 nm and visible light (called UV-A) is not blocked well by the atmosphere but does not cause sunburn and does less biological damage. However, it is not harmless and does create oxygen radicals, mutations, and skin damage [25-30].

X-rays

After UV come X-rays, which, like the upper ranges of UV, are also ionizing. However, due to their higher energies, X-rays can also interact with matter by means of the Compton effect. Hard X-rays have shorter wavelengths than soft X-rays, and because they can pass through many substances with little absorption, they can be used to ‘see through’ objects with ‘thicknesses’ less than that equivalent to a few meters of water. One notable use is diagnostic X-ray imaging in medicine (a process known as radiography). X-rays are useful as probes in high-energy physics. In astronomy, the accretion disks around neutron stars and black holes emit X-rays, enabling studies of these phenomena. X-rays are also emitted by stellar coronae and are strongly emitted by some types of nebulae. However, X-ray telescopes must be placed outside the Earth’s atmosphere to see astronomical X-rays, since the full depth of the atmosphere (an areal density of 1000 g/cm²) is opaque to X-rays, being equivalent to a 10-meter thickness of water. This is sufficient to block almost all astronomical X-rays.

Gamma Rays

After hard X-rays come gamma rays, which were discovered by Paul Ulrich Villard in 1900. These are the most energetic photons, having no defined lower limit to their wavelength. In astronomy they are valuable for studying high-energy objects or regions; however, as with X-rays, this can only be done with telescopes outside the Earth’s atmosphere. Gamma rays are used experimentally by physicists for their penetrating ability and are produced by a few radioisotopes. They are used for irradiation of foods and seeds for sterilization, and in medicine they are occasionally used in radiation cancer therapy [18]. More commonly, gamma rays are used for diagnostic imaging in nuclear medicine, an example being PET scans. The wavelength of gamma rays can be measured with high accuracy through the effects of Compton scattering.

Throughout most of the electromagnetic spectrum, spectroscopy can be used to separate waves of different frequencies, producing a spectrum of the constituent frequencies. Spectroscopy is used to study the interactions of electromagnetic waves with matter.

Given the widespread use of mobile devices and the growing performance requirements of mobile users, shifting the complex computing and storage requirements of mobile terminals to the cloud is an effective way to overcome the limitations of those terminals, and this has led to the rapid development of mobile cloud computing. How to reduce and balance the energy consumption of mobile terminals and clouds during data transmission, while improving energy efficiency and user experience, is one of the problems that green cloud computing needs to solve. This paper focuses on energy optimization in the data transmission process of mobile cloud computing. Because the data generation rate is variable and the wireless connection is unstable, and because transmission delay must be bounded, a strategy based on optimal stopping theory is proposed to minimize the average transmission energy per unit of data.

Demand for digital services is growing rapidly. Since 2010, the number of internet users worldwide has more than doubled, while global internet traffic has expanded 20-fold. Rapid improvements in energy efficiency have, however, helped moderate growth in energy demand from data centers and data transmission networks, which each account for 1-1.5% of global electricity use. Significant additional government and industry efforts on energy efficiency, RD&D, and decarbonizing electricity supply and supply chains are necessary to curb energy demand and reduce emissions rapidly over the coming decade to align with the Net Zero by 2050 Scenario. Digital technologies have direct and indirect effects on energy use and emissions and hold enormous potential to help (or hinder) global clean energy transitions, including through the digitalization of the energy sector. The data centers and data transmission networks that underpin digitalization accounted for around 300 Mt CO2-eq in 2020 (including embodied emissions), equivalent to 0.9% of energy-related GHG emissions (or 0.6% of total GHG emissions). Since 2010, emissions have grown only modestly despite rapidly growing demand for digital services, thanks to energy efficiency improvements, renewable energy purchases by information and communications technology (ICT) companies and broader decarbonization of electricity grids in many regions. However, to get on track with the Net Zero Scenario, emissions must halve by 2030.

Global data center electricity use in 2021 was 220-320 TWh, or around 0.9-1.3% of global final electricity demand. This excludes energy used for cryptocurrency mining, which was 100-140 TWh in 2021. Since 2010, data center energy use (excluding crypto) has grown only moderately despite the strong growth in demand for data center services, thanks in part to efficiency improvements in IT hardware and cooling and a shift away from small, inefficient enterprise data centers towards more efficient cloud and hyperscale data centers. However, the rapid growth in workloads handled by large data centers has resulted in rising energy use in this segment over the past several years (increasing by 10-30% per year). Overall data center energy use (excluding crypto) appears likely to continue growing moderately over the next few years, but longer-term trends are highly uncertain. Although data center electricity consumption globally has grown only moderately, some smaller countries with expanding data center markets are seeing rapid growth. For example, data center electricity use in Ireland has more than tripled since 2015, accounting for 14% of total electricity consumption in 2021. In Denmark, data center energy use is projected to triple by 2025 to account for around 7% of the country’s electricity use. Globally, data transmission networks consumed 260-340 TWh in 2021, or 1.1-1.4% of global electricity use. The energy efficiency of data transmission has improved rapidly over the past decade: fixed-line network energy intensity has halved every two years in developed countries, and mobile-access network energy efficiency has improved by 10-30% annually in recent years.

Internet traffic globally was up 23% in 2021, lower than the 40-50% pandemic-driven surge in 2020. GSMA members reported that their network data traffic increased by 31% in 2021 while total electricity use by operators rose by 5%. Data from major European telecom network operators analyzed by Lundén et al. mirror these global efficiency trends. Electricity consumption by reporting companies, representing about 36% of European subscriptions and 8% of global subscriptions, increased by only 1% between 2015 and 2018, while data traffic tripled. Strong growth in demand for data network services is expected to continue, driven primarily by data-intensive activities such as video streaming, cloud gaming and augmented and virtual reality applications. However, these data-intensive services may have only limited impacts on energy use in the near term, since energy use does not increase proportionally with traffic volumes. In addition, the average energy consumption of video streaming is low compared with other everyday activities, with end-user devices such as televisions consuming the majority. But if streaming and other data-intensive services add to peak internet traffic, the build-out of additional infrastructure to accommodate higher anticipated peak capacity could raise overall network energy use in the long run.
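The efficiency figures quoted above can be turned into changes in energy intensity with simple arithmetic. The sketch below assumes that energy intensity is defined as electricity use divided by traffic volume; the function names are illustrative, and the inputs are the percentages stated in the text.

```python
def intensity_change(traffic_growth: float, electricity_growth: float) -> float:
    """Relative change in electricity per unit of traffic.

    Both arguments are fractional growth rates (0.31 means +31%).
    Assumes intensity = electricity use / traffic volume.
    """
    return (1 + electricity_growth) / (1 + traffic_growth) - 1


def halving_factor(years: float, halving_period_years: float = 2.0) -> float:
    """Remaining fraction of energy intensity if it halves every period."""
    return 0.5 ** (years / halving_period_years)


# GSMA members, 2021: traffic +31%, electricity +5%.
gsma = intensity_change(0.31, 0.05)
# European operators, 2015-2018: traffic tripled (+200%), electricity +1%.
europe = intensity_change(2.00, 0.01)
# Fixed-line intensity halving every two years, over a decade.
decade = halving_factor(10)

print(f"GSMA intensity change in 2021: {gsma:.1%}")
print(f"European intensity change 2015-2018: {europe:.1%}")
print(f"Fixed-line intensity left after 10 years: {decade:.4f} of the start value")
```

Under these assumptions, the GSMA figures correspond to roughly a 20% drop in electricity per unit of traffic in one year, and the European figures to roughly a two-thirds drop over three years.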

Mobile data traffic is also projected to continue growing quickly, quadrupling by 2027. 5G’s share of mobile data traffic is projected to rise to 60% in 2027, up from 10% in 2021. Although 5G networks are expected to be more energy efficient than 4G networks, the overall energy and emissions impacts of 5G are still uncertain. Demand for data center services is also poised to rise, driven in part by emerging digital technologies such as blockchain (particularly proof-of-work) and machine learning. For example, Bitcoin, the most prominent example of proof-of-work blockchain and the most valuable cryptocurrency by market capitalization, consumed an estimated 105 TWh in 2021, 20 times more than it used in 2016. Ethereum, second behind Bitcoin in terms of market capitalization and energy use, consumed around 17 TWh in 2021. In September 2022, Ethereum transitioned from a proof-of-work consensus mechanism to proof-of-stake, which is expected to slash energy use by 99.95%. As blockchain applications become more widespread, understanding and managing their energy use implications may become increasingly important for energy analysts and policy makers.
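To put the blockchain figures above on a common scale, the short calculation below derives the values they imply. It is a rough sketch: applying the 99.95% reduction to the 2021 Ethereum estimate is an assumption made here for illustration, not a figure given in the text.

```python
# Figures quoted in the text (TWh, 2021).
BITCOIN_2021_TWH = 105.0
ETHEREUM_2021_TWH = 17.0

# "20 times more than it used in 2016" implies this 2016 level.
bitcoin_2016_twh = BITCOIN_2021_TWH / 20

# Assumption: the expected 99.95% cut is applied to the 2021 estimate.
ethereum_post_merge_twh = ETHEREUM_2021_TWH * (1 - 0.9995)

print(f"Implied Bitcoin use in 2016: {bitcoin_2016_twh:.2f} TWh")
print(f"Implied Ethereum use under proof-of-stake: "
      f"{ethereum_post_merge_twh * 1000:.1f} GWh")
```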

Machine learning (ML) is another area of demand growth, with potentially significant implications for data center energy use in upcoming years. While the amount of computing power needed to train the largest ML models is growing rapidly, it is unclear how quickly overall ML-related energy use in data centers is increasing. At Facebook, computing demand for ML training (increasing by 150% per year) and inference (increasing by 105% per year) have outpaced overall data center energy use (up 40% per year) in recent years. Google reports that ML accounted for only 10-15% of their total energy use, despite representing 70-80% of overall computing demand. The nature of data center demand appears likely to evolve over the coming decade. 5G, the Internet of things and the metaverse are likely to increase demand for low-latency computing, increasing demand for edge data centers. User devices such as smartphones - increasingly equipped with ML accelerators - are set to increase the use of ML with uncertain effects on overall energy demand. ICT companies invest considerable sums in renewable energy projects to protect themselves from power price volatility, reduce their environmental impact and improve their brand reputation. Hyperscale data center operators in particular lead in corporate renewable energy procurement, mainly through power purchase agreements (PPAs). In fact, Amazon, Microsoft, Meta, and Google are the four largest purchasers of corporate renewable energy PPAs, having contracted over 38 GW to date (including 15 GW in 2021). Apple (2.8 TWh), Google (18.3 TWh) and Meta (9.4 TWh) purchased or generated enough renewable electricity to match 100% of their operational electricity consumption in 2021 (primarily in data centers). Amazon consumed 30.9 TWh (85% renewable) across their operations in 2021, with a goal of achieving 100% renewables by 2025.

However, matching 100% of annual demand with renewable energy purchases or certificates does not mean that data centers are powered exclusively by renewable sources. The variability of wind and solar sources may not match a data center’s demand profile, and the renewable energy may be purchased from projects in a different grid or region from where demand is located.

Renewable energy certificates are unlikely to lead to additional renewable energy production, resulting in inflated estimates of real-world emissions mitigation. Google and Microsoft have announced 2030 targets to source and match zero-carbon electricity on a 24/7 basis within each grid where demand is located. A growing number of organizations are working towards 24/7 carbon-free energy. Although a few network operators have also achieved 100% renewables (including BT, TIM, and T-Mobile), data transmission network operators are generally lagging data center operators in renewable energy purchase and use. Compared with data centers, which are typically large, centralized, and more flexible in location, telecommunication network operators have many sites (with limited flexibility for site selection). As a result, accessing renewable energy is noted as a challenge in many markets, particularly in emerging and developing economies with less well-developed energy markets.
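The gap between annual matching and the 24/7 matching described above can be illustrated with a toy calculation. The demand and generation profiles below are made-up numbers chosen only to show the effect, and the function names are illustrative.

```python
def annual_match(demand, renewable):
    """Share of total demand covered when volumes are netted over the whole period."""
    return min(1.0, sum(renewable) / sum(demand))


def hourly_match(demand, renewable):
    """Share of demand covered when supply must meet demand in each period."""
    matched = sum(min(d, r) for d, r in zip(demand, renewable))
    return matched / sum(demand)


# Hypothetical profiles (MWh per period): flat load, solar-like supply.
demand = [10, 10, 10, 10]
renewable = [0, 25, 15, 0]

print(f"Annual-style matching: {annual_match(demand, renewable):.0%}")
print(f"Period-by-period matching: {hourly_match(demand, renewable):.0%}")
```

In this toy case the purchased volume equals total demand, so annual matching reports full coverage, while only half of the demand is actually met in the periods when it occurs.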

1. In January 2021, data center operators and industry associations in Europe launched the Climate Neutral Data Centre Pact, which includes a pledge to make data centers climate-neutral by 2030 and has intermediate (2025) targets for power usage effectiveness and carbon-free energy.

2. The Open Compute Project is a collaborative community focused on redesigning hardware technology to efficiently support the growing demands on computing infrastructure.

3. The 24/7 Carbon-free Energy Compact, coordinated by Sustainable Energy for All and the United Nations, includes three data center operators - Google (a pioneer of 24/7 carbon-free energy), Microsoft and Iron Mountain.

4. DIMPACT is a collaborative project convened by Carnstone and the University of Bristol to measure and report the carbon footprint of digital services. DIMPACT participants include some of the largest media companies in the world, including Netflix, the BBC, and the Economist.

Improve Data Collection and Transparency

Improving data collection and sharing on ICTs and their energy-use characteristics can help inform energy analysis and policymaking, particularly in segments where data is limited or not available (e.g., small data centers). National research programs can develop better modelling tools to improve understanding and forecasting of the energy and sustainability impacts of data centers and networks. Governments can also play an important role in helping to develop appropriate indicators to track progress on energy efficiency and sustainability, building on efforts by industry and researchers.

Enacting Policies to Encourage Energy Efficiency, Demand Response and Clean Energy Procurement

Governments can be instrumental in implementing policies and programs to improve the energy efficiency of data transmission networks while ensuring reliability and resilience. Potential policies include network device energy efficiency standards, improving metrics and incentives for efficient network operations, and supporting international technology protocols.

Data centers could also become even more energy efficient, while providing flexibility to the grid. Governments can offer guidance, incentives, and standards to encourage further energy efficiency, while regulations and price signals could help incentivize demand-side flexibility. For example, allowing for some flexibility in ancillary service requirements (e.g., longer notice periods, longer response times) may make it easier for data center operators to participate in demand response programs.

The ICT sector has been a leader in corporate renewable energy procurement, particularly in North America and Europe. But the Asia Pacific region has lagged in terms of renewable energy use due in part to the limited availability of renewables, regulatory complexity, and high costs. Regulatory frameworks should incentivize varied, affordable, and additional renewable power purchasing options.

Waste heat from data centers could help to heat nearby commercial and residential buildings or supply industrial heat users, reducing energy use from other sources. Waste heat arrangements should be assessed on a site-by-site basis against a range of criteria, including economic viability, technical feasibility, offtaker demand, and impact on energy efficiency. Given the high costs of new infrastructure, proximity to users of waste heat or existing infrastructure is needed to ensure that waste heat is used. To overcome potential barriers to waste heat utilization, such as achieving sufficiently high temperatures and contractual and legal challenges, policy makers, data center operators and district heating suppliers need to work together on adequate incentives and guarantees.

Collect and Report Energy Use and Other Sustainability Data

ICT companies can help energy researchers and policy makers better understand how changing demand for ICT services translates into overall energy demand by sharing reliable, comprehensive, and timely data. For example, data center and telecommunication network operators should track and publicly report energy use and other sustainability indicators (e.g., emissions, water use). Cloud data center operators should provide robust and transparent tools for their customers to measure, report and reduce the GHG emissions of cloud services. Industry groups that collect self-reported energy and sustainability information from members (e.g., Bitcoin Mining Council) should share underlying data and methodologies with researchers to increase the credibility of their sustainability claims [31-40].

ICT companies should set ambitious efficiency and CO2 emission targets and implement concrete measures to track progress and achieve these goals. This includes alignment with the ICT industry’s science-based target to reduce GHG emissions by 45% between 2020 and 2030. For data center operators, this includes following energy efficiency best practices, locating new data centers in areas with suitable climates and low water stress, and adopting the most energy-efficient servers and storage, network, and cooling equipment. All companies along the ICT value chain must do their part to increase systemwide efficiency, including hardware manufacturers, software developers and customers. Several major data centers and telecom network operators have set and/or achieved targets to use 100% clean electricity on an annual matching basis. More ambitious approaches to carbon-free operations can have even greater environmental benefits, specifically by accounting for both location and time. ML and other digital technologies can help achieve such goals by actively shifting computing tasks to times and regions in which low-carbon sources are plentiful.

Increase the Purchase and Use of Clean Electricity and Other Clean Energy Technologies

In cooperation with electricity utilities, regulators and project developers, data center operators investing in renewable energy should identify projects that maximize benefits for the local grid and reduce overall GHG emissions. This could also include the use of emerging clean energy technologies such as battery storage and green hydrogen to increase flexibility and contribute to system-wide decarbonization.

Invest in RD&D for Efficient Next-Generation Computing and Communications Technologies

Demand for data center services will continue to grow strongly, driven by media streaming and emerging technologies such as artificial intelligence, virtual reality, 5G and blockchain. As the efficiency gains of current technologies decelerate (or even stall) in upcoming years, more efficient new technologies will be needed to keep pace with growing data demand.

In addition to their operational energy use and emissions, data centers and data transmission networks are also responsible for “embodied” life cycle emissions, including from raw material extraction, manufacturing, transport and end-of-life disposal or recycling. Companies should ramp up efforts to reduce embodied emissions across their supply chains, including devices and buildings. Data centers and data transmission networks also have other environmental impacts beyond energy use and greenhouse gas emissions, such as water use and the generation of electronic waste. Companies should adopt technologies and approaches to minimize water use, particularly in drought-prone areas.

The popularity of mobile devices such as smartphones and tablet PCs has enabled people not only to communicate anytime and anywhere, but also to use applications on those devices for online shopping, social networking, news, and other services, which is a great convenience. However, mobile devices have limited computing power and storage capacity, and their capabilities are further constrained by battery life. Mobile devices cannot be charged anytime and anywhere while on the move, and the user experience suffers when battery power runs low. To address these shortcomings, mobile devices offload computing tasks to cloud platforms through high-speed wireless communications to reduce computing overhead and save energy, extending battery life and speeding up applications. Mobile devices also alleviate storage shortages by periodically backing up their data to the cloud. This has led to the birth of a computing paradigm, mobile cloud computing (MCC), that leverages resources in the cloud to help mobile devices collect, store, and process data, extending the capabilities of resource-constrained mobile devices. MCC relies on data communication between mobile devices and public clouds. Due to the widespread use of mobile devices, data traffic has increased dramatically in recent years. Total global mobile data was forecast by IDC to reach 40,000 EB in 2020, with a compound annual growth rate of 36%. As a result, the energy efficiency of mass data transmission between mobile devices and the cloud has become a key issue in MCC. Studying this problem is conducive to building a green mobile network environment.

Researchers have studied energy-saving issues in MCC extensively and proposed different mechanisms and methods. For example, energy-saving issues are addressed in [1, 2]. In [1], energy-saving data transmission between mobile devices and the cloud is achieved by dynamically selecting an energy-efficient link and delaying data transmission over poor connections. The goal is to minimize the time-average energy consumption of mobile devices while ensuring the stability of both device-side and cloud-side queues. In [3,4], a new online task scheduling or control algorithm is proposed for optimizing the throughput-energy trade-off on mobile devices using the Lyapunov optimization framework, without requiring any statistical information about traffic arrivals and link bandwidth. In [5], a novel data transmission optimization method called DTM, based on a mobile agent deployed between the mobile device layer and the cloud service layer, is proposed to optimize large-volume data transmission for mobile clients in MCC, which helps to reduce energy consumption and waiting time. In [6], a layered heterogeneous mobile cloud architecture for high data rate transmission is proposed. Then, an energy efficiency scheme based on joint data packet fragmentation and cooperative transmission is designed, and the energy efficiency corresponding to different packet sizes and cloud sizes is analyzed. In [7], the authors proposed eTime, an energy-efficient data transmission strategy between cloud and mobile devices, based on Lyapunov optimization. eTime relies solely on current state information to make global energy-delay trade-off decisions and can actively and adaptively seize periods of good connectivity to prefetch frequently used data while deferring delay-tolerant data during bad connectivity. Similarly to [7], inspired by the popularity of prefetch-friendly or delay-tolerant apps, Liu et al. [8] designed and implemented an application-layer transmission protocol, AppATP, which leverages cloud computing to manage data transmissions for mobile apps, transferring data to and from mobile devices in an energy-efficient manner. In [9], an online control algorithm based on Lyapunov optimization theory is proposed to optimize data transmission between mobile devices and the cloud. The algorithm can make control decisions for application scheduling, interface selection, and packet dropping to minimize the combined utility of network energy cost and packet dropping penalty.

In summary, researchers have done a lot of work on the energy efficiency of data transmission between mobile devices and clouds. Despite this, many factors have not been considered, such as dynamic data arrival and transmission delay. Therefore, based on factors such as energy consumption, bandwidth, delay, and dynamic arrival of data, this paper proposes a strategy to minimize the transmission energy consumption per unit of data by using the secretary problem from optimal stopping theory.

Theoretical Background and Problem Description

System Model

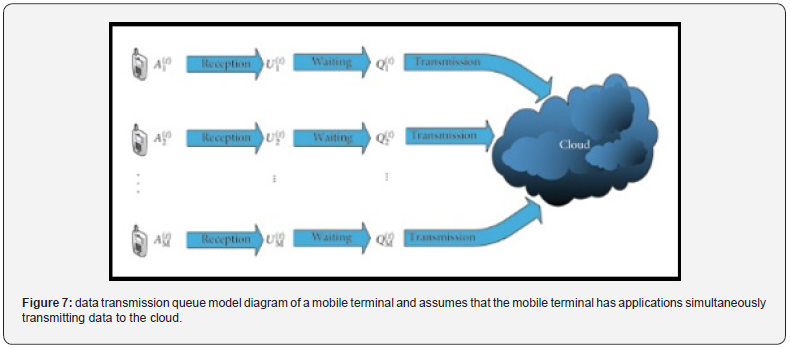

The research goal of this paper is to optimize the energy consumed by mobile terminals transmitting data to the cloud in MCC while satisfying the transmission delay requirement. Figure 7 shows the data transmission queue model of a mobile terminal, which is assumed to have multiple applications simultaneously transmitting data to the cloud. The transmission rate varies randomly with fluctuations in the quality of the wireless channel. When the transmission power of the mobile terminal is fixed, to cope with the randomness and unpredictability of the wireless connection, the mobile terminal selects a channel of good quality, that is, one with a high transmission rate, to transmit data, which reduces the average energy consumed per unit of data. In fact, when the channel state changes randomly, the mobile terminal chooses a better time to transmit data according to the instantaneous channel condition information, which is a distributed opportunistic scheduling problem [11].
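The reason a higher transmission rate lowers the energy per unit of data can be seen from a one-line relation: at fixed transmit power P and rate R, sending one bit takes 1/R seconds and therefore costs P/R joules. The sketch below illustrates this with hypothetical numbers; it is not the energy model of the paper, whose details are not given in this section.

```python
def energy_per_bit(power_w: float, rate_bps: float) -> float:
    """Energy in joules spent per transmitted bit at constant transmit power."""
    if rate_bps <= 0:
        raise ValueError("rate must be positive")
    return power_w / rate_bps


# Hypothetical values for illustration only.
TX_POWER_W = 1.0
for rate_bps in (1e6, 4e6):
    microjoules = energy_per_bit(TX_POWER_W, rate_bps) * 1e6
    print(f"{rate_bps / 1e6:.0f} Mbit/s -> {microjoules:.2f} microjoules per bit")
```

With the power held fixed, quadrupling the rate cuts the energy per bit to a quarter, which is why waiting for a good channel can pay off when the delay budget allows it.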

The distributed opportunistic scheduling problem can be solved by optimal stopping theory [12,13]. In optimal stopping theory, a decision-maker chooses a suitable moment to stop observing and take a given action, based on continuously observed random variables, so as to maximize the expected payoff. Optimal stopping theory has been studied in the field of wireless communications. For example, in [10], communication is deferred, up to an acceptable deadline, until the best expected channel conditions are found, to minimize the energy consumption of the wireless device and extend its battery life. In [14], building on optimal stopping theory, the fundamental trade-off between the throughput gain from better channel conditions and the cost of further channel probing is characterized in ad hoc networks. In [15], the authors quantify the power consumption of heartbeats of real-world mobile instant messaging (IM) apps through extensive measurements and propose a device-to-device (D2D) based heartbeat relaying framework for IM apps to reduce the energy spent on heavy signaling traffic. As discussed in [16], 5G is a new paradigm that brings new technologies to overcome the challenges of the next-generation wireless mobile network. The heterogeneous environment of 5G (such as network functions virtualization (NFV), software-defined networking (SDN), and cloud computing) will cause frequent handoffs in small cells where users join and leave frequently. Besides transmission performance, the security and privacy of cloud and wireless networks are also important for users, as in [17]. Furthermore, blockchain-based fair payment for outsourcing services has been studied in cloud computing in [18].

This paper mainly studies the energy-saving optimization problem of data transmission with a time delay requirement and a variable data generation rate in MCC. A data transmission energy optimization strategy based on optimal stopping theory is proposed. Optimal stopping theory is adopted so that the mobile terminal stops probing the channel at a time when the wireless channel transmission rate is high, which minimizes the energy consumption of data transmission. The specific research ideas are as follows. First, a data transmission queue model with multiple applications is constructed, in which the data generation rate is dynamic because this is more realistic. Considering both the energy consumption and the delay in the transmission process, the goal is to minimize the average energy consumption per unit of data. Based on the secretary problem in optimal stopping theory, a rule is proposed: the first k candidates are rejected, and from the (k + 1)th candidate onward, the first candidate who is better than all of the first k candidates is hired. In this case, the average absolute rank of the selected candidate is the smallest.
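The skip-then-select rule above can be illustrated with a minimal sketch in which the "candidates" are the transmission rates observed in successive time slots. The function names, the uniform rate distribution, the fixed transmit power, and the choice k = n/e are all assumptions made for illustration. In particular, n/e is the classical cutoff that maximizes the chance of picking the single best candidate, which is not necessarily the value of k that the strategy described here would use.

```python
import math
import random


def secretary_stop(values, k):
    """Skip the first k values, then return the index of the first value
    that beats all of them. If none does, fall back to the last index
    (the deadline forces a transmission)."""
    if not values:
        raise ValueError("values must be non-empty")
    k = max(0, min(k, len(values) - 1))
    threshold = max(values[:k]) if k > 0 else float("-inf")
    for i in range(k, len(values)):
        if values[i] > threshold:
            return i
    return len(values) - 1


def energy_per_bit(power_watts, rate_bps):
    """Energy spent per transmitted bit at a fixed transmit power."""
    return power_watts / rate_bps


def simulate(n_slots=20, trials=2000, power_watts=1.0, seed=0):
    """Compare the stopping rule with 'transmit in the first slot'.

    Rates are drawn uniformly from 1 to 10 Mbit/s (a hypothetical channel).
    Returns (average J/bit with the rule, average J/bit without it).
    """
    rng = random.Random(seed)
    k = round(n_slots / math.e)  # assumed cutoff, see the note above
    with_rule = 0.0
    first_slot = 0.0
    for _ in range(trials):
        rates = [rng.uniform(1e6, 10e6) for _ in range(n_slots)]
        chosen = secretary_stop(rates, k)
        with_rule += energy_per_bit(power_watts, rates[chosen])
        first_slot += energy_per_bit(power_watts, rates[0])
    return with_rule / trials, first_slot / trials


if __name__ == "__main__":
    rule, naive = simulate()
    print(f"stopping rule: {rule:.3e} J/bit, first slot: {naive:.3e} J/bit")
```

Under these assumptions the terminal that waits for a good slot spends less energy per bit on average than one that transmits immediately, at the cost of a delay bounded by the number of slots.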

Contactless capacitive energy transmission, in short, capacitive energy transmission, is a technical alternative to contactless inductive energy transmission in terms of its functional principle. Whereas inductive energy transmission uses magnetically coupled windings, capacitive energy transmission uses the electric field between several flat electrodes. In direct comparison to the more established contactless inductive energy transmission, capacitive energy transmission is less suitable for transmitting energy over large mechanical distances or for achieving high transmitted power. There are applications for contactless transmission of low power where the advantages of capacitive energy transmission outweigh its restrictions, provided that conditions favorable to capacitive energy transmission are present. These principle-related advantages and limitations result directly from the structure of the actual capacitive transmission path specified by the functional principle. In general, the distance between the primary-side source and the secondary-side sink or load should be very small for capacitive energy transmission. The capacitance of the transmission path doubles with each halving of the distance between the two sides. Technically useful capacitances for energy transfer in the range of a few watts start in the two-digit picofarad range, which hardly allows electrode distances of more than 1 mm for palm-sized areas. In some applications, however, such a small distance is not an actual restriction, for example when the secondary side to be supplied rests mechanically on the primary side, as is the case with charging cradles for mobile phones. The advantages of capacitive energy transmission, on the other hand, would come into play in the example of the mobile phone charger.
In this example, the virtually negligible thickness of the actual transmission path is a potential advantage in terms of product design. Assuming a plastic housing, the entire transmission path can be produced by two metallizations on said housing. Together with a protective layer on the outside of the device, this typically results in a total layer thickness of several tens of μm. A comparably thin inductive transmission path would hardly be technically feasible or would be significantly limited in terms of power and efficiency due to the lack of copper cross-section.
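The relation between electrode distance and capacitance can be checked with the ideal parallel-plate formula C = ε0 · εr · A / d. The sketch below is only a rough estimate under stated assumptions: a single pair of flat electrodes, no fringing fields, air as the dielectric, and a hypothetical palm-sized area of 7 cm × 7 cm. A real transmission path needs a forward and a return electrode pair, which lowers the effective capacitance further.

```python
EPSILON_0 = 8.8541878128e-12  # vacuum permittivity in F/m


def plate_capacitance(area_m2, gap_m, relative_permittivity=1.0):
    """Ideal parallel-plate capacitance in farads (fringing ignored)."""
    if area_m2 <= 0 or gap_m <= 0:
        raise ValueError("area and gap must be positive")
    return EPSILON_0 * relative_permittivity * area_m2 / gap_m


if __name__ == "__main__":
    palm_area_m2 = 0.07 * 0.07  # assumed palm-sized electrode, 49 cm^2
    for gap_mm in (2.0, 1.0, 0.5):
        c_farads = plate_capacitance(palm_area_m2, gap_mm * 1e-3)
        print(f"gap {gap_mm:.1f} mm: {c_farads * 1e12:.1f} pF")
```

With these assumed dimensions, a 1 mm gap gives roughly 43 pF, which lies in the two-digit picofarad range, and each halving of the gap doubles the value.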

As part of the FVA 602-I research project, an application investigated in detail at the IEW addresses the capacitive power supply of sensors together with data transmission, in short, capacitive near-field telemetry. The environment in rolling bearings and gears under consideration here is often associated with thin and flat installation spaces due to its design. Here, there is no general necessity for bridging distances greater than approx. 0.5 mm. For the implementation of near-field telemetry in such test arrangements, simple production and high mechanical load capacity of the actual transmission path are decisive criteria. It is not uncommon for the transmission lines themselves to be made to order for a specific test series.

Sending Data via Laser Communication: More Effective and Secure than via Radio Frequencies

If the current corona era has taught us anything, it is how dependent we have become on the Internet. Access to the Internet has now become “a kind of human right,” says Will Crowcombe, systems engineer and technical project coordinator within the Laser Communication Program at the Netherlands Organisation for Applied Scientific Research (TNO). “I myself have two children, aged 7 and 9 respectively. If the Internet connection goes down at our house, it really is a tragedy.” Advancements in technology, such as self-driving cars and smart home appliances like thermostats, refrigerators, lights, and smoke detectors, have driven a huge increase in data traffic. All the Zooming, online education, Netflixing and computer games during lockdown periods have only compounded this. Getting all that data to another location quickly still poses quite a challenge, according to Crowcombe.

Radio Frequency Spectrum

Crowcombe: “The current spectrum of radio frequencies is so full that people are already fighting over the available space. Communication via radio frequencies can no longer cope with the explosive growth in data traffic. However, this is possible with the help of laser communication which transmits data via laser light. Hundreds or even thousands of satellites, which together form a communication network, are used for this purpose. This makes it possible to connect to any place on earth.” The reach of a laser communications network is not limited to cities and densely populated areas, Crowcombe goes on to explain. “If you look at the map of the Netherlands to see where you have internet coverage, it’s still disappointing. In the big cities and around roads it’s fine. However, if you go to sparsely populated areas, such as wooded areas, that is no longer the case. With laser communication, you can also provide an Internet connection in these remote areas, as well as on board ships and aircraft.” “The available bandwidth for laser communication is 1000 times greater than for ‘normal’ radio frequency communication. This opens much more room to send huge amounts of data.”

Security

Finally, according to Crowcombe, laser communication is a whole lot safer than radio frequency communication. “Laser communication uses narrow laser beams instead of wide radio signals. You can, if you align the laser beams properly, determine exactly where you want to send your information. This makes laser-satellite communication very suitable for purposes where data security is extremely important. Like when the Ministry of Defence sends confidential information.” Securing data is also crucial for banks and financial institutions, according to Crowcombe. “A hack on major financial transactions can have catastrophic consequences. Already, however, quantum computers are coming onto the market that can crack all existing codes. That makes these kinds of financial institutions extremely vulnerable. The same applies to other large companies and organizations, as has happened in a few cases recently in the Netherlands. Not to mention on a personal level: consumers are also susceptible to phishing.” “The good news is that the quantum properties of photons in laser beams can also be used to counter the potential threat of quantum computing. This is done with the help of so-called Quantum Keys. These are simply impossible to crack, even with quantum computers. That is also why large financial institutions are so interested in them. Unfortunately, for the time being, China is the only country that has made major strides in adopting this quantum key distribution technology. This is prompting all kinds of parties, such as the EU, to get a move on as well.”

Optimal Accuracy

The research at TNO is at the cutting edge of precision instrumentation and optics, a field in which the Netherlands has considerable experience and has built up a network with companies such as Philips and ASML. Crowcombe: “We focus mainly on communication between satellites with ground stations and aircraft, and between satellites themselves. In addition to the development of precise mechanisms, optical components, mirrors, and components for photonics, we are also working on developing technologies needed for future, more advanced terminals. Such as ‘TOmCAT’, which stands for ‘Terabit Optical Communication Adaptive Terminal’. The challenges that this entails can be compared to a laser pointer pen. You must imagine for a moment that you have to keep a light beam motionless on an object that is thousands of kilometers away. What’s more, you also must pass through the Earth’s atmosphere first. With Adaptive Optics, it becomes feasible to set up a stable connection despite these atmospheric turbulences.”

Scaling Up

In the meantime, at TNO, following a research phase with some preliminary test setups and demonstrations, the time has come to broaden the scope. Crowcombe: “The idea is to have those terminals that we have been working on produced on a larger scale, and to make them smaller, cheaper, and therefore more commercially viable. We want to do this with the help of public and private investors. By joining forces with the Dutch high tech and aerospace industry, we can gain a leading global position in the field of laser communication. Not only can this create thousands of high-value jobs for the Netherlands, but it will also ultimately benefit the consumer. After all, that’s what we’re all looking for in the end: to stay connected.”

Why Lasers?

As science instruments evolve to capture high-definition data like 4K video, missions will need expedited ways to transmit information to Earth. With laser communications, NASA can significantly accelerate the data transfer process and empower more discoveries. Laser communications will enable 10 to 100 times more data to be transmitted back to Earth than current radio frequency systems. It would take roughly nine weeks to transmit a complete map of Mars back to Earth with current radio frequency systems. With lasers, it would take about nine days. Additionally, laser communications systems are ideal for missions because they need less volume, weight, and power. Less mass means more room for science instruments, and less power means less of a drain on spacecraft power systems. These are all critically important considerations for NASA when designing and developing mission concepts. “LCRD will demonstrate all of the advantages of using laser systems and allow us to learn how to use those best operationally,” said Principal Investigator David Israel at NASA’s Goddard Space Flight Center in Greenbelt, Maryland. “With this capability further proven, we can start to implement laser communications on more missions, making it a standardized way to send and receive data.”

How it Works

Both radio waves and infrared light are electromagnetic radiation with wavelengths at different points on the electromagnetic spectrum. Like radio waves, infrared light is invisible to the human eye, but we encounter it every day with things like television remotes and heat lamps. Missions modulate their data onto the electromagnetic signals to traverse the distances between spacecraft and ground stations on Earth. As communication travels, the waves spread out. The infrared light used for laser communications differs from radio waves because the infrared light packs the data into significantly tighter waves, meaning ground stations can receive more data at once. While laser communications aren’t necessarily faster, more data can be transmitted in one downlink. Laser communications terminals in space use narrower beam widths than radio frequency systems, providing smaller “footprints” that can minimize interference or improve security by drastically reducing the geographic area where someone could intercept a communications link. However, a laser communications telescope pointing to a ground station must be exact when broadcasting from thousands or millions of miles away. A deviation of even a fraction of a degree can result in the laser missing its target entirely. Like a quarterback throwing a football to a receiver, the transmitting terminal needs to know where to send the signal so that the receiver can catch it in stride. NASA’s laser communications engineers have intricately designed laser missions to ensure this connection can happen.
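The difference in footprint size, and the sensitivity to pointing errors, can be estimated with a rough diffraction-limited sketch. Everything numeric here is an assumption chosen for illustration rather than a mission parameter: a 1550 nm infrared laser with a 10 cm telescope, a radio link at a wavelength of about 1.15 cm with a 1 m dish, a geosynchronous distance of 35,786 km, and the half-angle approximation 1.22 · λ / D for a circular aperture.

```python
import math

GEO_DISTANCE_M = 35_786_000.0  # assumed link distance (geosynchronous altitude)


def half_angle_rad(wavelength_m, aperture_m):
    """Approximate diffraction-limited half-angle of the beam in radians."""
    return 1.22 * wavelength_m / aperture_m


def footprint_diameter_m(distance_m, wavelength_m, aperture_m):
    """Approximate beam footprint diameter at the given distance."""
    return 2.0 * distance_m * math.tan(half_angle_rad(wavelength_m, aperture_m))


def pointing_miss_m(distance_m, error_deg):
    """How far the beam centre lands from the target for a pointing error."""
    return distance_m * math.tan(math.radians(error_deg))


if __name__ == "__main__":
    laser = footprint_diameter_m(GEO_DISTANCE_M, 1.55e-6, 0.10)  # assumed optics
    radio = footprint_diameter_m(GEO_DISTANCE_M, 0.0115, 1.0)    # assumed dish
    miss = pointing_miss_m(GEO_DISTANCE_M, 0.1)
    print(f"laser footprint: {laser / 1e3:.2f} km")
    print(f"radio footprint: {radio / 1e3:.0f} km")
    print(f"miss distance for a 0.1 degree error: {miss / 1e3:.1f} km")
```

Under these assumptions the laser footprint is on the order of a kilometre while the radio footprint is roughly a thousand kilometres wide, and a pointing error of 0.1 degree moves the beam centre by tens of kilometres, far outside the laser footprint.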

Laser Communications Relay Demonstration

Located in geosynchronous orbit, about 22,000 miles above Earth, LCRD will be able to support missions in the near-Earth region. LCRD will spend its first two years testing laser communications capabilities with numerous experiments to refine laser technologies further, increasing our knowledge about potential future applications. LCRD’s initial experiment phase will leverage the mission’s ground stations in California and Hawaii, Optical Ground Station 1 and 2, as simulated users. This will allow NASA to evaluate atmospheric disturbances on lasers and practice switching support from one user to the next. After the experiment phase, LCRD will transition to supporting space missions, sending and receiving data to and from satellites over infrared lasers to demonstrate the benefits of a laser communications relay system.