Phonological Encoding in Children who Stutter

Sangeetha Mahesh*, Geetha MP, Amulya S, Helga Maria Nivanka Ravel

Department of Clinical Services, Clinical Reader, All India Institute of Speech and Hearing, India

Submission: September 25, 2018; Published: October 04, 2018

*Corresponding author: Dr. Sangeetha Mahesh, Clinical Reader, Department of Clinical Services, All India Institute of Speech and Hearing, Mysuru, India.

How to cite this article: Sangeetha M, Geetha M, Amulya S. Phonological Encoding in Children who Stutter. Glob J Oto, 2018; 17(5): 555972. DOI: 10.19080/GJO.2018.17.555972

Abstract

Stuttering is defined as a temporal disruption of the successive and simultaneous programming of muscular movements required to produce a speech sound or its link to the next sound. A few models and theories posit that individuals who stutter have deficits in phonological encoding. The main aim of the study was to examine phonological encoding using phoneme monitoring in a silent naming task and to compare children who stutter with children who do not stutter. Thirty-four children aged 8 to 12 years who were diagnosed with stuttering of mild or greater severity, and thirty-four age- and gender-matched children with no stuttering, participated in the study. The experiment comprised a phoneme monitoring task. The study was conducted in two phases: (a) stimulus preparation and task design programming, and (b) administration of the tasks to the children who stutter (CWS) and children who do not stutter (CNS) groups. The reaction time and accuracy of the participants’ responses were measured automatically using DMDX software. In the phoneme monitoring task, CWS were slower and less accurate than CNS in monitoring the presence and absence of the target phoneme. It can be concluded that the CWS of the present study experience general monitoring deficits and, specifically, deficits in the phonological encoding process. The present study adds to the theoretical knowledge on the nature of stuttering in children, especially supporting the psycholinguistic model of stuttering.

Keywords: Phonological Encoding; Phoneme Monitoring Task; DMDX Software; Children who stutter; Children who do not stutter

Introduction

Peters and Starkweather believed that stuttering is associated with a lack of balance between the linguistic and motoric systems involved in speech production [1]. Stuttering has also been considered a disorder of language development, a view emphasized by Bloodstein [2]. These conceptions inspired researchers to investigate comprehensively the association between stuttering frequency and various linguistic variables. Numerous studies have investigated the impact on stuttering frequency of linguistic variables such as lexical retrieval Bloodstein & Gantwerk [3]; Helmreich & Bloodstein [4]; Dayalu, Kalinowski et al. [5], the morphological structure of words, and syntactic complexity Brundage & Ratner [6]; Hannah & Gardner [7], and have reported a strong relationship between linguistic factors and stuttering.

A few models and theories posit that individuals who stutter have deficits in phonological encoding, and phonological encoding deficits have therefore been considered one of the probable causes of stuttering. Levelt [8] defined phonological encoding as the processes involved in retrieving or building a phonetic or articulatory plan for each lemma or word and for the utterance as a whole. It has been proposed that phonological encoding involves three components: (a) generation of the segments that constitute words, (b) integration of sound segments with word frames, and (c) assignment of appropriate syllable stress Levelt [8]. This phonological encoding process serves as an interface between lexical processes and speech motor production Levelt [8]; Levelt, Roelofs et al. [11] and is significant for incremental speech planning and production. According to Levelt’s speech production model, self-monitoring of inner or silent speech occurs at the output of phonological encoding. Levelt [8] and Levelt et al. [11] argued that speakers monitor their speech output for errors in the speech plan before sending the code for articulatory planning and execution. Thus monitoring, which is considered a natural sub-process of speech production, requires access to sub-lexical units such as phonemes. In individuals who stutter, fluency breakdown is said to occur because of a faulty covert monitoring mechanism. According to the gestural linguistic model Browman & Goldstein [12]; Saltzman & Munhall [13], the phonological encoding process is closely associated with speech motor production.

Several studies have directly or indirectly provided evidence of an association between impaired phonological encoding and stuttering in children who stutter. Melnick, Conture, et al. [14] directly assessed phonological encoding skills in children who stutter using a priming task and found that the performance of the two groups, children who stutter (CWS) and children who do not stutter (CNS), was comparable in the related prime condition. Byrd, Conture et al. [15] used a picture naming auditory priming paradigm to directly assess phonological encoding skills in children with and without stuttering. The results showed that three-year-old CWS and CNS and five-year-old CWS were faster in the holistic priming condition, whereas five-year-old CNS were faster in the segmental condition. In other words, three-year-old CWS and CNS and five-year-old CWS were slower in the segmental priming condition. These findings were attributed to developmental differences in phonological encoding between the groups: by the age of five, CWS exhibit a delay in segmental encoding abilities when compared to neurotypical peers. This study therefore suggested a possible delay in the transition of phonemic competence from holistic to segmental processing in children who stutter [16].

In the Western context, Sasisekaran, Brady et al. [17] studied the phonological encoding process in older children who stutter, aged between 10 and 14 years, using phoneme monitoring during a silent picture naming task. The authors hypothesized that phoneme monitoring within words indicates the way phonemes are encoded in speech output. The results revealed that CWS were significantly slower than CNS in monitoring subsequent phonemes within bisyllabic words. No significant difference between the groups was found in the auditory tone monitoring task, and the percentage of errors made by the two groups in the phoneme and auditory tone monitoring tasks was comparable. Sasisekaran et al. [17] therefore stated that CWS experience temporal asynchronies in one or more processes leading up to phoneme monitoring.

Need for the Study

In summary, it is evident that there is a relationship between language and stuttering. The literature also supports the idea of phonological encoding deficits in adults and children who stutter. Various paradigms have been used to study these deficits; however, the results are inconclusive, and most of the paradigms used are indirect. None of these paradigms pinpointed phonological encoding deficits as the cause of stuttering; rather, they identified phonological encoding as one among various factors contributing to stuttering. Though numerous studies have provided evidence of altered efficiency in phonological encoding among children who stutter, none of them has clearly stated whether this altered performance is due to a delay in the timely encoding of phonemic segments during speech production, to the presence of more errors during the phonological encoding process, or to both.

The literature notes that the process of phonological encoding is obscured from direct observation because it is deeply embedded in the process of language formulation. In the Western context, there are very few direct sources of evidence that children who stutter have phonological encoding deficits, and in the Indian context no such direct evidence was found. Though studies have been conducted in the Western context, their results cannot be generalized to other languages, since English is stress timed and Kannada is syllable timed. Thus, the need arose to investigate phonological encoding skills in children who stutter aged between 8 and 12 years in the Indian context. To our knowledge, no studies have been performed on children in the Indian context in general or on Kannada speakers in particular.

Aim

The main aim of the study was to examine phonological encoding using phoneme monitoring in a silent naming task and to compare children who stutter with children who do not stutter.

Objectives

a. To determine whether there is any difference in the speed of phoneme monitoring (reaction time) between CWS and CNS and within the CWS group.

b. To determine whether there is any difference in the percentage of error responses in phoneme monitoring between CWS and CNS and within the CWS group.

Method and Materials

Participants

Inclusion Criteria for the Clinical Group

The present study included two groups,

Group I: The clinical group consisted of 34 Kannada-speaking individuals (10 with mild, 17 with moderate and 7 with severe stuttering) in the age range of 8-12 years, clinically diagnosed with stuttering by a speech-language pathologist.

Group II: The control group consisted of 34 age and gender matched Kannada speaking individuals.

Inclusion Criteria for the Typically Developing Group

Thirty-four age- and gender-matched children with no stuttering comprised the control group. All these children were right-handed native speakers of Kannada. They were matched with the clinical group for socioeconomic status using the NIMH socioeconomic status scale Venkatesan [18]. All participants in the control group were reported to have no history of sensory, neurological, communicative, academic, cognitive, intellectual or emotional disorders and no orofacial abnormalities. These participants were randomly recruited from Holy Trinity and Gangothri Public schools, Mysuru.

Test Materials Used

The following assessment tools were administered to the participants of both groups. Handedness preference was assessed using the Modified Laterality Preference Schedule Venkatesan [18]. To rule out group differences in vocabulary, articulation and cognitive skills, the semantic section of the Linguistic Profile Test Karanth, Ahuja, et al., the computerized re-standardized version of the Kannada Articulation Test Deepa & Savithri and the Cognitive Linguistic Assessment Protocol for Children (Anuroopa) were administered as part of screening. Only children who passed these screening tests were considered for the study.

The experiment of the present study included two tasks namely,

a) Picture Familiarization and Naming task

b) Phoneme Monitoring task

The picture familiarization task was presented prior to the phoneme monitoring task in order to familiarize the participants with the target pictures. The experimental protocol followed that of Sasisekaran, Brady et al. [17].

The present study was conducted in two phases

a) Stimulus Preparation and Task Design Programming

b) Administration of the tasks on Children who stutter (CWS) and Children who do not stutter (CNS) groups.

Phase 1: Stimulus preparation and Task Design Programming

Picture Familiarization and Naming task

Purpose: This task was done to familiarize the participants with the 34 target pictures and their names that were considered for the phoneme monitoring task. Its main purposes were to rule out the influence of lexical retrieval on the interpretation of the participants’ responses, to guide the participant to arrive at the target word and to avoid any confusion.

Stimulus: Seventeen phonemes (/ṭ/, /d̪/, /r/, /v/, /p/, /ḍ/, /dʒ/, /g/, /ʃ/, /k/, /tʃ/, /s/, /n/, /t̪/, /m/, /b/, /h/) were selected based on the mean percentage of highly dysfluent phonemes Sangeetha & Geetha [19]. Thirty-four Kannada bisyllabic nouns (CVCV) with a frequency value below 10 were considered; words with a frequency value below ten were regarded as the most frequently used words in Kannada. The frequency of each word was noted from the Morphophonemic analysis of the Kannada language by Ranganatha. The target phonemes occurred in the initial and medial positions of the target words. Five speech-language pathologists (SLPs) were asked to validate the thirty-four target pictures representing the 34 target nouns. The judges rated the target pictures on four parameters: image agreement (picture to name correspondence), name agreement (correspondence between the given name for the target noun and the name provided by the participants), word familiarity (how familiar the target noun is from experience) and image appropriateness (whether the representation of the target noun is appropriate to the age range). They responded using a 4-point rating scale for each parameter as follows: for image and name agreement: 0-no correspondence, 1-least correspondence, 2-partial correspondence, 3-most correspondence; for word familiarity: 0-unfamiliar, 1-least familiar, 2-partially familiar, 3-most familiar; for appropriateness: 0-absolutely inappropriate, 1-slightly inappropriate, 2-slightly appropriate, 3-absolutely appropriate. The target pictures with 75% agreement among the five judges were considered for the study. Out of the 34, five target pictures (/gu:ḍu/, /ʃiva/, /nari/, /ba:le/ and /t̪ale/) were rated as partial correspondence/familiar/appropriate by three judges, and these pictures were modified as per the suggestions given. The modified target pictures were validated again by the five SLPs and reached an agreement of 75% among the five judges.

Instrumentation: The target pictures were presented via computer.

Design: In this task, the thirty-four target pictures were presented manually in random order on the computer screen and the participants were asked to name the pictures overtly.

Phoneme Monitoring Task

Purpose

The task was designed to measure the participants’ response time in ms and accuracy in monitoring the presence or absence of target phonemes during silent picture naming.

Stimulus

In this task, the thirty-four pictures from the picture familiarization and naming task were used to elicit phoneme monitoring responses. The audio samples of the target phonemes were validated by five SLPs. The seventeen target phonemes were presented along with the vowel /a/, but the subjects were asked to monitor the target phoneme irrespective of the sound preceding or following it. The target pictures were presented in two blocks. In the first block, the thirty-four pictures occurred twice (once with the phoneme as a target, requiring a “Yes” response, and once without the phoneme as a target, requiring a “No” response). In the second block, twenty target pictures were randomly presented (ten representing target words that contained the target phoneme, requiring a “Yes” response, and ten that did not, requiring a “No” response). The presentation order of the trials was randomized within each block and the order of presentation of the two blocks was counterbalanced across participants.
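The block structure described above can be sketched in a few lines of Python. This is only an illustrative sketch and not the DMDX script used in the study: the picture names are placeholders, the way the twenty pictures of the second block were chosen is not stated in the text (random sampling is assumed here), and the alternating counterbalancing rule in `block_order` is likewise an assumption.

```python
import random

def build_blocks(pictures, rng):
    """Build two randomized blocks of (picture, expected_response) trials."""
    # Block 1: every picture appears twice, once as a "Yes" and once as a "No" trial.
    block1 = [(pic, resp) for pic in pictures for resp in ("Yes", "No")]
    # Block 2: twenty pictures, ten "Yes" and ten "No" trials.
    # Assumption: the twenty pictures are sampled at random from the full set.
    chosen = rng.sample(pictures, 20)
    block2 = [(pic, "Yes") for pic in chosen[:10]] + [(pic, "No") for pic in chosen[10:]]
    # Trial order is randomized within each block.
    rng.shuffle(block1)
    rng.shuffle(block2)
    return block1, block2

def block_order(participant_index):
    """Counterbalance block order by alternating it across participants (assumed rule)."""
    return (1, 2) if participant_index % 2 == 0 else (2, 1)

pictures = [f"picture_{i:02d}" for i in range(1, 35)]  # placeholder names
block1, block2 = build_blocks(pictures, random.Random(0))
print(len(block1), len(block2), len(block1) + len(block2))  # 68 20 88
```

Under these assumptions the two blocks together contain 88 trials, which agrees with the total of 88 stimuli mentioned in the Analysis section.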

Instrumentation

The seventeen target phonemes were pre-recorded (audio) using PRAAT software (version 5.3). The recording was done in a sound-treated room at an appropriate intensity, and the target phonemes were uttered by a native Kannada-speaking adult. Colored pictures representing the thirty-four bisyllabic target words were selected from the internet and saved in jpg format. DMDX software (version 5) was used to present the target phonemes to be monitored and the pictures, and to record the reaction time and accuracy of the subjects’ manual responses on the computer.

Design

In both blocks, each trial began with an opening screen of 700ms followed by auditory presentation of the pre-recorded target phoneme. The inter stimulus interval (ISI) between the auditory presentation of the target phoneme and the visual presentation of the target picture was randomly varied between 700ms, 1400ms and 2100ms in order to reduce anticipatory button presses. The target picture then appeared on the screen for 3000ms, during which the participant’s response time was measured. The same target picture was presented again after a gap of 500ms for the participant to name it aloud; this was done to check whether the child was thinking of the target word, as opposed to another word, when responding to the monitoring task. Presentation of the next trial in the sequence was initiated automatically after 3000ms in case of “No” response. This was programmed in the DMDX software (version 5) with the help of technical staff. The subjects were asked to indicate “Yes” if the target phoneme was present in the target word and “No” if it was not. Five catch trials were given for practice. Figure 1 illustrates the steps followed in programming the presentation of each trial of both blocks in DMDX software.
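The sequence of events within one trial can be laid out as a simple timeline. The sketch below is a hedged illustration in Python rather than the actual DMDX item file: the duration of the recorded phoneme is not reported, so `phoneme_duration_ms` is a hypothetical parameter, and it is assumed that the ISI starts at the offset of the audio and that the picture stays on screen for the full 3000ms.

```python
import random

ISI_CHOICES_MS = (700, 1400, 2100)  # inter stimulus intervals named in the text

def trial_timeline(phoneme_duration_ms, rng):
    """Return a list of (event, onset_ms) pairs for a single trial."""
    isi = rng.choice(ISI_CHOICES_MS)  # one ISI is drawn at random per trial
    steps = [
        ("opening_screen", 700),
        ("phoneme_audio", phoneme_duration_ms),  # hypothetical duration
        ("isi", isi),
        ("picture_for_monitoring", 3000),        # response window
        ("gap", 500),
        ("picture_for_naming", 0),               # display duration not stated
    ]
    timeline, onset = [], 0
    for event, duration in steps:
        timeline.append((event, onset))
        onset += duration
    return timeline

onsets = dict(trial_timeline(400, random.Random(1)))
for event, onset in onsets.items():
    print(f"{onset:>5} ms  {event}")
```

With a hypothetical 400ms phoneme, the monitoring picture appears 700, 1400 or 2100ms after the audio ends, and the naming picture always follows 3500ms after the onset of the monitoring picture.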

Phase 2: Administration of the Tasks on CWS and CNS Groups

Picture Familiarization Task

First, the participants were familiarized with the thirty-four target pictures that were considered for the phoneme monitoring task; these pictures were then presented in random order on the laptop screen for the participants to name overtly. Whenever a participant made an error, corrective feedback was provided, i.e. the naming error was corrected by the tester and a verbal cue was given to guide the subject to the target word. Two to three attempts were allowed until the participants correctly named the target pictures that had been named incorrectly on the first attempt. After familiarization with the target pictures, the participants were instructed to monitor for the target phonemes in the target words in the phoneme monitoring task. This task took each participant 15 minutes to complete.

Phoneme Monitoring Task

Each participant was seated comfortably in front of the laptop screen and testing was done in a distraction-free environment. The participants were instructed that they would first hear a phoneme, e.g. /ṭa/, and then, after a short time gap, a picture that they had named earlier would appear on the screen, e.g. a picture of /lo:ṭa/. Since the target phonemes were presented along with the vowel /a/, the subjects were asked to monitor the target phoneme irrespective of the sound preceding or following it. On seeing the target picture, they were asked to covertly name it and to identify whether the heard phoneme occurred in the target word, irrespective of its position in the word. The response keys “key 1” and “key 2” were programmed specifically on the laptop keyboard. If the heard phoneme was in the target word, the participants were asked to press “key 1”, indicating “Yes”; if it was not, they were asked to press “key 2”, indicating “No”. The participants were instructed to press the response keys as quickly as possible. This was followed by a short time gap, after which the same picture was presented again for them to name aloud. This task took each participant 30 minutes to complete. The time taken to complete the entire experiment was approximately 70 minutes. The participants took approximately 10 minutes to complete the catch trials of each task. In the three tasks (simple motor, auditory tone and phoneme monitoring), a break was given to the participants after the completion of one block. In the phoneme monitoring task, however, a rest period was also given within the first block, i.e. after the presentation of 34 stimuli. The duration of the rest period was controlled by the participants, who were instructed to press the spacebar once they were ready to continue.

Analysis

The reaction time and accuracy of the participants’ responses were measured automatically using DMDX software. Incorrect responses were indicated by a negative sign in the software output and time-lapsed errors were indicated by -4000ms. For phoneme monitoring, the reaction times to “Yes” and “No” responses in both initial and medial positions were obtained and averaged for each subject in both groups separately. For accuracy, the number of accurate responses was counted to obtain a raw score out of the total set of 88 stimuli of the phoneme monitoring task.
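The scoring convention described above (negative values for incorrect responses, -4000ms for time-lapsed errors) can be illustrated with a short Python sketch. The function name, the example values and the choice to average only correct responses are assumptions made for illustration; the text does not state whether error trials were excluded from the mean reaction time.

```python
TIMEOUT_MS = -4000.0  # value the text gives for time-lapsed errors

def score_trials(signed_rts):
    """Summarize one participant's signed reaction times (ms).

    Positive values are treated as correct responses, -4000 as a
    time-lapsed error, and any other negative value as an incorrect response.
    """
    if not signed_rts:
        raise ValueError("no trials to score")
    correct = [rt for rt in signed_rts if rt > 0]
    timeouts = sum(1 for rt in signed_rts if rt == TIMEOUT_MS)
    errors = len(signed_rts) - len(correct) - timeouts
    # Assumption: mean reaction time is computed over correct responses only.
    mean_rt = sum(correct) / len(correct) if correct else None
    accuracy = 100.0 * len(correct) / len(signed_rts)
    return {"mean_rt": mean_rt, "accuracy": accuracy,
            "errors": errors, "timeouts": timeouts}

# Hypothetical output values for five trials of one participant.
summary = score_trials([1650.2, -1820.5, 2010.0, -4000.0, 1499.8])
print(summary)
```

In this made-up example, three of the five trials are correct, one is an incorrect key press and one is a time-lapsed error, giving an accuracy of 60%.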

Results

Phoneme Monitoring Task

Comparison of Speed of Phoneme Monitoring in Phoneme Monitoring Task Across CWS and CNS and also within CWS Group

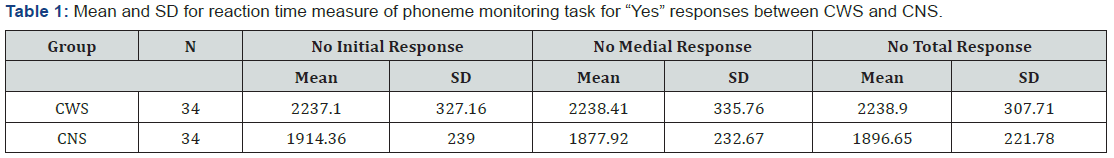

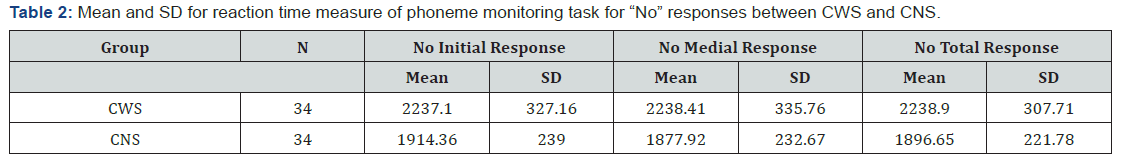

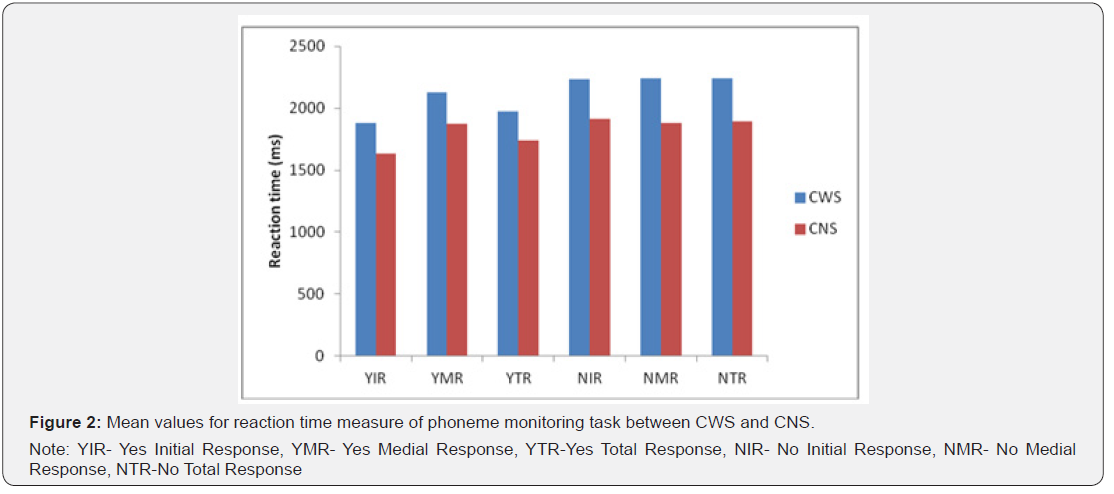

Since the data of both groups were normally distributed, the independent t test was used. The results showed a significant difference between the CWS and CNS groups in phoneme monitoring response time for “Yes” responses (t(66)=3.49; p<0.01) and “No” responses (t(66)=5.26; p<0.001). A significant difference was found between the CWS and CNS groups in the speed of monitoring the presence of the target phoneme in the initial position (t(66)=3.81; p<0.001) and in the medial position (t(66)=2.51; p<0.05). For “No” responses, there was also a significant difference between the groups in both the initial (t(66)=4.64; p<0.001) and medial (t(66)=5.14; p<0.001) positions. Comparison of the mean values showed that the CWS group was slower than the CNS group in eliciting both “Yes” and “No” responses, and that this held for target phonemes in both the initial and medial positions. Table 1 indicates the mean and SD values of phoneme monitoring response times of “Yes” responses for the CWS and CNS groups. Table 2 represents the mean and SD values of phoneme monitoring response times of “No” responses for the CWS and CNS groups. Figure 2 represents the mean values for the reaction time measure of the phoneme monitoring task for CWS and CNS.
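As a worked check, the between-group t values for overall “Yes” and “No” responses can be recomputed from the group means and standard deviations reported in this section, assuming n=34 per group and the pooled-variance (Student) form of the independent t test. The Python sketch below is illustrative only; the function name is hypothetical, and the assumption of equal variances is ours, since the text does not state which variant was used.

```python
import math

def independent_t(mean1, sd1, n1, mean2, sd2, n2):
    """Pooled-variance independent t statistic from summary statistics."""
    df = n1 + n2 - 2
    pooled_var = ((n1 - 1) * sd1 ** 2 + (n2 - 1) * sd2 ** 2) / df
    se = math.sqrt(pooled_var * (1 / n1 + 1 / n2))
    return (mean1 - mean2) / se, df

# Means and SDs (ms) as reported for "Yes" and "No" responses; CWS first, CNS second.
t_yes, df = independent_t(1974.97, 318.70, 34, 1738.23, 233.70, 34)
t_no, _ = independent_t(2238.90, 307.71, 34, 1896.65, 221.78, 34)
print(round(t_yes, 2), round(t_no, 2), df)  # 3.49 5.26 66
```

Under these assumptions the recomputed values agree with the reported t(66)=3.49 and t(66)=5.26.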

Within each group, comparisons were made between phoneme monitoring time for “Yes” and “No” responses, between “Yes” responses in the initial and medial positions, and between “No” responses in the initial and medial positions. Paired t test results showed the difference between the reaction times for “Yes” and “No” responses to be significant (CNS: t(33)=6.32; p<0.001; CWS: t(33)=4.90; p<0.001): the reaction time for “Yes” responses (CNS: M=1738.23; SD=233.70; CWS: M=1974.97; SD=318.70) was shorter than the reaction time for “No” responses (CNS: M=1896.65; SD=221.78; CWS: M=2238.90; SD=307.71). The reaction time for monitoring the presence of the phoneme in the initial position (CNS: M=1635.59; SD=206.74; CWS: M=1879.17; SD=310.14) was significantly shorter (CNS: t(33)=5.22; p<0.001; CWS: t(33)=3.09; p<0.01) than in the medial position (CNS: M=1870.70; SD=356.89; CWS: M=2129.32; SD=482.49). For the control group, the reaction time for “No” responses in the initial position (M=1914.36; SD=239.00) was longer than in the medial position (M=1877.92; SD=232.67), whereas for the CWS group, the reaction time for “No” responses in the initial position (M=2237.10; SD=327.16) was shorter than in the medial position (M=2238.41; SD=335.76); in neither group was the difference significant (CNS: t(33)=1.35; p>0.05; CWS: t(33)=0.03; p>0.05).
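Unlike the between-group test, the paired t values cannot be recomputed from the reported means and SDs alone, because they depend on the within-participant differences. The Python sketch below therefore only illustrates the computation on made-up reaction times; the data and the function name are hypothetical.

```python
import math
import statistics

def paired_t(first, second):
    """Paired t statistic for two measurements taken on the same participants."""
    if len(first) != len(second):
        raise ValueError("both conditions need one value per participant")
    diffs = [b - a for a, b in zip(first, second)]
    n = len(diffs)
    se = statistics.stdev(diffs) / math.sqrt(n)  # standard error of the mean difference
    return statistics.mean(diffs) / se, n - 1

# Hypothetical mean reaction times (ms) of four participants.
yes_rt = [1600.0, 1700.0, 1800.0, 1900.0]
no_rt = [1700.0, 1850.0, 1900.0, 2050.0]
t_value, df_paired = paired_t(yes_rt, no_rt)
print(round(t_value, 2), df_paired)
```

With 34 participants per group, the same computation yields the 33 degrees of freedom reported above.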

Comparison of Percentage of Error Phoneme Monitoring Responses in Phoneme Monitoring Task Across CWS and CNS and also within CWS Group

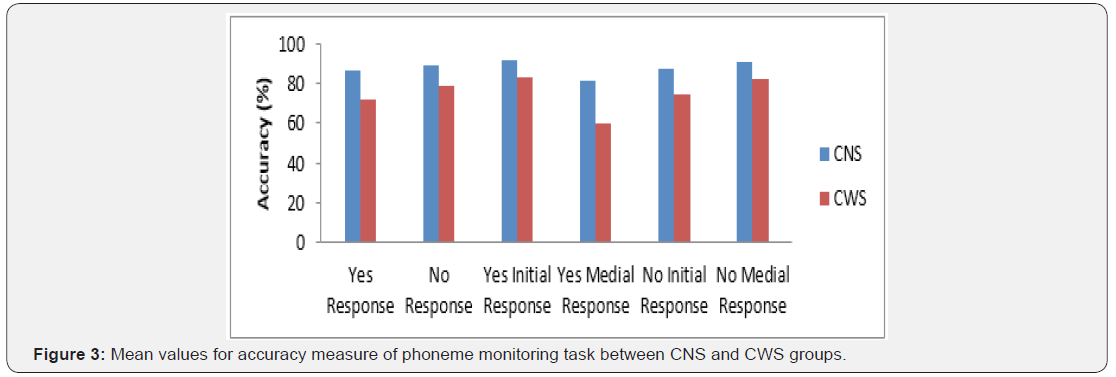

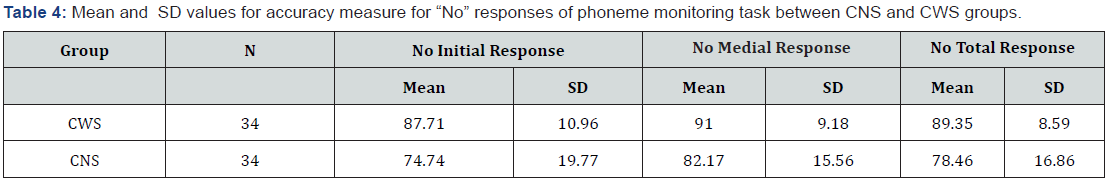

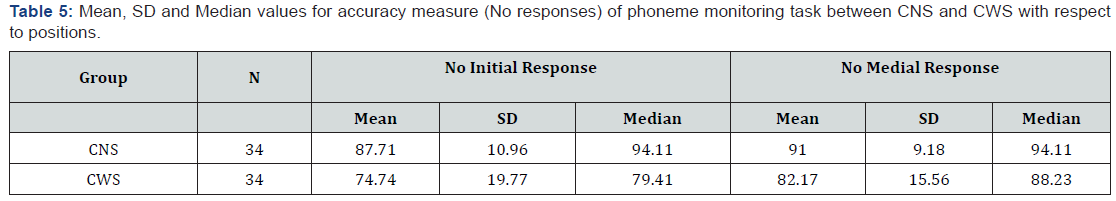

Since the accuracy data of the CWS and CNS groups were not normally distributed, the Mann Whitney U test was used. It showed a significant difference (|z|=3.50; p<0.001) between the groups in the phoneme monitoring accuracy measure of “Yes” responses. A significant difference (|z|=0.97; p<0.05) was also found between the groups in the accuracy measure of “No” responses. Thus, CNS were more accurate than CWS in eliciting “Yes” and “No” responses. With respect to initial and medial positions, the difference between the groups in phoneme monitoring accuracy for “Yes” (Initial: |z|=2.56; p<0.05; Medial: |z|=3.70; p<0.001) and “No” (Initial: |z|=3.11; p<0.01; Medial: |z|=3.12; p<0.01) responses was also significant. The mean values revealed that children who stutter were less accurate than children who do not stutter in monitoring the presence and absence of the target phoneme in the initial and medial positions of the target words. Tables 3 and 4 represent the mean and SD values for the accuracy measure of “Yes” and “No” responses in the phoneme monitoring task for the CNS and CWS groups. Figure 3 represents the mean values for the accuracy measure of the phoneme monitoring task for the CNS and CWS groups.
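For readers unfamiliar with the |z| values reported for the Mann Whitney U test, the following Python sketch shows one common way of obtaining them: the U statistic is computed from average ranks and converted to z with a tie-corrected normal approximation (no continuity correction). Statistical packages differ in these details, the accuracy scores below are made up, and the function names are hypothetical, so this is not a reconstruction of the study's analysis.

```python
import math

def average_ranks(values):
    """Rank values from 1..n, giving tied values the average of their ranks."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        avg = (i + j) / 2 + 1  # average of the 1-based positions i+1 .. j+1
        for k in range(i, j + 1):
            ranks[order[k]] = avg
        i = j + 1
    return ranks

def mann_whitney_z(x, y):
    """Return (U for x, z, two-sided p) using a tie-corrected normal approximation."""
    n1, n2 = len(x), len(y)
    combined = list(x) + list(y)
    ranks = average_ranks(combined)
    u1 = sum(ranks[:n1]) - n1 * (n1 + 1) / 2
    n = n1 + n2
    counts = {}
    for v in combined:
        counts[v] = counts.get(v, 0) + 1
    tie_term = sum(t ** 3 - t for t in counts.values())
    var_u = n1 * n2 / 12 * ((n + 1) - tie_term / (n * (n - 1)))
    if var_u == 0:  # all observations identical: no evidence of a difference
        return u1, 0.0, 1.0
    z = (u1 - n1 * n2 / 2) / math.sqrt(var_u)
    p = math.erfc(abs(z) / math.sqrt(2))  # two-sided p from the standard normal
    return u1, z, p

# Hypothetical accuracy percentages for two small groups.
cws_scores = [60.0, 70.0, 70.0, 80.0]
cns_scores = [80.0, 90.0, 90.0, 100.0]
u, z, p = mann_whitney_z(cws_scores, cns_scores)
print(u, round(abs(z), 2), round(p, 3))
```

The normal approximation is only reasonable for moderately large groups such as the 34 participants per group in this study; for very small samples, exact tables would normally be used instead.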

Within each group, comparisons were made between the phoneme monitoring accuracy of “Yes” and “No” responses, between “Yes” responses in the initial and medial positions, and between “No” responses in the initial and medial positions. The Wilcoxon signed rank test showed a significant difference between the accuracy of “Yes” and “No” responses for the CWS group (|z|=2.02; p<0.05) but not for the CNS group (|z|=1.66; p>0.05). A significant difference was found between the accuracy of monitoring the presence of target phonemes in the initial and medial positions (CNS: |z|=4.28; p<0.001; CWS: |z|=4.71; p<0.001). There was a significant difference between the accuracy of “No” responses in the initial and medial positions for the CWS group (|z|=3.31; p<0.01) but not for the CNS group (|z|=1.61; p>0.05). Comparison of the mean values showed that the participants elicited “No” responses (CNS: M=89.35; SD=8.59; CWS: M=78.46; SD=16.86) more accurately than “Yes” responses (CNS: M=86.59; SD=11.00; CWS: M=71.79; SD=18.39). Monitoring the presence of target phonemes in the initial position (CNS: M=92.04; SD=8.30; CWS: M=83.39; SD=15.22) was more accurate than in the medial position (CNS: M=81.14; SD=16.11; CWS: M=60.20; SD=24.66). Monitoring the absence of target phonemes in the medial position (CNS: M=91.00; SD=9.18; CWS: M=82.17; SD=15.56) was more accurate than in the initial position (CNS: M=87.71; SD=10.96; CWS: M=74.74; SD=19.77).

Discussion

Performance of CWS and CNS in Phoneme Monitoring Task

In the present study, CWS were slower than CNS in eliciting “Yes” and “No” responses, across both initial and medial positions, as indicated by the significant difference between the groups. This finding suggests that children who stutter experience difficulty in encoding phonologic units. The central capacity required for this task is assumed to be reduced among CWS, which in turn impedes their performance in the phoneme monitoring task Neilson and Neilson. In this task, the differences observed in the timing domain were dependent on the differences in simple motor responses, which were considered an inherent component of the phoneme monitoring task. The slow monitoring speed for “Yes” responses implies that, to make a “Yes” decision, children who stutter experience a delay in achieving a higher activation level of the target phoneme. It can be assumed that when they hear the target phoneme, they tend to perform a post-lexical search in order to confirm the response, which explains the delay. To make a “No” decision, it can be assumed that there is a delay in retrieving and activating the phonemes present in the name of the target picture. The findings of Sasisekaran, Brady et al. [17] are in partial agreement with those of the present study, in that the participants of their CWS group were slow in eliciting “Yes” responses only. In the present study, the “Yes” and “No” responses were minimally affected by prediction bias (since the target picture is presented twice, the response obtained on the first encounter could predict the response on the second encounter), whereas in the study by Sasisekaran et al. [17] the “Yes” responses were minimally affected and the “No” responses were affected to a maximum extent. In the study by Sasisekaran et al. [17], children who stutter did not show general monitoring deficits but did show deficits specific to the phonological encoding process. In contrast, the CWS group of the present study experienced both general monitoring and phonological encoding deficits. This finding is supported by another study (Darshini and Swapna), which found that Kannada-speaking adults who stutter (AWS) experienced a delay in encoding the phonologic codes of their own speech and also of speech generated by others; they therefore also experienced a delay in the auditory perception task. AWS thus had deficits in both phonological encoding and general monitoring abilities [19].

Many models and theories support the finding of the present study that the monitoring time of children who stutter was slow when compared to children who do not stutter. The EXPLAN theory Howell [11] states that children tend to stutter when there is a temporal asynchrony in encoding phonologic codes during motor planning and execution. Based on the WEAVER++ model Ramus et al., it can be stated that the CWS group of the present study may have experienced a delay in activating and retrieving the required phonologic codes at the lexical level. According to the influential model of Dell, children who stutter take longer to activate the appropriate phonologic segment nodes, which leads to a delay in the phonologic elaboration of the metrical frame. This explains the slow monitoring time observed among children who stutter in the current study. Under the neurolinguistic model of Perkins, Kent et al. [20], the present finding can be attributed to inefficiency in processing segmental units, which is commonly observed among children who stutter. The spreading activation model (Dell; Dell & O’Seaghdha) also supports the finding that, among children who stutter, the time taken for the target phonologic units to reach the highest level of activation is delayed when compared to children who do not stutter. Children who stutter tend to be hypervigilant in monitoring errors in their motor plan, and their threshold for initiating covert repairs is reported to be lower (vicious circle hypothesis; Vasic & Wijnen). This could account for the slow reaction time in monitoring the target phonemes observed in the CWS group of the present study when compared to CNS.

A significant difference was found between the CWS and CNS groups in phoneme monitoring accuracy for “Yes” and “No” responses, and also across initial and medial positions. The percentage of errors in eliciting “Yes” and “No” responses was higher among CWS than among CNS, and the same was observed across both positions. In contrast, Sasisekaran, Brady et al. [17] found that the two groups were comparable in accuracy for “Yes” and “No” responses; the difference between them was not statistically significant. They reported that the participants of their CWS group experienced encoding difficulties in the time domain only, and that these were not accompanied by an increased error rate. In the present study, however, the slow monitoring time of the CWS group was accompanied by an increased error rate.

The findings of the present study are congruent with those of Darshini and Swapna, in whose study AWS performed poorly in the phoneme monitoring task when compared to ANS. This was indicated by a significant difference between the groups in reaction time and accuracy measures. The AWS group took longer to elicit “Yes” responses than the ANS group and was also less accurate in eliciting them. The authors attributed this finding to a mismatch between the activation levels and the retrieval of the phonemes. In their study too, AWS performed poorly in monitoring phonemes occurring in initial, medial and final positions when compared to ANS. AWS took longer to monitor the presence of a phoneme in initial, medial and final positions, but were less accurate in monitoring phonemes in medial and final positions. Thus, for AWS, the increased reaction time was accompanied by a lower error rate when monitoring the target phoneme in initial position. According to the rationales of the covert repair hypothesis (Postma & Kolk [21]) and the spreading activation model (Dell 1986; Dell & O’Seaghdha 1991), the initial syllable is activated appropriately, although slowly. There is, however, a deficit in encoding the remaining portion of the word in their own formulated speech, since activation of the medial and final syllables was slow and was accompanied by diminished accuracy.

Sasisekaran et al. [17] also found that AWS were faster and more accurate in encoding phonemes occurring in initial position than in medial and final positions. This implies that they were sensitive to the sequential encoding of speech. AWS performed poorly in the phoneme monitoring task when compared to ANS. This group difference was attributed to the difficulties faced by AWS in storing and retrieving the speech plan from the speech buffer, as opposed to delays in the activation and selection of phonemes during phonological encoding.

Many models and theories support the finding of the present study that children who stutter were less accurate in self-monitoring than children who do not stutter. Based on the spreading activation model (Dell; Dell & O’Seaghdha), the findings of the present study imply that CWS were less accurate because competing phonologic units might have had more activation strength than the target phonologic node. A competing phonologic unit may become activated because of residual activation (it was recently selected and its activation has not yet decayed) or because of faulty activation of the units. As suggested by the covert repair hypothesis (Postma & Kolk [21]), the increased error rate of the CWS group in the phoneme monitoring task could reflect failed covert attempts to correct errors in the phonologic encoding of an utterance. The phonologic encoding deficits observed in the CWS group of the present study support this theory. Under the covert repair hypothesis, the present findings imply that CWS experience difficulty in selecting the appropriate phonemes required for the name of the target picture, and that their self-monitoring system fails to correct these errors covertly, which leads to an increased error rate in the phoneme monitoring task. Due to repeated covert repairs, the correct portions of the plan become temporarily unavailable, which results in slowed monitoring time and reduced accuracy. The finding of the present study is also supported by the vicious circle hypothesis (Vasic & Wijnen), which states that the ability to encode phonologic sequences is impaired among CWS. Vasic & Wijnen stated that a hypervigilant monitoring system results in recurrent repairs of even minor subphonemic irregularities and hence in unnecessary reformulations of the speech plan, ultimately producing a “vicious circle”. This explains the slowed reaction time and increased error rates observed in the CWS group of the present study. The increased error rate could also be due to asynchrony in the assembly of phonologic units (fault line hypothesis; Wingate). According to the neuropsycholinguistic model (Perkins, Kent et al. [20]), stuttering occurs due to a delay in linguistic processing, which arises from segmental processing inefficiency or from ineffective activation of the components that contribute to the final act of speaking. This explains why CWS were found to be delayed and less accurate in encoding phonologic codes.
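The residual-activation account given above can be made concrete with a toy sketch. This is an illustration only, not an implementation of the spreading activation model or of any analysis in the present study: the function names, the exponential decay rule, the "most active unit is selected" rule and all numeric values are assumptions introduced for the example.

```python
def decay(activation, rate, steps):
    """Exponentially decay an activation value over a number of time steps (assumed rule)."""
    return activation * (1.0 - rate) ** steps


def select_unit(activations):
    """Return the unit with the highest activation (assumed selection rule)."""
    return max(activations, key=activations.get)


def monitor(target, input_activation, residual, rate, steps):
    """Select a phonologic unit after residual activations have decayed for `steps`.

    `residual` maps recently selected (competing) units to their leftover activation.
    All quantities are hypothetical and unitless.
    """
    activations = {unit: decay(a, rate, steps) for unit, a in residual.items()}
    activations[target] = activations.get(target, 0.0) + input_activation
    return select_unit(activations)


# A competitor "b" was selected recently; the target is "p".
early = monitor("p", 0.5, {"b": 1.0}, rate=0.1, steps=1)   # competitor has barely decayed
late = monitor("p", 0.5, {"b": 1.0}, rate=0.1, steps=10)   # competitor has decayed
print(early, late)
```

In this sketch the competitor wins when little time has passed since its selection, and the target wins once the residual activation has decayed, which mirrors the verbal argument that undecayed residual activation can produce a selection error.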

On comparing the reaction times for “Yes” and “No” responses, both CWS and CNS took longer to elicit “No” responses than “Yes” responses. This may reflect hyper-monitoring; that is, they could have monitored repeatedly to ensure that the heard phoneme was not in the target word of the presented picture. Both groups followed a similar trend in the timing domain, but the CWS group had longer reaction times for both “Yes” and “No” responses. Therefore, the CWS were delayed in encoding phonemes, but they were not deviant when compared to the CNS group. In terms of accuracy, both groups were more accurate in eliciting “No” responses than “Yes” responses. The lower error rate for “No” responses could be attributed to the slower reaction time. There is no deviancy in the trend, but the CWS group was less accurate than the CNS group.
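The comparison described above rests on two measures per group and response type: mean reaction time and error rate. A minimal sketch of how such measures could be tabulated from trial-level records is given below. The record layout, the field names and the choice to average reaction time over correct trials only are assumptions made for illustration; the example values are invented and are not data from the present study, and the sketch does not reproduce the DMDX output format.

```python
from collections import defaultdict
from statistics import mean


def summarize(trials):
    """Return {(group, expected): (mean RT in ms over correct trials, error rate in %)}."""
    buckets = defaultdict(list)
    for trial in trials:
        buckets[(trial["group"], trial["expected"])].append(trial)

    summary = {}
    for key, rows in buckets.items():
        correct_rts = [r["rt_ms"] for r in rows if r["correct"]]
        mean_rt = mean(correct_rts) if correct_rts else None  # no correct trials: no RT
        error_rate = 100.0 * sum(not r["correct"] for r in rows) / len(rows)
        summary[key] = (mean_rt, error_rate)
    return summary


# Hypothetical trial records (illustrative values only).
example_trials = [
    {"group": "CWS", "expected": "Yes", "rt_ms": 1200, "correct": True},
    {"group": "CWS", "expected": "Yes", "rt_ms": 1400, "correct": False},
    {"group": "CWS", "expected": "No", "rt_ms": 1500, "correct": True},
    {"group": "CNS", "expected": "Yes", "rt_ms": 900, "correct": True},
    {"group": "CNS", "expected": "No", "rt_ms": 1100, "correct": True},
]

summary = summarize(example_trials)
for key in sorted(summary):
    print(key, summary[key])
```

Keeping the two measures side by side for each group and response type makes it possible to see whether a group is merely slower while following the same trend (delay) or shows a different pattern altogether (deviance).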

In terms of position, both the CWS and CNS groups took longer to monitor the presence of the target phoneme in medial position than in initial position. Both groups followed a similar trend in the timing domain; thus, there is no deviancy between the groups in this respect. In terms of accuracy, CWS and CNS were more accurate in eliciting “Yes” responses when the phoneme occurred in initial position than in medial position, and vice versa for “No” responses. In the accuracy measure of “Yes” and “No” responses, both groups followed a similar trend, but the CNS group was more accurate than the CWS group. Based on the rationale of the MacKay and Macdonald model, the phonologic timing nodes connected to the phoneme nodes of the phonologic system generate fewer pulses per second for activating phonemes in medial and final positions of words, whereas they generate more pulses per second for activating the initial phoneme of the word. The activation level of the initial phoneme does not get self-inhibited, and as a result the medial phonemes do not get activated under the most-primed-wins principle (the node primed to the highest level of activation is selected). This may account for the longer reaction time and lower accuracy in monitoring phonemes occurring in medial position of the word.

Both groups followed sequential encoding of speech. These findings imply that children who stutter experience more difficulty in encoding phonemes in medial position than in initial position. They might find it easier to plan initial phonemes than medial phonemes, as suggested by the EXPLAN model. The CWS group of the present study might be inefficient in segmenting the later portion of the word. As suggested by the EXPLAN model, they might have a subconscious default setting, i.e. they assume that the target phoneme they are monitoring for occurs only in initial position and not in the later portion of the word. Because of this, their monitoring of the target phoneme in medial position is delayed and less accurate. The CWS group may be less accurate in monitoring phonemes in medial position for several reasons: a delay in retrieving the appropriate phoneme because the threshold required for its activation may be raised (Dell; Dell & O’Seaghdha); the greater effort required because the later portion of the word becomes temporarily unavailable (as suggested by the EXPLAN model); a mismatch between the retrieved and activated phoneme; or segmental inefficiency. Levelt and Wheeldon [8] also reported that the monitoring latencies of their CWS group gradually increased with the serial position of the segments within the target word. The findings of the present study suggest that children who stutter may have an unstable language planning system, and this could be attributed to strong linguistic differences in certain aspects of phonological encoding.

To summarize, the CWS group performed more poorly than the CNS group in the simple motor, auditory tone and phoneme monitoring tasks in the timing domain. In terms of accuracy, CWS and CNS performed similarly in the simple motor and auditory tone monitoring tasks, but in the phoneme monitoring task the CNS group performed more accurately than the CWS group. Therefore, CWS as a subgroup have general monitoring difficulties and, more specifically, they also exhibit phonologic encoding difficulties [20-23].

Conclusion

It can be concluded that, overall, the CWS of the present study experience phoneme monitoring deficits when compared to CNS. CWS tend to be hypervigilant in monitoring errors in their motor plan, and their threshold for initiating covert repairs is reported to be lower (vicious circle hypothesis; Vasic and Wijnen, 2005). The present study adds to the theoretical knowledge on the nature of stuttering in children, especially by supporting the role of psycholinguistic factors in stuttering.

Clinical Implications

Phoneme monitoring tasks are sensitive in assessing the presence of linguistic (phonologic encoding) deficits among CWS in the Indian context. The findings would be beneficial for a holistic therapeutic approach in which the clinician works on the speech, linguistic and motoric aspects of CWS.

Acknowledgements

This work is part of the ARF project titled “Phonological encoding in the children who stutter”, 2016-17. We extend our thanks to Dr Savithri S.R, Director, All India Institute of Speech and Hearing, for granting permission to conduct the project and approving the submission of the paper.

References

- Peters HF, Starkweather CW (1990) The interaction between speech motor coordination and language processes in the development of stuttering: Hypotheses and suggestions for research. Journal of Fluency Disorders 15(2): 115-125.

- Bloodstein (2002) Early stuttering as a type of language difficulty. Journal of Fluency Disorders 27(2): 163-167.

- Bloodstein O, Gantwerk (1967) Grammatical function in relation to stuttering in young children. Journal of Speech, Language, and Hearing Research 10(4): 786-789.

- Howell P (2004) Assessment of some contemporary theories of stuttering that apply to spontaneous speech. Contemporary Issues in Communication Science and Disorders 31: 121-139.

- Dayalu, Kalinowski, Stuart, Holbert, Rastatter (2002) Stuttering frequency on content and function words in adults who stutter: A concept revisited. Journal of Speech, Language, and Hearing Research 45(5): 871-878.

- Brundage, Ratner (1989) Measurement of stuttering frequency in children’s speech. Journal of Fluency Disorders 14(5): 351-358.

- Hannah EP, Gardner JG (1968) A note on syntactic relationships in nonfluency. Journal of Speech and Hearing Research 11(4): 853-860.

- Levelt WJM (1989) Speaking: From intention to articulation. MIT Press, Cambridge MA, USA.

- Goldman, Fristoe (1986) Goldman Fristoe test of articulation. American Guidance Service.

- Helmreich HG, Bloodstein O (1973) The grammatical factor in childhood disfluency in relation to the continuity hypothesis. Journal of Speech and Hearing Research 16(4): 731-738.

- Levelt WJ, Roelofs A, Meyer (1999) A theory of lexical access in speech production. Behavioral and Brain Sciences 22(1): 1-75.

- Browman, Goldstein L (1997) The gestural phonology model. Speech production: Motor control, brain research and fluency disorders 57-71.

- Saltzman EL, Munhall KG (1989) A dynamical approach to gestural patterning in speech production. Ecological Psychology 1(4): 333-382.

- Melnick KS, Conture, Ohde RN (2003) Phonological priming in picture naming of young children who stutter. Journal of Speech, Language, and Hearing Research 46(6): 1428-1443.

- Roelofs A (2004) Seriality of phonological encoding in naming objects and reading their names. Memory & Cognition 32(2): 212-222.

- Byrd, Conture, Ohde (2007) Phonological priming in young children who stutter: Holistic versus incremental processing. American Journal of Speech Language Pathology 16(1): 43-53.

- Sasisekaran J, Brady A, Stein J (2013) A preliminary investigation of phonological encoding skills in children who stutter. Journal of Fluency Disorders 38(1): 45-58.

- Venkatesan S (2009) The NIMH socio-economic status scale: Improvised version. All India Institute of Speech and Hearing, Mysore, India.

- Sangeetha M, Geetha YV (2015) Linguistic Analysis of Mono and Bilingual Children with Stuttering. An unpublished doctoral thesis submitted to University of Mysore, India.

- Perkins WH, Kent RD, Curlee (1991) A theory of neuropsycholinguistic function in stuttering. Journal of Speech, Language, and Hearing Research 34(4): 734-752.

- Postma A, Kolk H (1993) The covert repair hypothesis: Prearticulatory repair processes in normal and stuttered disfluencies. Journal of Speech, Language, and Hearing Research 36(3): 472-487.

- Arnold HS, Conture EG, Ohde RN (2005) Phonological neighborhood density in the picture naming of young children who stutter: Preliminary study. Journal of Fluency Disorders 30(2): 125-148.

- Wolk L, Blomgren M, Smith AB (2000) The frequency of simultaneous disfluency and phonological errors in children: A preliminary investigation. Journal of Fluency Disorders 25(4): 269-281.