Automatic Determination of Fabric Composition of Clothing in E-Commerce Images

Viktoria Sorokina1* and Sergey Ablameyko2

1Belarusian State University, Belarus

2Belarusian State University, United Institute of Informatics, Belarus

Submission: February 20, 2023; Published: February 28, 2023

*Corresponding author: Viktoria Sorokina, Belarusian State University, Belarus

How to cite this article: Viktoria Sorokina and Sergey Ablameyko. Automatic Determination of Fabric Composition of Clothing in E-Commerce Images. Curr Trends Fashion Technol Textile Eng. 2023; 8(1): 555730. DOI: 10.19080/CTFTTE.2023.08.555730

Abstract

We propose a technique for identifying the fabric composition of clothing in e-commerce images that combines a generative adversarial network (GAN) with a convolutional neural network (CNN). The GAN generates synthetic images of clothing with known fabric composition, and the CNN is then trained to classify the fabric composition of real clothing images using the synthetic images as training data. Specifically, the approach generates copies of clothing images in which the fabric material is magnified to the level of fibers and fabric structure, and uses them to teach the CNN to recognize fabric types in real clothing images. The experimental results suggest that this method identifies the fabric composition of e-commerce clothing accurately, which can help improve search and browsing on e-commerce websites.

Keywords: Classification of fabric composition; Generative adversarial network; Convolutional neural network; E-commerce

Introduction

The popularity of online shopping for clothing has been growing rapidly in recent years, but a major challenge is the inability to touch and feel the clothes, which makes it hard for consumers to determine the fabric composition. Fabric composition is an important factor for consumers as it affects the durability, comfort, and care of the garment. Unfortunately, many online platforms where clothing is sold lack a standardized way of describing the products, so fabric composition information may be missing. It is time-consuming to manually prepare a full description that includes fabric properties and classification, as it requires a physical article of clothing and an expert to determine the properties of the fabric. The goal of this work is to automate this process without relying on human decision-making.

To simplify fabric classification, the proposed approach replaces the fabric of a garment, as it appears in a product image, with an enlarged image of the same material, which allows both more accurate classification and a simpler model. The method combines a generative adversarial network (GAN) and a convolutional neural network (CNN) to determine the fabric composition of clothing in e-commerce images. The main idea is to move from assessing the surface of the garment from a distance to examining the structure of the fabric, which allows more precise identification of fabric composition.

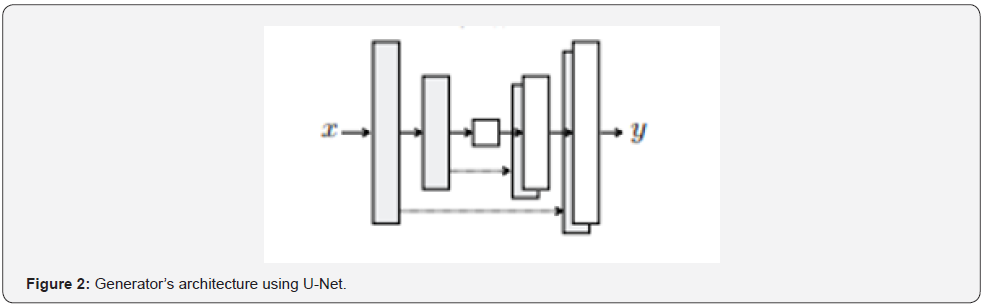

Our main contributions are: a new approach to determining fabric composition using conditional generative adversarial networks; a formulation of the classification task as a simple structure that is sufficient for high accuracy (a modification of the VGG-19 neural network with the attention model [1]); and faster GAN training through the use of the U-Net [2] architecture in the generator. In our experiments, the proposed method achieved higher classification accuracy than the approaches it was compared with.

Related Works

Classification of fabrics is important in several industries such as textile manufacturing, fashion industry, and e-commerce. This task involves categorizing different types of fabrics based on their visual characteristics, including the type of fabric (e.g. cotton, polyester, silk), weave pattern (e.g. twill, satin), texture (e.g. smooth, rough), or other visual features. This can be achieved using image-based methods where the classifier is trained to recognize different types of fabrics based on their visual characteristics, using either supervised or unsupervised learning approaches.

Previous studies [3-5] have used similar approaches to solve the task of fabric classification and identification, but the main challenge is that they were developed and tested on small datasets, which affected their accuracy. However, study [6] achieved high accuracy by using a large training dataset of approximately 10,000 fabric samples and a convolutional neural network with a Center Loss function.

In this article, the authors use a pix2pix network, which belongs to the class of conditional generative adversarial networks (cGAN), to generate enlarged images of fabric materials, and a VGG-19 convolutional neural network modified with an attention model to classify the resulting fabric samples. The main idea is to shift the focus of fabric classification from identifying the surface of clothing items from a distance to identifying the fabric structure, and then solving the task using a convolutional neural network. The goal is to develop an algorithm that can determine the composition of a clothing item from a standard image in an e-commerce product catalog.

Method

We developed a method for classifying the fabric composition of clothing in an e-commerce image. It consists of the following stages.

Segmentation: To enhance the accuracy of the method presented in the article, it is possible to segment the image in advance to differentiate the foreground, i.e., the object, from the background. The model utilized for this purpose was created by the authors and described in [7], which is based on YOLACT convolutional neural network and weight standardization.

Texture maps generation: The second step involves generating a texture map, which requires producing an image of an enlarged fabric material from a standard product image. Figure 1 illustrates the input and output images as an example.

A generative adversarial network was used for this step, and training it required a suitable dataset. Because no previous solution addressed fabric composition determination with generative adversarial and convolutional neural networks, no such dataset was available: each training example has to pair an original clothing image with an image of the enlarged fabric material. Creating such a dataset manually is time-consuming, so we opted for automatic synthesis. For this purpose, we used the open-source image database of fabric samples developed for sample classification in [8]. We also used a dataset of clothing items divided into four classes, together with the corresponding binary masks, in which 255 marks the object and 0 marks the background.
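The article does not spell out how the pairs were synthesized, so the following is only a minimal sketch of one plausible procedure: a fabric sample is tiled over the image area, and the binary mask (255 for the object, 0 for the background) decides where the texture is visible. The function names `tile_texture` and `synthesize_pair`, the white background, and the use of nested lists of RGB tuples as images are all assumptions made for illustration.

```python
from typing import List, Tuple

Pixel = Tuple[int, int, int]
Image = List[List[Pixel]]
Mask = List[List[int]]


def tile_texture(texture: Image, height: int, width: int) -> Image:
    """Repeat a fabric texture so that it covers a height x width canvas."""
    tex_h, tex_w = len(texture), len(texture[0])
    return [
        [texture[y % tex_h][x % tex_w] for x in range(width)]
        for y in range(height)
    ]


def synthesize_pair(
    mask: Mask,
    texture: Image,
    background: Pixel = (255, 255, 255),  # assumed plain catalog background
) -> Tuple[Image, Image]:
    """Build one (input, target) training pair.

    input  : garment silhouette filled with the tiled fabric texture
    target : the tiled texture alone, standing in for the enlarged fabric image
    """
    height, width = len(mask), len(mask[0])
    tiled = tile_texture(texture, height, width)
    garment = [
        # 255 in the mask marks the object, 0 marks the background.
        [tiled[y][x] if mask[y][x] == 255 else background for x in range(width)]
        for y in range(height)
    ]
    return garment, tiled
```

Calling `synthesize_pair(mask, texture)` once per combination of clothing mask and fabric sample would yield a labeled dataset without manual annotation, since the fabric class of each texture is already known.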

To solve the problem, it was necessary to choose a neural network capable of generating high-quality images indistinguishable from real ones. The pix2pix architecture [9] was chosen.
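For reference, the training objective formulated in the original pix2pix paper [9] is reproduced below. Here $x$ would be the product image, $y$ the enlarged fabric image, $z$ the noise input, $G$ the generator, and $D$ the discriminator. The article does not state whether this objective was used unchanged or which value of the weight $\lambda$ was chosen, so this should be read as the standard formulation rather than the exact configuration of the experiments.

$$
\mathcal{L}_{cGAN}(G, D) = \mathbb{E}_{x,y}\big[\log D(x, y)\big] + \mathbb{E}_{x,z}\big[\log\big(1 - D(x, G(x, z))\big)\big]
$$

$$
\mathcal{L}_{L1}(G) = \mathbb{E}_{x,y,z}\big[\lVert y - G(x, z) \rVert_1\big]
$$

$$
G^{*} = \arg\min_{G}\max_{D}\; \mathcal{L}_{cGAN}(G, D) + \lambda\, \mathcal{L}_{L1}(G)
$$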

Pix2pix, introduced in "Image-to-Image Translation with Conditional Adversarial Networks", is a conditional generative adversarial network (cGAN) used for image-to-image translation tasks. A traditional encoder-decoder generator downsamples the input through several layers until it reaches the bottleneck layer and then upsamples it back. In this study, the U-Net architecture was used instead to enhance the generator's performance. It adds skip connections between every layer i and layer n-i, where n is the total number of layers. Each connection simply concatenates all channels at layer i with those at layer n-i. The generator architecture based on U-Net is shown in Figure 2.
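The effect of the skip connections on layer widths can be illustrated with a small shape-level sketch. It assumes a decoder that mirrors the encoder, so that each decoder layer outputs as many channels as the encoder layer it is paired with; the function name and the example channel counts are hypothetical and are not taken from the article.

```python
from typing import List


def unet_decoder_input_channels(encoder_channels: List[int]) -> List[int]:
    """Input width of each layer that follows the bottleneck in a U-Net.

    encoder_channels[i] is the output width of encoder layer i, and the last
    entry is the bottleneck. The first decoder layer reads the bottleneck
    directly. Every later layer reads the previous decoder output concatenated
    with the mirrored encoder output, so its input width is doubled.
    """
    bottleneck = encoder_channels[-1]
    skips = encoder_channels[-2::-1]  # encoder outputs, deepest first
    inputs = [bottleneck]
    for skip in skips:
        decoder_out = skip  # assumed mirror of the paired encoder layer
        inputs.append(decoder_out + skip)  # channel-wise concatenation
    return inputs


def plain_decoder_input_channels(encoder_channels: List[int]) -> List[int]:
    """Same layout without skip connections, for comparison."""
    return encoder_channels[::-1]
```

For an encoder with widths `[64, 128, 256, 512]`, `unet_decoder_input_channels` returns `[512, 512, 256, 128]`, whereas the plain encoder-decoder gives `[512, 256, 128, 64]`. The extra channels carry low-level detail from the encoder directly to the layer at the same resolution, bypassing the bottleneck.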

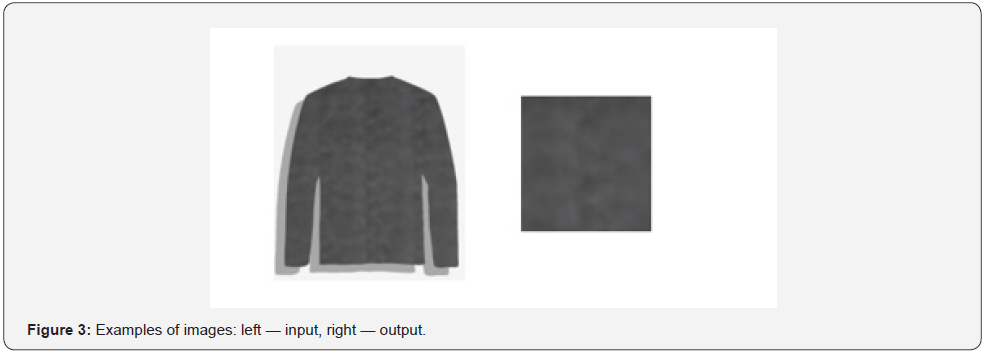

Fabric Sample Selection: This step extracts a fabric sample, a 50x50 px image that shows the texture of the fabric. To do so, the dominant color of the material is determined, and the corresponding fragment is selected on that basis from the original e-commerce clothing image. An example of input and output images is shown in Figure 3.
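One way such a selection could work is sketched below. The sketch assumes that the dominant color is found by counting coarsely quantized colors and that the chosen fragment is the window containing the most pixels of that color; neither detail is given in the article. It also assumes the input has already been restricted to the garment, since a plain background would otherwise dominate the count. All function names and the quantization step are hypothetical.

```python
from collections import Counter
from typing import List, Tuple

Pixel = Tuple[int, int, int]
Image = List[List[Pixel]]


def quantize(pixel: Pixel, step: int = 32) -> Tuple[int, ...]:
    """Map a color to a coarse bin so that similar shades are counted together."""
    return tuple(channel // step for channel in pixel)


def dominant_color_bin(image: Image, step: int = 32) -> Tuple[int, ...]:
    """Return the most frequent quantized color in the image."""
    counts = Counter(quantize(p, step) for row in image for p in row)
    return counts.most_common(1)[0][0]


def select_fabric_patch(image: Image, size: int = 50, step: int = 32) -> Image:
    """Return the size x size window with the most dominant-color pixels."""
    height, width = len(image), len(image[0])
    if height < size or width < size:
        raise ValueError("image is smaller than the requested patch")
    target = dominant_color_bin(image, step)
    best_score, best_origin = -1, (0, 0)
    # Brute-force scan; adequate for a sketch, slow for large images.
    for top in range(height - size + 1):
        for left in range(width - size + 1):
            score = sum(
                quantize(image[y][x], step) == target
                for y in range(top, top + size)
                for x in range(left, left + size)
            )
            if score > best_score:
                best_score, best_origin = score, (top, left)
    top, left = best_origin
    return [row[left:left + size] for row in image[top:top + size]]
```

The default `size=50` matches the 50x50 px sample described above; a production version would likely replace the nested loops with a summed-area table or an array library.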

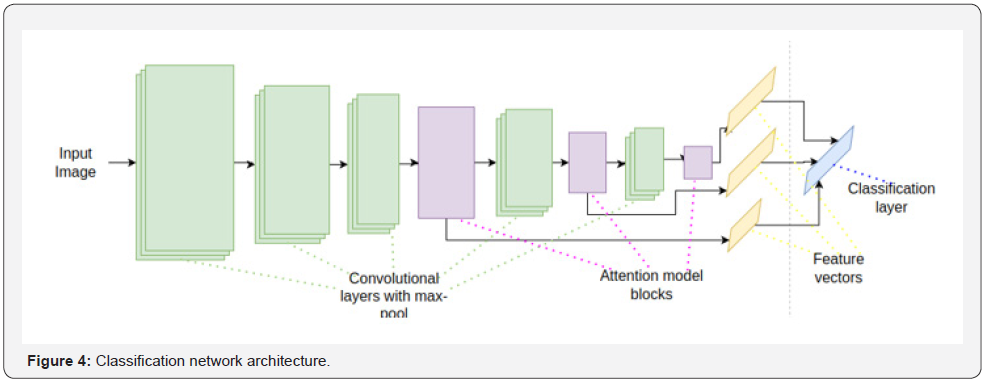

Fabric Sample Classification: Next, a model was built based on VGG-19 [10] and the attention model [11] by analogy with our work [1] for classifying samples.

The general structure of the VGG-19 network with the attention model is shown in Figure 4. To prevent overfitting and improve generalization, a pre-trained VGG-19 network was used with its fully connected layers removed. Attention blocks are added after the third, fourth, and fifth pooling layers of the VGG-19 network. Finally, the outputs of the three attention blocks are combined to form the final feature vector.
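The combination of attention outputs can be sketched as follows. This is an illustration under stated assumptions, not the authors' implementation: each attention block is modeled as a softmax-weighted sum of local feature vectors, with weights derived from dot products with a query vector, and the three block outputs are assumed to be combined by concatenation. The query vectors, the function names, and the tiny feature sizes are hypothetical.

```python
import math
from typing import List

Vector = List[float]


def softmax(scores: Vector) -> Vector:
    """Numerically stable softmax."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention_pool(local_features: List[Vector], query: Vector) -> Vector:
    """Weighted sum of local features; weights come from dot-product scores."""
    scores = [
        sum(f * q for f, q in zip(feature, query)) for feature in local_features
    ]
    weights = softmax(scores)
    dim = len(query)
    return [
        sum(w * feature[d] for w, feature in zip(weights, local_features))
        for d in range(dim)
    ]


def combine_attention_outputs(
    feature_maps: List[List[Vector]], queries: List[Vector]
) -> Vector:
    """Concatenate the pooled output of each attention block (assumed)."""
    combined: Vector = []
    for local_features, query in zip(feature_maps, queries):
        combined.extend(attention_pool(local_features, query))
    return combined
```

In this sketch, `feature_maps` would hold the flattened spatial features taken after the third, fourth, and fifth pooling layers, and the concatenated vector would feed the final classifier. Using several depths lets the classifier see both fine fabric texture and coarser structure.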

Experiments and Results

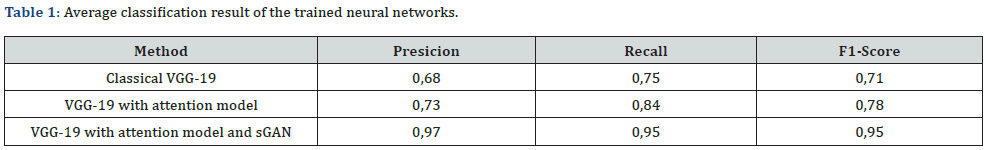

The task was to classify 10 types of fabric used in e-commerce garments with three neural networks: the classical VGG-19, VGG-19 with an attention model, and VGG-19 with both an attention model and pix2pix. The models were trained on an NVIDIA T4 GPU, and performance was evaluated using precision, recall, and F1-score. The classification accuracy is presented in Table 1.
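For readers unfamiliar with these metrics, the sketch below computes per-class precision, recall, and F1-score from true and predicted labels, along with their macro average. The function names and the example fabric labels are illustrative only, and the article does not say which averaging scheme was used for the reported values.

```python
from typing import Dict, List, Tuple

Metrics = Tuple[float, float, float]  # (precision, recall, f1)


def per_class_metrics(y_true: List[str], y_pred: List[str]) -> Dict[str, Metrics]:
    """Compute precision, recall, and F1-score for every class label."""
    result: Dict[str, Metrics] = {}
    for label in sorted(set(y_true) | set(y_pred)):
        pairs = list(zip(y_true, y_pred))
        tp = sum(t == label and p == label for t, p in pairs)
        fp = sum(t != label and p == label for t, p in pairs)
        fn = sum(t == label and p != label for t, p in pairs)
        precision = tp / (tp + fp) if tp + fp else 0.0
        recall = tp / (tp + fn) if tp + fn else 0.0
        f1 = (
            2 * precision * recall / (precision + recall)
            if precision + recall
            else 0.0
        )
        result[label] = (precision, recall, f1)
    return result


def macro_average(metrics: Dict[str, Metrics]) -> Metrics:
    """Unweighted mean of each metric over all classes."""
    count = len(metrics)
    sums = [sum(values[i] for values in metrics.values()) for i in range(3)]
    return (sums[0] / count, sums[1] / count, sums[2] / count)
```

With `y_true = ["cotton", "cotton", "silk", "silk"]` and `y_pred = ["cotton", "silk", "silk", "silk"]`, the class "cotton" has precision 1.0 and recall 0.5, while "silk" has precision 2/3 and recall 1.0, which shows why both metrics are reported alongside their harmonic mean.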

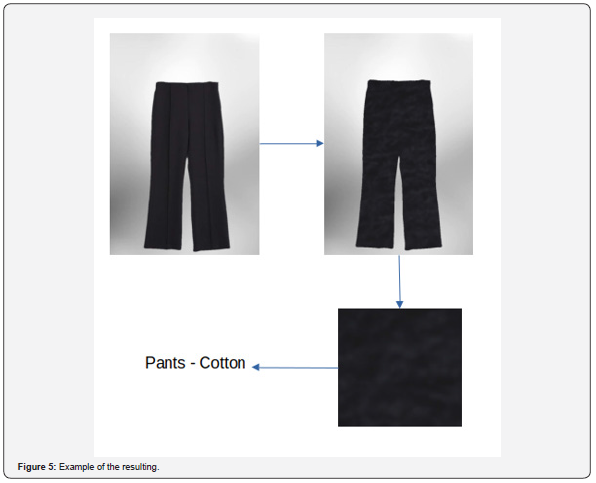

An example of an image classified with the developed algorithm is presented in Figure 5.

Discussion and Conclusion

The original contribution of this study lies in the creation of a model that combines both generative adversarial and convolutional neural networks to address the challenge of identifying the fabric composition of clothing items in the realm of e-commerce. This approach yields improved outcomes without necessitating physical resources like material samples or a domain expert to tackle the problem.

During the experiment, a generative adversarial network was utilized to synthesize and annotate a dataset of e-commerce products. Neural networks were then constructed to identify the fabric composition of clothing items, and their performance was evaluated. The findings demonstrated that the novel method for recognizing fabric in clothing achieved higher accuracy compared to previously known approaches. Moreover, incorporating the attention model also yielded positive outcomes, resulting in improved metrics.

The presented method can be applied to other neural network architectures. It can also be used to solve a regression problem in which the percentage composition of the material must be established.

References

- Sorokina V, Ablameyko S (2021) Extraction of Human Body Parts from the Image Using Convolutional Neural Network and Attention Model. In: Proceedings of 15th International conference Pattern Recognition and Information Processing. Minsk, UIIP NASB, pp. 84-88.

- Ronneberger O, Fischer P, Brox T (2015) U-Net: Convolutional Networks for Biomedical Image Segmentation. In: Medical Image Computing and Computer-Assisted Intervention - MICCAI 2015. Lecture Notes in Computer Science, vol. 9351. Springer, Cham.

- Suciati N, Herumurti D, Wijaya AY (2016) Fractal-based texture and HSV color features for fabric image retrieval. In: IEEE International Conference on Control System, Computing and Engineering. pp. 178-182.

- Jing J, Li Q, Li P, Zhang L (2016) A new method of printed fabric image retrieval based on color moments and gist feature description.

- Cao Y, Zhang X, Ma G, Sun R, Dong D (2017) SKL algorithm based fabric image matching and retrieval. In: International Conference on Digital Image Processing. p. 104201F.

- Wang, Xin S, Alex Z, Yueqi (2019) Fabric Identification Using Convolutional Neural Network. Doi: 10.1007/978-3-319-99695-0_12

- Sorokina V, Ablameyko S (2020) Neural network training acceleration by weight standardization in segmentation of electronic commerce images. In: Studies in Computational Intelligence. Vol. 976, pp. 237-244.

- Kampouris C (2016) Fine-grained material classification using micro-geometry and reflectance. In: 14th European Conference on Computer Vision - Amsterdam.

- Isola P, Zhu JY, Zhou T, Efros AA (2017) Image-to-image translation with conditional adversarial networks. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition. pp. 1125-1134.

- Simonyan K, Zisserman A (2015) Very Deep Convolutional Networks for Large-Scale Image Recognition. In: International Conference on Learning Representations, San Diego, pp. 1137-1149.

- Bahdanau D, Cho K, Bengio Y (2015) Neural Machine Translation by Jointly Learning to Align and Translate. In: 3rd International Conference on Learning Representations.