Reconstruction of Historical Buildings using Structure from Motion (SfM) Applications. An Operational Evaluation and Graphic Analysis

Jose Luis Cabanes*

Polytechnic University of Valencia, Spain

Submission: May 14, 2019; Published: May 23, 2019

*Corresponding Author: Jose Luis Cabanes, Universidad Politécnica de Valencia, Spain

How to cite this article: Jose Luis Cabanes. Reconstruction of Historical Buildings using Structure from Motion (SfM) Applications. An Operational Evaluation and Graphic Analysis. Civil Eng Res J. 2019; 8(3): 555737. DOI: 10.19080/CERJ.2019.08.555737

Abstract

The digital reconstruction of historic buildings has received a recent boost from automatic close-range photogrammetry applications that can quickly produce accurate digital surface models (DSM). We provide an overview of these applications and assess some of our recent work in order to frame their capabilities. We examine these applications from a graphic point of view, since the resulting digital meshes create visual patterns that open new frontiers in the graphic expression of historic buildings, so that classic line drawings are no longer the only means of interpretation.

Keywords: Close-Range Photogrammetry; SfM Applications; DSM Models; Mesh Editing

Structure from Motion (SfM) and 3D Reconstruction

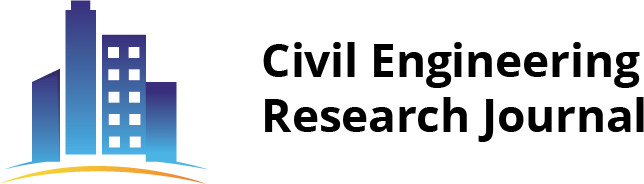

Automatic photogrammetry rests on new theoretical foundations. It is worth remembering that when digital photogrammetry began, just 30 years ago, it was entirely manual, and its results could only be output as line drawings. Photo-modelling techniques later added the possibility of photo-texturing surfaces, initially through a manual workflow, and the processes were then automated in parallel with advances in computer vision. During the first decade of this century, semi-automatic applications appeared that handled, with a high level of automation, the two basic phases of a project: building the camera model and then exploiting it. The camera model was built using coded or backlit targets to identify orientation points, and its exploitation was facilitated by linear correlation techniques applied to image pairs (based on the principles of epipolar geometry), which first recovered depth as a point cloud and then produced a meshed model or DSM (digital surface model) (Figure 1).
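Correlation along epipolar lines can be illustrated with a minimal sketch. It assumes a rectified image pair, so that each epipolar line coincides with an image row, and uses zero-mean normalized cross-correlation (NCC) as the similarity measure. The function names, window size, and disparity range are illustrative assumptions, not the settings of any particular application.

```python
import numpy as np

def ncc(a: np.ndarray, b: np.ndarray) -> float:
    """Zero-mean normalized cross-correlation of two equally sized windows."""
    a = a - a.mean()
    b = b - b.mean()
    denom = np.sqrt((a * a).sum() * (b * b).sum())
    return float((a * b).sum() / denom) if denom > 0 else 0.0

def match_along_row(left, right, row, col, half=3, max_disp=32):
    """Hypothetical helper: find the disparity of pixel (row, col) in `left`
    by scanning the same row of `right` (its epipolar line after rectification)."""
    template = left[row - half:row + half + 1, col - half:col + half + 1]
    best_d, best_score = 0, -1.0
    for d in range(max_disp + 1):
        c = col - d  # candidate column in the right image
        if c - half < 0:
            break
        window = right[row - half:row + half + 1, c - half:c + half + 1]
        score = ncc(template, window)
        if score > best_score:
            best_d, best_score = d, score
    return best_d, best_score
```

Restricting the search to one row is what makes the epipolar constraint useful: a 2D search becomes a 1D scan, and the disparity found is inversely proportional to depth.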

In a third stage, applications were developed that run automatically with minimal operator intervention. The novelty lies in the first phase, performed by a set of algorithms known as structure from motion (SfM), which resolves the inherent complexity of sets of convergent shots and produces results of acceptable visual quality. Although the focus was initially on aerial photogrammetry and robotics, applications were quickly developed for fields such as photogrammetric surveying, automatic reconstruction for virtual reality (so that, for example, virtual objects can be integrated into real scenes), reconstruction from video sequences, and macro-panoramas (Figure 2).

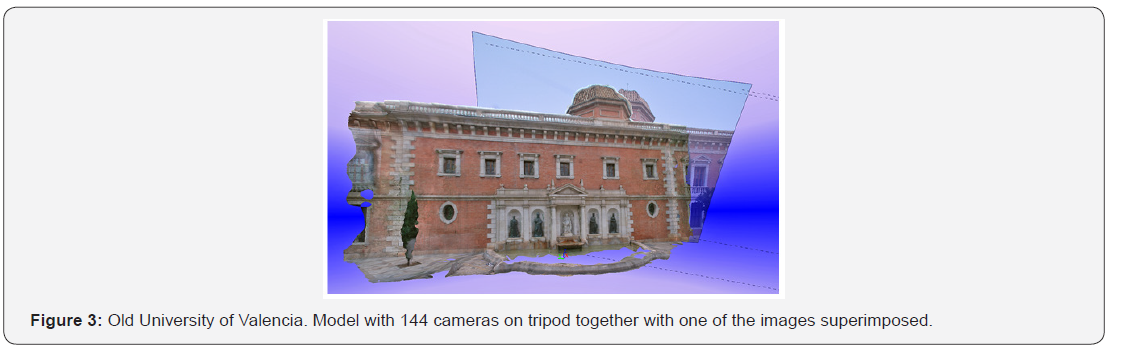

Understanding the relevant SfM algorithms is worthwhile because it helps us build an operational protocol for scanning buildings and urban scenes from sequences of photographs. The process generally has four stages: key point identification, correlation (matching), projective reconstruction, and metric reconstruction. The first stages rely on search algorithms that scan the entire image for high-contrast pixels to use as key points. Each key point is defined by its coordinates and by a 'descriptor' that encodes the characteristics of its neighborhood, such as SIFT (scale-invariant feature transform) or SURF (speeded-up robust features) [1]. A correlation step then evaluates the match between the descriptors of the original image and those of a second (target) image within a given search area [2] (Figure 3).
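The descriptor matching step can be sketched as a nearest-neighbor search in descriptor space. The sketch assumes the descriptors are already available as NumPy arrays (for example 128-dimensional SIFT vectors, with at least two descriptors in the target image) and, as an added assumption not stated in the text, applies Lowe's ratio test to reject ambiguous matches; the 0.8 ratio is a common default, not a value taken from the applications evaluated here.

```python
import numpy as np

def match_descriptors(desc1, desc2, ratio=0.8):
    """Return index pairs (i, j) where desc2[j] is the nearest neighbor of
    desc1[i] and is clearly closer than the second-nearest neighbor."""
    # Pairwise Euclidean distances, shape (len(desc1), len(desc2)).
    d = np.linalg.norm(desc1[:, None, :] - desc2[None, :, :], axis=2)
    matches = []
    for i, row in enumerate(d):
        nn = np.argsort(row)[:2]  # nearest and second-nearest candidates
        if row[nn[0]] < ratio * row[nn[1]]:
            matches.append((i, int(nn[0])))
    return matches
```

The brute-force distance matrix keeps the idea visible; production pipelines usually replace it with approximate nearest-neighbor search, optionally limited to a search area as described above.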

The inevitable errors in this process, caused by excessive disparity between shots, shadows, glare, or other factors, are then corrected using robust adjustment algorithms such as RANSAC (random sample consensus). These algorithms fit alignments to randomly sampled sets of key points using orthogonal regression and discard matches whose residuals exceed a given threshold, revealing them as false matches (these are usually more common in photos taken far from the point of interest). With these data it is possible to begin the 'projective reconstruction' of the sequence using the fundamental matrix F, which starts from the projectivity that exists between the epipolar lines of a photo pair. By transforming this into a relationship between the coordinates of points on these lines, the number of orientation points obtained in each image can be increased without prior knowledge of the camera positions [3].
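The combination of RANSAC and the fundamental matrix can be sketched as follows. This is a textbook variant chosen for illustration, not the procedure of any specific application: F is estimated with the normalized eight-point algorithm, and the residual used to separate inliers from false matches is the orthogonal distance from each point to its epipolar line. The threshold, iteration count, and function names are assumptions.

```python
import numpy as np

def normalize_points(pts):
    """Hartley normalization: centroid to the origin, mean distance sqrt(2).
    Returns homogeneous points and the 3x3 transform T."""
    c = pts.mean(axis=0)
    s = np.sqrt(2) / np.mean(np.linalg.norm(pts - c, axis=1))
    T = np.array([[s, 0, -s * c[0]], [0, s, -s * c[1]], [0, 0, 1]])
    return np.column_stack([pts, np.ones(len(pts))]) @ T.T, T

def eight_point(x1, x2):
    """Normalized eight-point estimate of F with x2^T F x1 = 0.
    x1, x2: (N, 2) pixel coordinates of N >= 8 correspondences."""
    p1, T1 = normalize_points(x1)
    p2, T2 = normalize_points(x2)
    # One row per correspondence of the linear system A f = 0 (f = F row-major).
    A = np.column_stack([p2[:, [0]] * p1, p2[:, [1]] * p1, p1])
    F = np.linalg.svd(A)[2][-1].reshape(3, 3)
    # Enforce rank 2 so that all epipolar lines meet at the epipole.
    U, S, Vt = np.linalg.svd(F)
    F = U @ np.diag([S[0], S[1], 0.0]) @ Vt
    F = T2.T @ F @ T1  # undo the normalization
    return F / np.linalg.norm(F)

def epipolar_distance(F, x1, x2):
    """Orthogonal distance (pixels) from each x2 to its epipolar line F x1."""
    h1 = np.column_stack([x1, np.ones(len(x1))])
    h2 = np.column_stack([x2, np.ones(len(x2))])
    lines = h1 @ F.T  # row i is F @ h1[i]
    return np.abs(np.sum(lines * h2, axis=1)) / np.hypot(lines[:, 0], lines[:, 1])

def ransac_fundamental(x1, x2, threshold=1.0, iterations=500, seed=0):
    """Fit F to random 8-point samples and keep the largest consensus set;
    matches farther than `threshold` from their epipolar line are rejected."""
    rng = np.random.default_rng(seed)
    best = np.zeros(len(x1), dtype=bool)
    for _ in range(iterations):
        sample = rng.choice(len(x1), size=8, replace=False)
        F = eight_point(x1[sample], x2[sample])
        inliers = epipolar_distance(F, x1, x2) < threshold
        if inliers.sum() > best.sum():
            best = inliers
    # Final least-squares refit on every inlier.
    return eight_point(x1[best], x2[best]), best
```

Note that no camera positions are needed: F is recovered from point correspondences alone, which is what allows the projective reconstruction to start from an uncalibrated sequence.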

It is then possible to recover the relative position of each camera (with its pinhole orientation parameters) using the essential matrix E and the coplanarity condition between the baseline vector joining the cameras of a photo pair and the corresponding image vectors. This phase is known as 'metric reconstruction' or 'clustering' [4]. The results are then optimized globally, following various strategies, by least-squares adjustment of the coordinates of the computed points, until the sum of the back-projection errors falls below a threshold.
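The step from F to E and from E to the relative camera pose can be sketched with the standard SVD-based decomposition. The sketch assumes calibrated cameras with known intrinsic matrices K1 and K2. In normalized coordinates E expresses the coplanarity condition x2n^T E x1n = 0 between the baseline and the two image rays. The decomposition yields four candidate poses; choosing the one that places triangulated points in front of both cameras is omitted here. The translation is recovered only up to scale, so a metric model still needs an external scale reference.

```python
import numpy as np

def essential_from_fundamental(F, K1, K2):
    """E = K2^T F K1, valid for normalized coordinates: x2n^T E x1n = 0."""
    return K2.T @ F @ K1

def decompose_essential(E):
    """Return the four (R, t) candidates encoded by an essential matrix.
    t is a unit vector: the baseline direction, with undetermined sign and scale."""
    U, _, Vt = np.linalg.svd(E)
    # Keep proper rotations (det = +1); E is only defined up to sign anyway.
    if np.linalg.det(U) < 0:
        U = -U
    if np.linalg.det(Vt) < 0:
        Vt = -Vt
    W = np.array([[0.0, -1.0, 0.0], [1.0, 0.0, 0.0], [0.0, 0.0, 1.0]])
    t = U[:, 2]  # left null vector of E
    R1 = U @ W @ Vt
    R2 = U @ W.T @ Vt
    return [(R1, t), (R1, -t), (R2, t), (R2, -t)]
```

In a full pipeline these relative poses only seed the global least-squares (bundle) adjustment that minimizes the back-projection errors.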

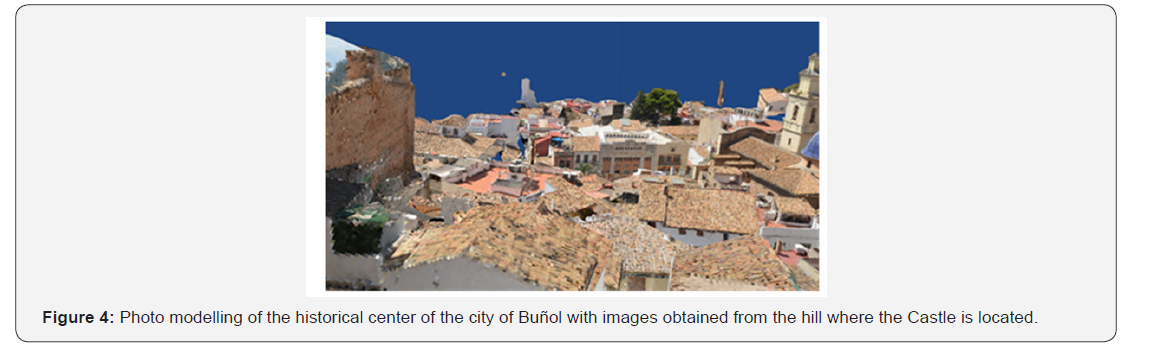

After the photo alignment has been adjusted (estimation of the interior and exterior orientation parameters), the geometry of the model can be reconstructed to form the DSM, and a triangulated envelope can then be obtained, with a real texture atlas assigned according to the normal vector of each facet (Figures 3 and 4).
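A simple way to picture texture assignment "according to the normal vectors of each facet" is to give each triangle the photograph taken most nearly head-on to it. The sketch below assumes a mesh stored as vertex and face arrays (counter-clockwise winding for outward normals) and a list of camera centers; real applications also weigh resolution, occlusion, and seam blending, which are omitted, and the function names are hypothetical.

```python
import numpy as np

def facet_normals(vertices, faces):
    """Unit normal of each triangle (counter-clockwise winding = outward)."""
    a, b, c = (vertices[faces[:, k]] for k in range(3))
    n = np.cross(b - a, c - a)
    return n / np.linalg.norm(n, axis=1, keepdims=True)

def best_view_per_facet(vertices, faces, camera_centers):
    """Index of the camera that sees each facet most frontally."""
    normals = facet_normals(vertices, faces)
    centroids = vertices[faces].mean(axis=1)  # (F, 3)
    # Unit vectors from each facet centroid towards each camera, shape (F, C, 3).
    to_cam = camera_centers[None, :, :] - centroids[:, None, :]
    to_cam /= np.linalg.norm(to_cam, axis=2, keepdims=True)
    # Cosine between normal and direction to camera; 1 means perfectly frontal.
    cos = np.einsum("fk,fck->fc", normals, to_cam)
    return np.argmax(cos, axis=1)
```

Choosing the most frontal view minimizes foreshortening of the texture, which is the same reason frontal shots are stressed in the acquisition strategy.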

Acquisition and Processing Strategy

The photo-set construction phase is undoubtedly the most delicate from an analytical point of view because, in the absence of a stable geometric configuration, a reliable data acquisition strategy is required. We have focused on three aspects: the characteristics of the images, the planning of the shot sequence, and the influence of photo scale on the quality of the resulting model [5]. The results are summarized below in broad terms.

The preparation of the photographic material is crucial, as the file size (in MB) of the generated model is closely linked to several shooting conditions. Format and image quality are decisive, which is why the density of models derived from video sequences is generally limited. Some applications do not accept mixed formats, image qualities, or focal lengths, and in any case mixing them is generally inadvisable. Uniform color histograms directly improve the success of the matching process, so manually adjusting the lighting and bracketing shots (as in macro-panoramas) is important for equalizing exposure and white balance across the frames of the sequence. Camera calibration is also relevant: working with idealized images (corrected for lens distortion) gives better results because the linear regression processes are more accurate.
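Exposure and white-balance equalization across a sequence can be approximated, for illustration only, by scaling each color channel of every frame so that its mean matches that of a reference frame. This per-channel (diagonal) gain model is an assumption chosen for simplicity, not the procedure of any application discussed here, and it does not replace careful exposure control at capture time.

```python
import numpy as np

def match_channel_gains(frames, ref_index=0):
    """Scale each RGB frame (H, W, 3, floats in [0, 1]) so that its
    per-channel means equal those of the reference frame."""
    ref_means = frames[ref_index].reshape(-1, 3).mean(axis=0)
    out = []
    for img in frames:
        gains = ref_means / img.reshape(-1, 3).mean(axis=0)
        out.append(np.clip(img * gains, 0.0, 1.0))  # keep values in range
    return out
```

Matching channel means removes global color casts and brightness offsets, which are exactly the differences that make corresponding pixels look dissimilar to a correlation-based matcher.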

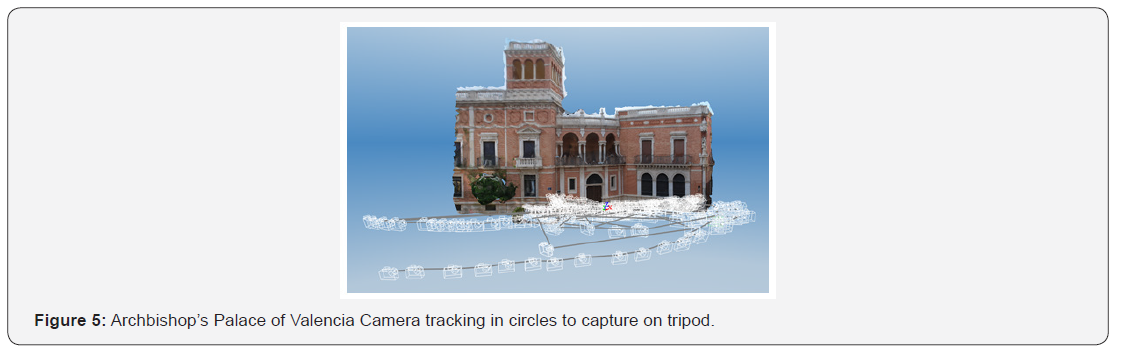

The planned shot sequence is crucial for projective reconstruction and for building the DSM. It must address overlap, shooting direction, and the average photo scale of the shots. The aim is to achieve as much continuous overlap as possible (around 80% between consecutive shots, with limited rotations). The shooting direction is also conditioned by the geometry of the model, and it is essential to capture each orientation of its surfaces from frontal angles. In this respect, we have demonstrated the effectiveness of sequences describing arcs around the model (or sections of it) with nadir and azimuth angles of differing signs, so that every orientation is recorded from a sufficiently frontal position. Spliced auxiliary sequences and close-ups are very effective for maintaining the continuity of the registration (Figure 5).
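The 80% overlap target can be turned into a shooting interval with simple geometry. Assuming a pinhole camera pointed squarely at a roughly planar façade from distance D, one frame covers a width W = D × sensor width / focal length, and consecutive stations may be at most (1 − overlap) × W apart. The lens and sensor values below are illustrative assumptions.

```python
import math

def shot_spacing(distance_m, focal_mm, sensor_width_mm, overlap=0.8):
    """Maximum distance between consecutive stations for a given overlap,
    assuming shots taken parallel to a planar façade."""
    footprint = distance_m * sensor_width_mm / focal_mm  # width of one frame (m)
    return (1.0 - overlap) * footprint

def shots_needed(facade_length_m, distance_m, focal_mm, sensor_width_mm, overlap=0.8):
    """Number of stations needed to cover a façade strip of the given length."""
    footprint = distance_m * sensor_width_mm / focal_mm
    if facade_length_m <= footprint:
        return 1
    step = shot_spacing(distance_m, focal_mm, sensor_width_mm, overlap)
    # The small tolerance absorbs floating-point rounding in the division.
    return math.ceil((facade_length_m - footprint) / step - 1e-9) + 1
```

For example, at 10 m from the façade with a 24 mm lens on a 36 mm-wide sensor, each frame covers 15 m, stations can be at most 3 m apart, and a 30 m façade needs 6 stations. Arcs, close-ups, and auxiliary sequences add to this baseline count.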

The density of the resulting depth map is also directly linked to the average photo scale of the sequence. This can be controlled more precisely in applications running in local mode, where the sampling rate can be adjusted during DSM formation together with the depth filtering rate (which discards points computed by direct triangulation whose error ellipsoids are too large). For web-service applications, our tests confirm that photo scales of 1:500 yield overall densities consistent with a reconstruction scale of 1:50.
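The relationship between photo scale and the detail available on the object can be checked with simple ratios. The sketch assumes a pinhole camera, for which the photo scale is 1:N with N = D / f, and the size of one pixel projected onto the object (the ground sampling distance, GSD) is the pixel pitch multiplied by N. The 0.2 mm graphic tolerance used to relate this to a drawing scale is a common surveying convention assumed here, and the camera values are illustrative.

```python
def photo_scale_denominator(distance_m, focal_mm):
    """N in the photo scale 1:N of a shot taken at distance D with focal length f."""
    return distance_m * 1000.0 / focal_mm

def gsd_mm(distance_m, focal_mm, pixel_pitch_um):
    """Size of one image pixel projected onto the object, in millimeters."""
    return pixel_pitch_um / 1000.0 * photo_scale_denominator(distance_m, focal_mm)

def drawing_tolerance_mm(drawing_scale_denominator, graphic_error_mm=0.2):
    """Real-world size of the smallest distinguishable mark on a drawing."""
    return graphic_error_mm * drawing_scale_denominator
```

With a 24 mm lens at 12 m (photo scale 1:500) and a 5 µm pixel, one pixel covers 2.5 mm on the façade, while a 1:50 drawing resolves about 10 mm; the margin leaves room for a reduced sampling rate during DSM formation.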

This acquisition strategy largely determines how the various applications operate. Web-service applications generally offer fewer settings overall, but they significantly increase productivity thanks to the large processing capacity of the remote servers. Some applications even decompose the model into clusters of manageable size for parallel processing, thereby increasing both the extent that can be processed and the speed. Local-mode applications allow some manual control over every phase and offer several quality levels for camera alignment (with the option of manually marking orientation points), depth recovery (DSM), and texture mapping.
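How the web services partition large blocks is not documented in the applications evaluated here, but the general idea can be sketched: divide an ordered photo sequence into chunks of bounded size that share a few frames with their neighbors, so each chunk can be processed independently and the shared frames later tie the partial models together. The chunk size, the number of shared frames, and `process_chunk` are hypothetical.

```python
from concurrent.futures import ThreadPoolExecutor

def split_into_chunks(images, max_size=50, shared=5):
    """Split an ordered list into chunks of at most `max_size` items;
    consecutive chunks share `shared` items for later registration."""
    if not 0 <= shared < max_size:
        raise ValueError("need 0 <= shared < max_size")
    step = max_size - shared
    chunks = []
    for start in range(0, len(images), step):
        chunks.append(images[start:start + max_size])
        if start + max_size >= len(images):
            break
    return chunks

def process_chunk(chunk):
    """Hypothetical stand-in for per-cluster alignment and dense matching."""
    return len(chunk)

def process_in_parallel(images, max_size=50, shared=5, workers=4):
    """Process every chunk concurrently and return the results in order."""
    chunks = split_into_chunks(images, max_size, shared)
    with ThreadPoolExecutor(max_workers=workers) as pool:
        return list(pool.map(process_chunk, chunks))
```

The shared frames are the design choice that matters: without them, each partial model would live in its own coordinate system with nothing to register it against its neighbors.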

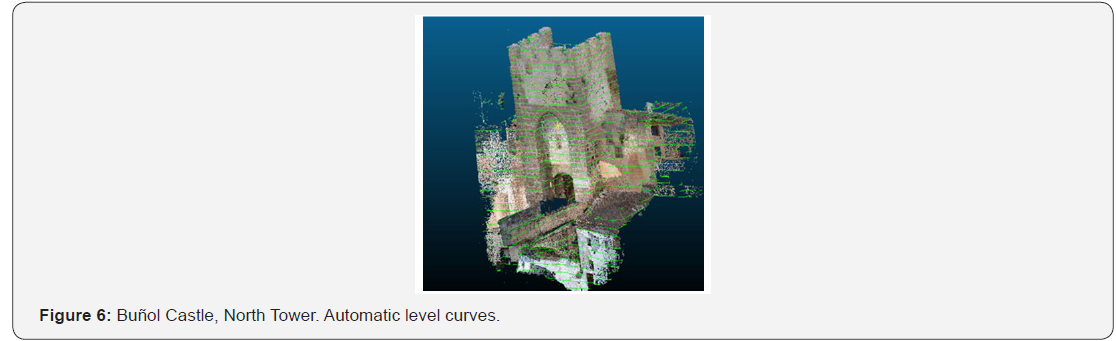

Conclusions: New Directions for Graphical Analysis of Historic Buildings

So far, we have briefly discussed an approach to automatic photo-modelling of historic buildings and highlighted how the latest techniques significantly improve the capacity to recover 3D information and produce reliable measurements (Figure 6). These photo models have also become much more accessible with the development of multi-sensor capture techniques that combine laser scanning with photogrammetry (from tripods and drones) or even video photogrammetry. These techniques allow us to address issues such as “complexity, occlusions, structures variety and inaccessible locations (…), that will affect capturing all the geometric details of such structures”, which require new methodologies “to collect large amount of data from various positions that must be accurately registered and integrated together” [6,7].

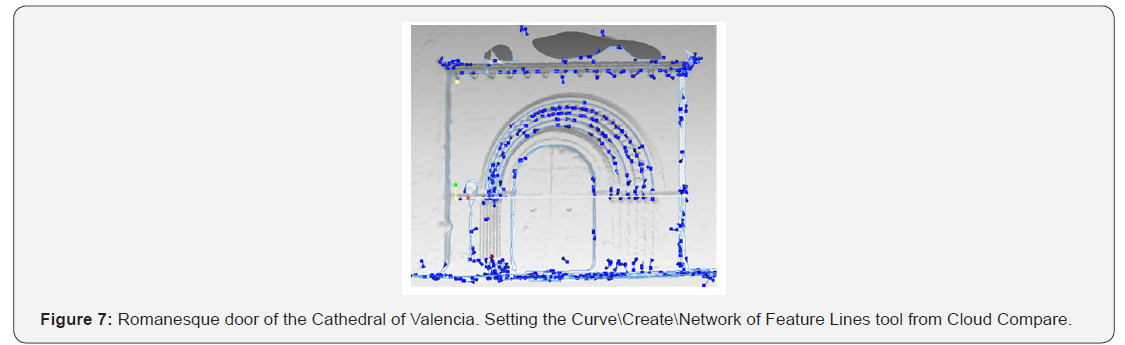

The other central question is how to frame these developments so that the resulting graphics provide useful metric and documentary information. In this regard, we can offer some basic conclusions. First, we have acquired a new method of describing buildings that complements classical line drawings. Photo-textured meshes are attractive because of their life-like appearance and the possibility of immersive navigation. However, presentation in other formats must be recognized as equally valuable. These applications interpret graphical variables such as lines or shaded areas as parts of drawings, and not simply as intersecting edges and apparent contours (as has been the case until now). “All of this research is focused on theoretical and practical goals using 2D and 3D models – and these methods and technologies are used for representing the intrinsic characteristics of an object (geometry, topology, and texture) and then advancing one level of analysis and making a critical recovery of information” [8].

With this premise, we tested post-processing tools for reverse engineering at a basic editing level (filtering, orientation, and scaling) and at an advanced level (subdivision, contours, edges, and segmentation). Results such as 3D sectional projections and the contour lines shown earlier can be obtained automatically through suitable operational use (i.e. without manual geometric interpretation by the operator). This makes the detailed evaluation of complex geometric models possible, as is necessary for historic buildings, where surveys must objectively capture sculptural details and random elements of damage as well as provide information on rendering and basic contours. Interestingly, this connects with the intentions of the first reconstruction drawings obtained with mechanical instruments by Maurice Carbonnell and other pioneers. This type of result was almost unattainable with the older manual methods of digital photogrammetry (Figures 6-8).
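Contour lines and horizontal sections of this kind reduce to intersecting the mesh with a family of planes. The sketch below slices a triangle mesh with the plane z = h and returns the resulting line segments; chaining segments into polylines and handling vertices lying exactly on the plane are left out, and sections at other orientations can be obtained by rotating the mesh first. The function name and array layout are assumptions for illustration.

```python
import numpy as np

def slice_mesh(vertices, faces, h):
    """Return segments ((x0, y0), (x1, y1)) where the plane z = h cuts the
    triangles of a mesh given as (V, 3) vertices and (F, 3) vertex indices."""
    segments = []
    for tri in faces:
        pts = vertices[tri]
        d = pts[:, 2] - h  # signed height of each vertex above the plane
        crossings = []
        for i, j in ((0, 1), (1, 2), (2, 0)):
            if d[i] * d[j] < 0:  # this edge strictly crosses the plane
                s = d[i] / (d[i] - d[j])  # interpolation parameter along the edge
                p = pts[i] + s * (pts[j] - pts[i])
                crossings.append((float(p[0]), float(p[1])))
        if len(crossings) == 2:
            segments.append(tuple(crossings))
    return segments
```

Calling this function for a sequence of heights h produces the contour set directly from the DSM, with no manual interpretation of the geometry.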

References

- Barazzetti L, Remondino F, Scaioni M (2010) Extraction of accurate tie points for automated pose estimation of close-range blocks.

- Kraus K (1993) Photogrammetry (4th edn). Ferd. Dümmlers Verlag, Bonn. ISBN 3-427-78684-6.

- Pollefeys M, Vergauwen M, Van Gool L (2000) Automatic 3D modeling from image sequences. ISPRS, Vol. XXXIII, Amsterdam, Netherlands, p. 1-8.

- Lindequist C (2010) 3D Reconstruction of buildings from images with automatic façade refinement. Master’s thesis in Vision, Graphics and Interactive Systems. Aalborg University, Denmark, pp. 451-460.

- Beraldin JA, Guidi G, Ciofi S, Atzeni C (2002) Improvement of metric accuracy of digital 3D models through digital photogrammetry. A case study: Donatello's Maddalena. IEEE Proceedings of the International Symposium on 3D Data Processing Visualization and Transmission, Padova, Italy.

- Remondino F, El-Hakim S, Girardi S, Rizzi A, Benedetti S, et al. (2009) Virtual Reconstruction and Visualization of Complex Architectures. Proceedings of the ISPRS Working Group V/4 Workshop 3D-ARCH.

- Fernández-Lozano J, Gutiérrez-Alonso G (2016) Improving archaeological prospection using localized UAVs assisted photogrammetry: An example from the Roman Gold District of the Eria River Valley (NW Spain). Journal of Archaeological Science: Reports 5: 509-520.

- Bianchini C (2014) Survey, Modeling, Interpretation as multidisciplinary components of a knowledge system. Scientific Research and Information Technology 4(1): 15-24.