A Mathematical Approach to Measurement of Academic Visibility in Universities

Nwachukwu MA* and Uzoije AP

Department of Environmental Management, Federal University of Technology Owerri, Nigeria

Submission: June 20, 2018; Published: August 10, 2018

*Corresponding author: Nwachukwu MA, Department of Environmental Management, Federal University of Technology Owerri, Nigeria, Tel: +2348163308776; Email: futo.essg@hotmail.com

How to cite this article: Nwachukwu MA, Uzoije AP. A Mathematical Approach to Measurement of Academic Visibility in Universities. Ann Rev Resear. 2018; 2(5): 555598. DOI: 10.19080/ARR.2018.02.555598

Abstract

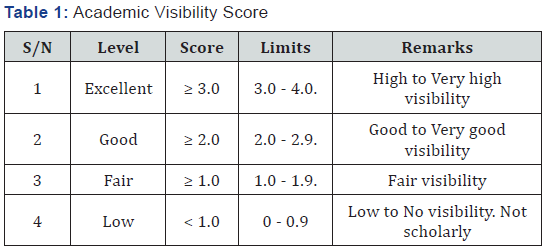

Research and publication, which mark how scholarly a university's faculty is, remain the vital tools for measuring the academic visibility of a university and of its faculty. Online social network tools notwithstanding, quality journals remain the most efficient means of projecting a university and its faculty to global visibility. In this paper, statistical model equations for measuring the academic visibility of faculty (AVF) and of a university (AVU) are formulated as a novel contribution. Unlike the traditional citation and scientometric methods, this new mathematical method is fast and provides a score board on which 3.0-4.0 represents high to excellent visibility, 2.0-2.9 good to very good visibility, 1.0-1.9 fair visibility, and 0.0-0.9 zero to low visibility. The university library should take up the challenge of measuring and promoting academic visibility, while faculty members should be obliged to submit their publications to the university library annually.

Keywords: Research publication; Journal ranking; Faculty; University; Visibility equations

Abbreviations: AJOL: African Journals Online; STS: Science Technology and Society Studies; SCI: Science Citation Index

Introduction

Universities share three levels of visibility: local, regional and global. Universities with local visibility trade their products and research publications locally, while universities with regional and global visibility trade theirs regionally and globally respectively. There is a need to continuously discuss the idea of academic visibility in universities, particularly those of developing countries. It is possible that over 70% of research in these universities ends up in local journals. The quality of research and publication partly depends on the profile of research grants and the technology available to researchers in a university. Much of the quality of research and publication, and hence the visibility of a university, also depends on the ability, exposure and scholarly ambition of its faculty. The visibility of a university grows from the local to the regional and then to the global level, and is measured largely by research potential, publications and societal impact. Universities and their products are ranked locally, regionally and worldwide largely on the basis of their impact and visibility, which are in turn functions of research and publications. Most universities that have achieved global visibility (world-class universities) started from zero and, over 100-200 years, progressively followed advances in science and technology to reach their present level. With present advances in science and technology, young universities need not take as many years to become globally visible, all other factors being equal. Funding, above other factors, has remained a critical issue militating against the visibility of universities in developing countries. The concept of academic visibility was with universities in a quiet manner over the years, until the advancement of internet technology and the birth of open access journals made it a conspicuous issue among scholars and universities.
While some universities are enhancing their visibility, others are declining. A university would be making a great mistake by not monitoring or measuring its visibility as it ages. One notable technological factor militating against the research potential of some universities is the lack of research software and poor knowledge of modeling techniques. In the past (pre-internet), academic visibility was measured on the basis of the number of books written and sold, journal articles, professional affiliations and conference presentations or attendance. Presently, the internet provides cheap and fast visibility far beyond traditional borders. There is no excuse for any faculty member to have poor visibility.

Most often, excuses have been based on the non-availability of research funding and facilities, which are contested across nations. This notwithstanding, a hardworking researcher could reach global visibility through review papers. Research based on literature review can be very highly rated, significant and highly visible to the global research community. All high-quality journals call for review papers, and all high-quality papers, whether original or review, call for hard work. Many researchers dread review papers because of the extensive desk work involved, and because they do not know what a review paper is or how to write one, yet it remains a classical line of research. Detailed guidelines on how to write a good review paper are widely available online. According to the archive of the University of Texas [1], review articles are: “An attempt by one or more writers to sum up the current state of research on a particular topic”. Ideally, the writer searches for everything relevant to the topic and then sorts it all out into a coherent view of the topic as it now stands. Review articles show the main people working in a field, recent advances and discoveries, significant gaps, current debates and new research ideas. Unlike research articles, review articles are good places to get a basic idea about a topic.

Journal rankings or evaluations have been provided simply through institutional lists established by academic leaders or through committee vote. These approaches have been notoriously politicized and are inaccurate reflections of actual prestige and quality, as they often reflect the biases and personal career objectives of those involved in ranking the journals; they also cause the problem of highly disparate evaluations across institutions [2]. Consequently, many institutions have required external sources of evaluation of journal quality. The traditional approach here has been through surveys of leading academics in a given field, but this approach too has potential for bias, though not as profound as that seen with institution-generated lists [3]. As a result, governments, institutions, and leaders in scientometric research have turned to a litany of observed journal-level bibliometric measures that can be used as surrogates for quality and thus eliminate the need for subjective assessment [2]. Several journal-level metrics have been proposed, and most cited journals are ranked based on a number of factors such as impact factor, Eigenfactor, SCImago Journal Rank, h-index and Google Scholar rankings. Researchers can easily identify high- and low-ranking journals, and indexed or non-indexed journals, in their area of research interest. Local journals are usually not ranked or indexed, while regional journals may be ranked or indexed in regional databases such as the African Journals Online (AJOL) database. Global journals are globally ranked and indexed in international databases such as Web of Science (ISI), Journal Citation Reports, PubMed, Scopus, Index Copernicus, Science, Technology and Society Studies (STS), Thomson Reuters, Science Citation Index (SCI), Abstracting and indexing as topic, EBSCO, EMBASE, BioMed, Mendeley and a few others.

Literature Review

According to Leahey, visibility is a form of social capital such that if an academic is prolific, then there is a greater likelihood of being highly visible, leading to other scholarship opportunities [4]. Webber [5] expressed the dominance of research in ranking universities, stating that the prestige of a university will be judged by the research output of that university. The traditional means of assessing academic productivity and reputation has been citation analysis. Citation analysis for scholarly evaluation has a very extensive literature that weighs its appropriateness within and across disciplines and offers nuanced discussion of a range of metrics [6-8]. Recently, metrics like the h-index, g-index, and e-index have been adopted by Google Scholar (GS) to provide web-based citation analysis previously limited to citation indexes like ISI and Scopus. This model of citation analysis has become popular as open access scholarship becomes increasingly common. Traditional citation analysis has significant limitations because it does not accommodate many aspects of scholarly activity. Dewett & Denisi [9] measured the visibility of universities' scientific production using scientometric methods. Both citation analysis and the scientometric methods are clumsy, slow and often frustrating [9].

Methodology

The New Visibility Model Equation

Academic visibility measurement can be fast, easy and widely applied using the New Visibility Model Equations, which run on three tools:

Number of journal publications

Journal quality and

Age of publication.

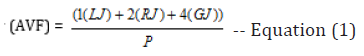

Academic Visibility of Faculty (AVF)

Where: P = Total publications of an individual faculty member not older than 15 years

LJ = Number of publications in Local Journals

RJ = Number of publications in Regional Journals

GJ = Number of publications in Global (high rank) Journals

Score per paper: LJ = 1, RJ = 2, GJ = 4

For example, if P of a faculty member = 30, LJ = 15, RJ = 10, and GJ = 5, then applying the per-paper scores gives AVF = (15 × 1 + 10 × 2 + 5 × 4)/30 = 55/30 ≈ 1.8
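The worked example above can be reproduced with a short Python sketch. The equation itself appears only as an image in the source, so the weighted-average form used here, AVF = (1·LJ + 2·RJ + 4·GJ)/P, is an assumption inferred from the stated per-paper scores and the example result of about 1.8. The function name `avf` and its argument checks are illustrative, not part of the paper.

```python
# Per-paper scores as stated in the text: local = 1, regional = 2, global = 4.
SCORE_LOCAL = 1
SCORE_REGIONAL = 2
SCORE_GLOBAL = 4


def avf(p: int, lj: int, rj: int, gj: int) -> float:
    """Academic Visibility of Faculty (assumed form, inferred from the example).

    p  : total publications not older than 15 years
    lj : publications in local journals
    rj : publications in regional journals
    gj : publications in global (high rank) journals
    """
    if min(p, lj, rj, gj) < 0:
        raise ValueError("publication counts must be non-negative")
    if lj + rj + gj > p:
        raise ValueError("journal publications cannot exceed total publications")
    if p == 0:
        # No publications in the 15-year window: treat visibility as zero (assumption).
        return 0.0
    weighted = SCORE_LOCAL * lj + SCORE_REGIONAL * rj + SCORE_GLOBAL * gj
    return weighted / p


# Worked example from the text: P = 30, LJ = 15, RJ = 10, GJ = 5.
print(round(avf(30, 15, 10, 5), 1))  # 1.8
```

Under this assumed form the score is bounded by 4.0, reached when every publication is in a global journal, which agrees with the upper limit of the score board.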

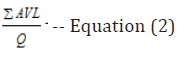

Academic Visibility of University (AVU)

Where Q = Total number of faculty in a university (less assistant and adjunct faculty)
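As a rough illustration of how a university-level score might be computed, the sketch below averages individual AVF scores over the Q counted faculty members. The AVU equation is available only as an image in the source, so this mean-of-AVF form is an assumption, and the function name `avu` and the sample scores are hypothetical.

```python
def avu(avf_scores: list[float]) -> float:
    """Academic Visibility of University, ASSUMED to be the mean AVF over Q faculty.

    avf_scores holds one AVF value per counted faculty member
    (assistant and adjunct faculty excluded, as in the definition of Q).
    """
    q = len(avf_scores)
    if q == 0:
        raise ValueError("Q must be at least 1")
    return sum(avf_scores) / q


# Hypothetical AVF scores for three faculty members (not data from the paper).
sample_scores = [1.8, 3.0, 2.4]
print(round(avu(sample_scores), 1))  # 2.4
```

If the published equation weights faculty differently, for example by rank or by publication count, only the body of `avu` would need to change.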

Analysis of typical case examples derived from the above statistical relations (1 & 2) provided limits and a classification of academic visibility in the score board presented in Table 1. The above statistical equations of visibility are limited to publication sets not older than 15 years.

Result

After several trial calculations at the Federal University of Technology Owerri, involving a number of individual faculty members at different levels, a four-level classification of academic visibility was formulated as presented in Table 1 below.
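The four-level classification can be expressed as a small lookup, sketched here in Python using the score bands stated in the abstract and conclusion. The function name `classify_visibility` is illustrative, and treating each band's lower limit as its threshold (so that a score such as 2.95 falls in the 2.0-2.9 band) is an assumption, since the source lists the bands to one decimal place only.

```python
def classify_visibility(score: float) -> str:
    """Map an AVF or AVU score to the paper's four visibility levels.

    Bands from the text: 3.0-4.0, 2.0-2.9, 1.0-1.9, 0.0-0.9.
    Assumption: a band's lower limit is its threshold.
    """
    if not 0.0 <= score <= 4.0:
        raise ValueError("score must lie between 0.0 and 4.0")
    if score >= 3.0:
        return "high to excellent visibility"
    if score >= 2.0:
        return "good to very good visibility"
    if score >= 1.0:
        return "fair visibility"
    return "zero to low visibility"


# The faculty member in the worked example scored about 1.8.
print(classify_visibility(1.8))  # fair visibility
```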

Conclusion

This paper has reviewed the concept and the state of academic visibility in universities. It has introduced a mathematical relation for computing the academic visibility of individual faculty members and of a university. Unlike the existing traditional methods, this new mathematical model makes academic visibility measurement fast and easy, with the possibility of a higher degree of accuracy. It provides a score board on which 3.0-4.0 represents high to excellent visibility, 2.0-2.9 good to very good visibility, 1.0-1.9 fair visibility, and 0.0-0.9 zero to low visibility. Several research skills and internet and online social network facilities have been identified as excellent tools for enhancing academic visibility, but these tools are to be considered after journal publications, with high preference given to publication in top-quality journals. Academic visibility should be measured annually by a section of the university library.

References

- What is a Review Article? (2011) The University of Texas. Archived from the original.

- Lowry PB, Moody GD, Gaskin J, Galletta D, Humpherys S, et al. (2013) Evaluating journal quality and the Association for Information Systems (AIS) Senior Scholars’ journal basket via bibliometric measures. MIS Quarterly 37(4): 993-1012.

- Lowry PB, Romans D, Curtis A (2004) Global journal prestige and supporting disciplines: A scientometric study of information systems journals. Journal of the Association for Information Systems 5(2): 29-80.

- Leahey E (2007) Not by productivity alone: How visibility and specialization contribute to academic earnings. American Sociological Review 72(4): 533-561.

- Webber KL (2011) Measuring Faculty Productivity. University Rankings 105-121.

- Neuhaus C, Daniel HD (2008) Data sources for performing citation analysis: an overview. Journal of Documentation 64(2): 193-210.

- Adam D (2002) Citation analysis: The counting house. Nature 415: 726-729.

- Moed HF (2005) Citation analysis in research evaluation. Vol 9. Kluwer Academic Publishers, Netherlands.

- Dewett T, Denisi AS (2004) Exploring scholarly reputation: It’s more than just productivity. Scientometrics 60(2): 249-272.